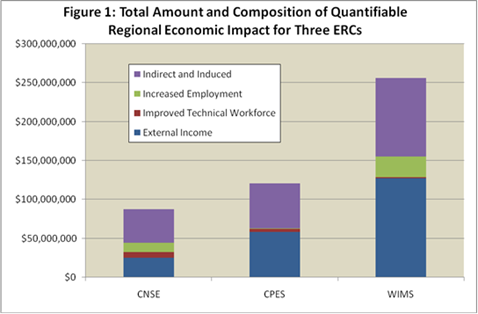

Previous chapters of this History described the evolution of the Engineering Research Centers (ERC) Program at the National Science Foundation (NSF) from the standpoint of research, education, industrial interaction, and center and Program leadership. The foregoing Chapter 10 described the Program’s impacts on academic engineering. This chapter provides an overview of selected achievements of the ERCs in systems and technology development and of some of their important impacts in industry and on the U.S. economy, including such notable advances as the portable defibrillator, the MPEG video file format, wearable computers, the prosthetic retina, and new radars for tornado detection.

The introduction to the 1984 National Academy of Engineering (NAE) report that laid out Guidelines for ERCs began by asserting two purposes that underlie ERCs:

- Enhance the capacities of engineering research universities to conduct cross-disciplinary research on problems of industrial importance.

- Lessen one of several weaknesses in engineering education: an inadequate understanding by students of engineering practice; that is, the understanding of how engineering knowledge is converted by industry into societal goods and services. [1]

The opening section of a report of a symposium held in early 1990 to review the ERC Program put it this way: “Indeed, a major goal of the ERC Program is to facilitate the more efficient conversion of advances in fundamental research in universities into high-quality, competitive products and processes in industry.”[2] The connecting bridge between fundamental research and commercialized products is innovation—and that space, including the type of education needed to achieve it and produce graduates who could “hit the ground running,” was where ERCs would prove their worth. As NAE panel Chairman W. Dale Compton said in his preface to the Guidelines report, “If the Foundation embraces the concept with enthusiasm and supports it with zeal, the program could contribute well beyond expectations.”[3] NSF did just that, and so has the ERC Program.

11-A Innovative Systems Platforms and Their Impacts

One of the primary aspects of the ERC Program’s mission was and is to make it possible—and acceptable—for academic researchers to pursue research on large-scale, next-generation engineered systems. Such systems are the core of each ERC’s vision. This work requires a coordinated, strategically planned team approach, carried out in the context of industrial perspectives and needs—something that was highly innovative and thus controversial on university campuses, especially in the early years of the Program. This section will describe examples of such systems in several broad areas of technology development.

11-A(a) Flexible/Intelligent Manufacturing

When the ERC Program was initiated, there was very little academic engineering research focused on manufacturing processes and systems, outside of chemical engineering processes for the manufacture of pharmaceuticals, oil and gas products, and food products. There had been some NSF support for robotics per se from the Research Applied to National Needs (RANN) Program in the 1970s and some follow-on support of robotics in manufacturing after the demise of RANN, but it was at the project level.[4] With the onset of the ERC Program and its goal to drive research strategically from the systems level, an ERC offered enough time and some financial support to build systems-level testbed platforms, although larger-scale testbeds would require additional support. While there were 14 ERCs that focused on manufacturing systems between 1985 and 2014, this section will focus on a few that were especially successful at creating flexible and intelligent manufacturing systems platforms for the manufacture of physical parts and products, as opposed to chemical and biological products.

Quick Turnaround Cell: The first was at the Purdue ERC for Intelligent Manufacturing Systems (ERC Class of 1985[5]), where one of the first systems-level testbeds, the Quick Turnaround Cell, was developed to enable more flexible manufacturing for industry so that design, cutting, and quality inspection could be integrated to support rapid production of small-batch and one-of-a-kind machined parts. See Section 11-B(a)iii. Advanced Manufacturing, for its eventual use by the Army Missile Command to design and machine prototype parts and its widespread impact on computer-aided design programs. In addition, a search for “Quick Turnaround CNC Machining” will show the wide variety of applications of this concept in machining and manufacturing today.

Rapid Prototype System: The next important manufacturing testbed developed at an ERC was the Rapid Prototype System developed in collaboration with General Motors (GM) by the Engineering Design Research Center (EDRC) (Class of 1986) at Carnegie Mellon University. GM asked the EDRC to work collaboratively on a technology that would be directly applied to a manufacturing issue at its Inland Fisher Guide Division. This division made lights, plastic trim, and other non-structural components. These parts changed from model year to model year, so the Division wanted to take a product from initial concept to implementation in 30-40 weeks, a process that took 60-70 weeks at the time. To support design engineers at GM, some of whom might be inexperienced, the EDRC developed a knowledge-based system that could be connected to GM’s own design system, prompting the design engineers to recognize when design rules had been violated. Working on this system on the factory floor with production engineers and technicians, the EDRC faculty and students led a completely new approach to tooling, as an alternative to the conventional machining of the time. They were able to develop a rapid tooling technology that enabled the use of metal spraying to produce prototype tools. The outcome was faster toolmaking at a cost an order of magnitude lower than with conventional machining technology.[6]

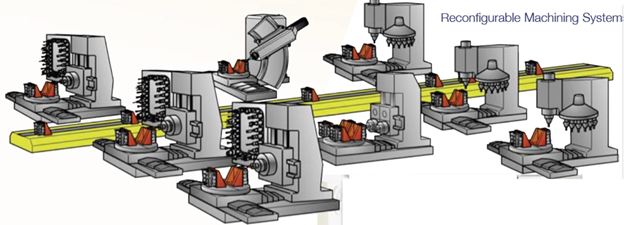

Reconfigurable Manufacturing: The ERC for Reconfigurable Manufacturing Systems (RMS), established at the University of Michigan (Class of 1996), envisioned building manufacturing systems platforms and machines with the exact capacity and functionality needed, and changing them rapidly exactly when needed, not months or years later. The visionary leader of the ERC, Yoram Koren, and his leadership team were not sure they could do this in the ten-year lifespan of an ERC. However, through close collaboration with visionary engineering leaders in industry, in less than ten years the RMS reconfigurable inspection technology was already integrated into engine production lines of the domestic auto industry, and the data reduction methodologies were widely applied in manufacturing plants.

The RMS team defined three principles that facilitate achieving cost-effective, rapid reconfiguration:

(1) adjustable production resources to respond to imminent market changes;

(2) functionality rapidly converted to the production of new parts; and

(3) adjustable, rapid response to unexpected equipment failures.[7]

The challenge was to develop mathematical tools to support reconfigurable manufacturing and then to design and build “three full-size prototypes of original machines that revolutionized the state-of-art in production engineering, particularly in real-time engine inspection at the line-speed. Inspection machines were implemented, which improved productivity in 69 production lines in 15 factories in the U.S. and Canada (e.g., Chrysler Mack Avenue Engine Plant, Ford Windsor Engine Plant, GM Flint Engine Plant, Boeing Everett Operations in Seattle, and Cummins engine plant, Columbus, Indiana.)”[8] The resulting technology is explained in depth in Section 11-B(c) of this chapter.

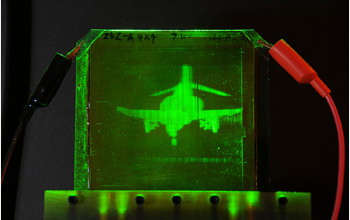

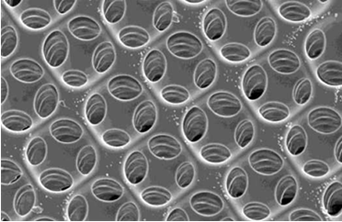

Nanomanufacturing Platforms: Physical parts come in all scales, and at present they are sometimes produced at the nanoscale, which poses challenges as nanoscale devices are integrated with other devices in a systems testbed. The ERC for Nanomanufacturing Systems for Mobile Computing and Mobile Energy Technologies (NASCENT) was established at the University of Texas at Austin in 2012. NASCENT’s vision is to create high-throughput, reliable, and versatile nanomanufacturing process systems. The novel processes and systems that result from this center’s research contribute to the development of critical nanomanufacturing infrastructure by identifying new manufacturing equipment and process systems for high-speed, low-defect, reliable nanomanufacturing that are not currently available. NASCENT is focused on developing nanosystems platforms, such as: Spin-Transfer Torque Random Access Memory (STT-RAM) with data densities exceeding a terabit/sq. in.; high-speed FETs on flex substrates that will provide bulk Si CMOS-like transistor performance at flat panel display-like costs; and rollable batteries with high energy density Si nanowire anodes for lithium batteries. Its systems platforms include: (1) the Roll-to-Roll (R2R) Nanofabrication Testbed: 2D and 3D nanosculpting on R2R flexible substrates, high-speed solvent-less R2R graphene transfer, R2R thin-film coatings with nanoscale thicknesses, R2R printable nanomaterials, and in-line nanometrology; and (2) the Flex Crystalline Nanofabrication Testbed: flexible crystalline substrates exfoliated from bulk wafers, wafer-scale 2D and 3D nanosculpting with unprecedented control in size and shape of patterns, and in-line nanometrology.

11-A(b) Nanosystems

Beyond nanomanufacturing, by 2011 there had been more than a decade of sustained funding for nanoscale research that was enriching the fundamental knowledge base about the characteristics, behavior, and functionality of a wide range of particles at the nanoscale. Some work had begun on how they could be combined into nanoscale devices and some new products using these particles had emerged, such as environmental sensors and micromechanical motors. However, at that time there had been insufficient investment in long-term research to explore how these nanoscale devices could be combined into components and systems.

To explore this frontier and determine if it could become a new system platform for innovation, the ERC Program joined with the Nanoscale Science and Engineering Program to support new nanosystems ERCs. “The Nanosystems ERCs will build on more than a decade of investment and discoveries in fundamental nanoscale science and engineering,” said Thomas Peterson, the NSF’s Assistant Director for Engineering at the time. “Our understanding of nanoscale phenomena, materials and devices has progressed to a point where we can make significant strides in nanoscale components, systems and manufacturing.”[9] Three new nanosystems ERCs were funded in 2012:

- The NSF Nanosystems Engineering Research Center for Advanced Self-Powered Systems of Sensors and Technology (ASSIST), led by Professor Veena Misra, North Carolina State University, is creating self-powered monitoring systems that are worn on the wrist to simultaneously monitor a person’s environment and health, in search of connections between exposure to pollutants and chronic disease.

- The NSF Nanosystems Engineering Research Center for Translational Applications of Nanoscale Multiferroic Systems (TANMS), led by Greg Carman of the University of California, Los Angeles, seeks to reduce the size and increase the efficiency of components and systems whose functions rely on the manipulation of either magnetic or electromagnetic fields.

- The NSF Nanosystems Engineering Research Center for Nanomanufacturing Systems for Mobile Computing and Mobile Energy Technologies (NASCENT), led by Roger Bonnecaze and S.V. Sreenivasan of the University of Texas at Austin, is pursuing high-throughput, reliable, and versatile nanomanufacturing process systems, and is demonstrating them through the manufacture of mobile nanodevices.

Curious about the actual barriers to integrating nanoscale devices with larger-scale components and systems while preparing this History, Preston asked the Directors of these three nanosystems ERCs, which were approaching their sixth year of operation at the time (2018), to give her some input regarding those challenges. Greg Carman’s response is a good example of the issues his ERC has addressed to get to the systems plane. As background, the then-current mission statement for TANMS is to “develop a fundamentally new approach coupling electricity to magnetism using engineered nanoscale multiferroic elements to enable increased energy efficiency, reduced physical size, and increased power output in consumer electronics. This new nanoscale multiferroic approach overcomes the scaling limitations present in the two-century-old mechanism to control magnetism that was originally discovered by Oersted in 1820. TANMS’s goals are to translate its research discoveries on nanoscale multiferroics to industry while seamlessly integrating a cradle-to-career education philosophy involving all of its students and future engineers in unique research and entrepreneurial experiences.”[10] In December 2018, Carman laid out three key barriers TANMS had to address to begin to reach its systems goals:

1. Increasing the voltage control of magnetism constant in materials that are presently used in magnetic memory. The TANMS team used interface materials to increase this value by an order of magnitude. The most recent results suggest that it can be increased by another two orders of magnitude, increasing the potential that this new approach may be adopted by the community in the near future. This particular Voltage Control of Magnetic Anisotropy (VCMA) is only present at length scales on the order of the “exchange length,” ~10 nm. The TANMS team discovered that adding an atomic layer of a different material modifies the electronic interaction between the materials and dramatically improves the coupling. The most recent analysis indicates that the increase is on the order of 100x, and once again this is only present at small length scales and is considered inconsequential or absent at large length scales.

2. Developing new coupled models that allow the integration of piezoelectric with magnetostrictive materials to launch electromagnetic waves from electrically small antennae. TANMS is one of the only groups in the world now to have a numerical multi-physics code that combines the elastodynamics equations developed by Newton with the electromagnetic equations developed by Maxwell, and finally with the micromagnetic equations developed by Landau, Lifshitz, and Gilbert.

3. Electromagnetic motors presently do not exist at small scales because of resistive losses; therefore, this is a speculative market in which a clear application has not yet been articulated. TANMS, working with bioengineering researchers, has found that individual cell selection for personalized medicine represents an important problem and could substantially benefit from magnetic control. The biology community has been using magnetic particles attached to cells for quite some time, but has not been able to solve the problem of individually selecting the superior cells necessary for personalized medicine. This community has found that it can tag cells with different proteins or enzymes attached to magnetic particles. These proteins or enzymes produce different luminosity, so the cells themselves can be interrogated optically through the luminescence. Importantly, certain cells have “superior” capabilities for fighting specific diseases (e.g., cancer); the goal is to select these superior cells out of a large population, culture them, and reintroduce them into the body. The biology community has tried to select the superior cells with optical tweezers, but has largely been unsuccessful. Recently, TANMS has demonstrated that its electromagnetic motor concept is well suited to this problem, with demonstrations of cell capture.
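For orientation, the three families of equations named in item 2 can be written in their standard textbook forms. This is only a generic sketch: the source does not give the TANMS formulation, the symbols below are assumptions introduced here, and the terms that actually couple the three systems (for example, piezoelectric contributions to the stress and magnetoelastic contributions to the effective field) are omitted.

```latex
% Elastodynamics (Newton): density \rho, displacement \mathbf{u}, stress \boldsymbol{\sigma}
\rho \, \frac{\partial^2 \mathbf{u}}{\partial t^2} = \nabla \cdot \boldsymbol{\sigma}

% Electromagnetics (Maxwell): Faraday and Ampere-Maxwell laws
\nabla \times \mathbf{E} = -\frac{\partial \mathbf{B}}{\partial t},
\qquad
\nabla \times \mathbf{H} = \mathbf{J} + \frac{\partial \mathbf{D}}{\partial t}

% Micromagnetics (Landau-Lifshitz-Gilbert): unit magnetization \mathbf{m},
% gyromagnetic ratio \gamma, Gilbert damping \alpha, effective field \mathbf{H}_{\mathrm{eff}}
\frac{\partial \mathbf{m}}{\partial t}
  = -\gamma \mu_0 \, \mathbf{m} \times \mathbf{H}_{\mathrm{eff}}
  + \alpha \, \mathbf{m} \times \frac{\partial \mathbf{m}}{\partial t}
```

In a coupled multi-physics model of this kind, the strain from the first equation and the fields from the second would typically enter the effective field of the third, which is what makes the three systems difficult to solve together.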

11-A(c) Collaborative Adaptive Sensing Systems

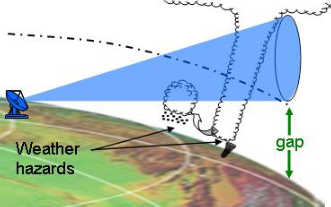

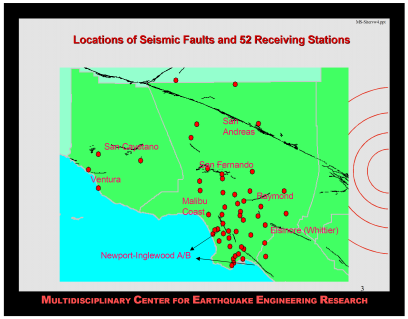

The Collaborative Adaptive Sensing Systems (CASA) ERC (Class of 2003), headquartered at the University of Massachusetts (UMass), Amherst, is a multi-university partnership among engineers at UMass and Colorado State University; atmospheric scientists at the University of Oklahoma; and social scientists at the University of Delaware. From its inception, CASA was committed to developing a new kind of sensing system to be deployed to improve forecasting of and response to high-impact weather events, which are transient and occur at heights and scales that are difficult to monitor with the existing weather infrastructure. Examples of such weather events include tornadoes, microbursts, flash floods, boundaries that initiate convection, and lofted radiological, chemical, and biological agents. At the time of the ERC’s award, monitoring practices would likely either miss these events because they form in blind regions of present-day sensors, or record them poorly because they occur when the sensor is monitoring other regions or at distances where resolution is degraded. (See Figure 11-1.) In addition, each sensor of standard operational Doppler radar systems (WSR‑88D in the U.S.) was independent from all others; hence, they were not able to coordinate in a manner that could result in optimal use. CASA was designed to prove the efficacy of networked arrays of low‑cost, closely spaced advanced radars to adaptively sense severe weather conditions (e.g., tornadoes) and use relevant observations in atmospheric numerical forecast models.

Figure 11-1: Existing weather radars leave gaps in coverage at low altitudes. (Source: CASA)
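The low-altitude gap shown in Figure 11-1 follows from simple geometry: a radar beam travels in a nearly straight line while the Earth curves away beneath it. A minimal sketch of that geometry is given below, assuming the common "4/3 effective Earth radius" approximation for standard atmospheric refraction. The function name, the 0.5-degree elevation angle, and the sample ranges are illustrative assumptions, not values taken from CASA.

```python
import math

EARTH_RADIUS_KM = 6371.0
# Standard-refraction assumption: treat the Earth as 4/3 of its true radius.
EFFECTIVE_RADIUS_KM = (4.0 / 3.0) * EARTH_RADIUS_KM


def beam_height_km(range_km: float, elevation_deg: float) -> float:
    """Height of the beam center above the radar at a given slant range."""
    theta = math.radians(elevation_deg)
    a = EFFECTIVE_RADIUS_KM
    return math.sqrt(range_km**2 + a**2 + 2.0 * range_km * a * math.sin(theta)) - a


if __name__ == "__main__":
    # 0.5 degrees is an assumed lowest elevation angle, used only for illustration.
    for range_km in (30, 100, 200):
        height = beam_height_km(range_km, 0.5)
        print(f"{range_km:>4} km range -> beam center at {height:.2f} km")
```

Under these assumptions the beam center is a few hundred meters above the ground at 30 km but several kilometers up at 200 km, which is why closely spaced radars can observe the lower atmosphere that widely spaced radars overshoot.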

The vision of CASA was to establish a revolutionary new paradigm in which a system of distributed, collaborative, and adaptive sensors (DCAS) is deployed to overcome fundamental limitations of current approaches to the detection of weather hazards. (See Figure 11-2.) Success of the Center would depend upon collaborative research between the computational, atmospheric and social sciences, and electrical engineering. CASA had to solve four fundamental problems: radar engineering to be able to develop advanced, small and inexpensive radar systems; networking to be able to perform real‑time optimal command and control of the system; atmospheric sciences to develop cutting edge algorithms and models for detection and forecasting of weather hazards; and social sciences to fully understand end user (e.g., weather forecasters, emergency managers) needs and develop optimal protocols that would be used for system control.

Figure 11-2: CASA’s new system of distributed, collaborative, and adaptive sensors (DCAS) improves weather hazard detection. (Source: CASA)

All of this work was driven by the need to develop real-time testbeds deployed in the field to predict and detect tornadoes and issue warnings to emergency responders with accurate and timely data. The impacts of the ERC are multiple and include not only improved weather forecasting, prediction, and warnings, but also development of new technologies to enable construction of low-cost radars, electronic beam-steering, and network interfaces. (See Ch. 5, Section 5-D(a)ii for further discussion of CASA’s interface with DCAS system end users.)

CASA’s major systems-level testbed was perhaps the most complex large-scale testbed launched by an ERC in a real-time environment. The CASA testbed covered a 7,000-square-km region in southwestern Oklahoma that receives an average of four tornado warnings and 53 thunderstorm warnings per year. The network consisted of four small radars spaced closely enough together to defeat the Earth curvature blockage and allow users to directly view the lower atmosphere beneath the coverage of the current national radar network. The radars operated in a closed-loop configuration, enabling the resources of the system to be preferentially allocated toward particular sub-regions of the atmosphere in response to the changing weather itself. This testbed was “end-to-end,” in that critical and substantial end-user populations were involved in the development and research trials conducted with the system. The system implemented a policy mechanism in its resource allocation calculation that preferentially allocated resources to different user populations so as to maximize the overall utility of the information collected. The network became operational in 2007 and remained in near-continuous operation for extended periods until 2011, allowing research and operational users to directly view the lower atmosphere with high-resolution observations.
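The idea of a utility-weighted allocation policy can be illustrated with a small sketch. The code below is not CASA's algorithm, which the source does not describe. It is a hypothetical greedy heuristic in which each candidate scan task has a utility for each user group, each group has a policy weight, and a limited number of radar time slots is filled in order of weighted utility per slot. All names, weights, and numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScanTask:
    name: str
    cost: int        # radar time slots needed (hypothetical unit)
    utility: dict    # user group -> utility of this scan for that group, 0..1


def weighted_utility(task: ScanTask, weights: dict) -> float:
    """Combine per-group utilities using the policy weight of each group."""
    return sum(weights.get(group, 0.0) * u for group, u in task.utility.items())


def allocate(tasks: list, weights: dict, slots: int) -> list:
    """Greedy heuristic: take tasks by weighted utility per slot while they fit."""
    ranked = sorted(tasks, key=lambda t: weighted_utility(t, weights) / t.cost,
                    reverse=True)
    chosen = []
    for task in ranked:
        if task.cost <= slots:
            chosen.append(task)
            slots -= task.cost
    return chosen


if __name__ == "__main__":
    # Hypothetical policy weights and candidate tasks for one scan cycle.
    weights = {"forecasters": 0.5, "emergency_managers": 0.3, "researchers": 0.2}
    tasks = [
        ScanTask("rotation", 2, {"forecasters": 0.9, "emergency_managers": 1.0, "researchers": 0.4}),
        ScanTask("rain_cell", 1, {"forecasters": 0.4, "emergency_managers": 0.2, "researchers": 0.3}),
        ScanTask("clear_air", 1, {"researchers": 0.6}),
        ScanTask("survey", 2, {"forecasters": 0.3, "emergency_managers": 0.1, "researchers": 0.2}),
    ]
    print([t.name for t in allocate(tasks, weights, slots=3)])
```

A greedy ranking is used here only because it is short; it does not guarantee the maximum total utility. Re-running the allocation every scan cycle with updated task utilities is what would make such a loop "closed" in the sense described above.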

The ERC pointed to significant impacts in its Final Report in 2013:

CASA’s research using the IP1 testbed demonstrated a new dimension to weather observing that leads to improved characterization of storms and the potential for better forecasting and improved warning and response to tornadoes and other hazards. During a severe thunderstorm in southwest Oklahoma in June 2011, a tornado struck the town of Newcastle where the IP1 radar network was operating and was providing real-time storm data to local emergency managers. The trajectory of the tornado was changing too rapidly for it to be accurately tracked by the nearest NEXRAD National Doppler radar, but the CASA network was able to resolve the rapid motion and provide that information to the emergency managers to better warn the public. The Oklahoma City Journal-Record reported on July 1, 2011, ‘The data from a new radar system being tested in Newcastle was so precise that refugees from the storm were able to time the closing of the town’s public shelter down to the last minute.’ City Manager Nick Nazar said: ‘The opportunity to use this advanced technology was very helpful and probably saved lives. It was literally up to the minute and it made a difference.’ As a result of the publications and presentations on this deployment, the 2008 National Research Council report on Observing Weather and Climate from the Ground Up has recommended that CASA-type technology be deployed in future radar systems, writing, ‘Emerging technologies for distributed-collaborative adaptive sensing should be employed by observing networks, especially scanning remote sensors such as radars and lidars.’[11]

As CASA reported, its “research using this testbed demonstrated a new dimension to weather observing, improved characterization of storms, better forecasting, and improved warning and response to tornadoes and other hazards. In trials conducted during the 2007-2011 Oklahoma storm seasons, CASA team members gathered data that demonstrated substantive quantitative improvements relative to the state of the art, including a 5x increase in resolution and update times compared to today’s NEXRAD system, as well as fundamentally new capabilities such as visibility down to 200 m altitude and multi-Doppler observations for estimating the atmospheric wind vector field. In a case-study assessment conducted with National Weather Service forecasters, CASA participants documented the ability of these users to reduce their estimates of surface winds by 31% when they are working with CASA data, compared to when they didn’t have these data.”[12]

As CASA neared graduation, it shifted its major testbed implementation from tornadoes to severe storms. Brenda Philips, the co-Director of CASA post-graduation, leads one of CASA’s principal sustaining activities. She contributed the accompanying case study to this History in 2018.

11-A(d) Neuroengineering Systems

Two then-new fields, biotechnology and computational neuroscience, were separately at the forefront of major advances in the last decades of the 20th century. Their confluence in the first two decades of the 21st century has catalyzed some of the most prominent new advances in science and engineering. Among the most promising new areas is the rapidly developing field of neuroengineering. This interdisciplinary research area encompasses the development of concepts, algorithms, and devices that are used to assist, understand, and interact with neural systems. It comprises fundamental, experimental, computational, theoretical, and quantitative research aimed at furthering our ability to understand and augment brain and neural function in both health and disease.

Like the other ERC systems platforms just described, neuroengineering systems serve as a platform for innovation in several ERCs, usually in the form of testbeds aimed at the development of systems to aid people with a variety of neural disorders in reducing the impact of the disorders on their lives.

Neuroprosthetics. The earliest ERC to pursue research in neuroengineering was the Center for Neuromorphic Systems Engineering (CNSE), established at Caltech in 1995. The goal of the CNSE was “to develop the technology infrastructure for endowing the machines of the next century with the senses of vision, touch, and olfaction which mimic or improve upon human sensory systems.” One of two main testbeds of the Center focused on neuroprosthetics, employing a unique cognitive approach: decoding goals, intentions, and the cognitive state of the paralyzed patient, leading to the implementation of real-time control of a robotic arm through a brain/computer interface, or probe.

Cochlear Implant. The ERC for Wireless Integrated MicroSystems (WIMS) at the University of Michigan (Class of 2000) also focused on advancing neural prosthetics, specifically a cochlear implant. The cochlear microsystem for the profoundly deaf contained a custom microcontroller, a digital signal processor that executed speech processing algorithms, a wireless chip that derived power from an external radio-frequency carrier and provided bidirectional data transfer, and an ultra-flexible thin-film electrode array that could be inserted deep within the cochlea of the inner ear.

Biomimetic Systems. Two more examples of ERC work in neuroengineering are from the Biomimetic MicroElectronic Systems (BMES) ERC at USC (Class of 2003), namely the Argus II retinal prosthesis developed by Center Director Mark Humayun and his team, and the efforts by an interdisciplinary team led by Professor Theodore Berger to restore higher cognitive functions that are lost as a result of damage (stroke, head trauma, epilepsy) or degenerative disease (dementia, Alzheimer’s disease, etc.) by focusing on long-term memory formation.

Neuroplasticity. A more recent example is the Center for Sensorimotor Neural Engineering (CSNE)[13], an ERC at the University of Washington (Class of 2011), with two testbeds aimed at engineering neuroplasticity in the damaged brain and spinal cord.

These examples and others are described in more detail in the research Chapter 5, Section 5-D(b)ii, the “Special Topics” subsection on Neuromorphic Systems Engineering.

11-B Technology/Innovation Achievements and Impacts

The pursuit of a large-scale system vision, as described in the previous section, involves a coordinated approach to fundamental research and the advancement of technology that underlies and enables the successful realization of the system. In the process, smaller-scale innovations and inventions are often achieved that are significant and highly useful advances in and of themselves. The enabling technology developed at ERCs is often licensed to their industrial members, who then attempt to take the advancement further toward commercialization. This technology transfer is a key element of the mission of government-funded ERCs, in which public funding is used to bring social and economic benefits to the Nation and its people.

This section will present just some of the hundreds of important achievements in technology development and innovation attained by the ERCs over the past 30-plus years in a wide range of fields of engineering and technology.

11-B(a) Bioengineering

Biotechnology Process Engineering Center (BPEC), Massachusetts Institute of Technology, Class of 1985

Bioprocess Technologies: Advances by BPEC in mammalian cell bioprocess technology and protein therapeutics enabled the development of a wide range of new pharmaceuticals. In its first ten years as an ERC (BPEC I),[14] these advances contributed to the following major impacts on its industrial partners:

- BioDesigner, copyrighted software for bioprocess simulation that supports the efficient design and synthesis of bioprocesses. The software was licensed to a start-up company, Intelligen, and is used presently by biotechnology companies worldwide for bioprocess simulation as well as in universities for course teaching.

- Rational-medium design based on fundamental principles in stoichiometry, biochemistry, and metabolism to increase cell viability and prolong cell culture times in animal cell culture systems, increasing the product concentration. Many companies now employ the algorithm from this research in the manufacture of mammalian cell products.

- Methodologies for the characterization of glycoprotein quality, with special emphasis on sialic acid content of therapeutic glycoproteins, which successfully demonstrated a means to increase sialic acid content in recombinant glycoproteins, thus helping industry to maintain protein quality during manufacturing.

Center for Emerging Cardiovascular Technologies (ERC/ECT), Duke University, Class of 1987

Implantable Defibrillators: The research in antiarrhythmic systems at the ERC for Emerging Cardiovascular Technologies (ECT) at Duke University, which graduated in 1996, was aimed toward developing high‑tech devices to halt or prevent ventricular fibrillation, the primary cause of sudden cardiac death. About 400,000 people succumb to sudden cardiac death annually in the United States. The ERC judged in the late 1980s that if only 10% of these individuals could be identified to be at risk and have devices implanted, the potential U.S. market would be close to a billion dollars per year and the international market several times larger.
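The market estimate above can be checked with back-of-the-envelope arithmetic. The sketch below uses only the figures quoted in the paragraph; the implied revenue per implant is a derived number that does not appear in the source, and it treats "close to a billion dollars" as exactly one billion, so it should be read as a rough upper bound. The function names are illustrative.

```python
def implants_per_year(annual_deaths: int, identified_percent: int) -> int:
    """Number of at-risk individuals who would receive a device each year."""
    return annual_deaths * identified_percent // 100


def implied_revenue_per_implant(market_usd: int, implants: int) -> int:
    """Average revenue per implant implied by a total market size, in whole dollars."""
    return market_usd // implants


if __name__ == "__main__":
    # Figures quoted in the text: 400,000 deaths per year, 10% identified,
    # and a market taken here as a round one billion dollars per year.
    implants = implants_per_year(400_000, 10)
    per_implant = implied_revenue_per_implant(1_000_000_000, implants)
    print(f"{implants:,} implants per year, about ${per_implant:,} each")
```

On these assumptions the estimate corresponds to 40,000 implants per year at roughly $25,000 per implant, covering the device and whatever associated revenue the ERC included in its market figure.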

Two of the ERC/ECT’s major research breakthroughs in antiarrhythmic systems, improved electrodes and a novel understanding of biphasic waveforms that led to biphasic waveform circuitry, were transferred to the implantable defibrillator industry. Both of these developments reduced the energy needed to defibrillate. This reduction in energy resulted in five advantages over previous implantable defibrillator technology: (1) reduced tissue damage; (2) reduced device size, allowing for easier implantation; (3) reduced time to charge the device, thus decreasing the time the body is without blood flow during the arrhythmia; (4) extended battery life; and (5) a wider range of patients treatable with implantable defibrillators.

Biphasic waveforms have been adopted by the implantable defibrillator industry. Two Center member companies, Intermedics and Ventritex, working with ERC/ECT researchers, licensed the Center’s technology and took the research in biphasic waveforms to the stage of clinical testing. Two other companies, Cardiac Pacemakers (CPI) and Medtronic, developed their own biphasic waveform circuitry based in part on this ERC/ECT research. Intermedics also brought to clinical trials the improved electrodes developed by the ERC/ECT. Today, implantable defibrillator companies continue to build on the Center’s findings and modify their internal defibrillators accordingly.

Portable Defibrillators: The same improvements in sensors and electrodes that the ERC/ECT’s work brought to internal defibrillators have also been used by industry to design external, portable defibrillators (Figure 11-6) that are easier to use and less expensive than preexisting models and help people who suffer heart attacks in public places. A more efficient and effective power source for delivery of the shock permitted miniaturization of the devices.

| Figure 11-6: An early portable defibrillator based in part on the ERC/ECT’s work (Source: Defibtech) |

3D Ultrasound: The ERC/ECT achieved several breakthroughs in sensing and image processing that made three-dimensional ultrasound possible. At the time, this technology was 5–7 years ahead of acceptance by the medical community and insurance companies; now it is ubiquitous, partly as a result of early championing by the ERC/ECT through a startup, Volumetrics Medical Imaging.

Engineering Design Research Center (EDRC), Carnegie Mellon University, Class of 1986

Wearable Computers: Carnegie Mellon University (CMU) has had a long involvement with the development of wearable information technology through two ERCs, the EDRC (1986–1996) and the later Quality of Life Technologies (QoLT) ERC (2006–2015). CMU faculty associated with the EDRC built their first wearable computer in 1991 and had units in the field with head-mounted displays in 1994–1995, supporting maintenance activities of the U.S. military. By 1995, a community of wearable computing researchers was forming. In 1997, an IEEE Working Group on Wearable Information Systems was formed and organized the first International Symposium on Wearable Computers (ISWC) at MIT, with the EDRC’s Thad Starner as general chair. By 1998 the Working Group had been promoted to a Technical Committee that Daniel Siewiorek (later QoLT center director) chaired; the second ISWC was held in 1998 at CMU.

The early research in this field was codified in a 2008 research monograph entitled “Application Design for Wearable Computers,” co-authored by Siewiorek, Starner, and Asim Smailagic.[15] The results of CMU’s research were then spread through a number of students and faculty who came through the EDRC and QoLT and started companies, producing products that laid the foundation of today’s wearable technology industry. These included BodyMedia, one of the first companies to commercially offer wearable sensors; Morewood Labs, which designed electronics for the first five models of wearables from FitBit, Inc.; and ESI, a forerunner to Inmedius that developed tools for authoring Interactive Electronic Technical Manuals for F-18 fighter jet maintenance worldwide (eventually acquired by Boeing to transfer the technology to commercial aircraft). Google Glass, a voice-activated computer/monitor combination worn on eyeglass frames, has direct ties to the wearable computing research at EDRC through Thad Starner, who went to work at Google as the proselytizer for this technology (Figure 11-7).

| Figure 11-7: Google Glass eyewear, Explorer Edition (Credit: Google) |

From 1995 to 1997, four EDRC-inspired wearable computers (VuMan 3, MoCCA, Digital Ink, and Promera) won prestigious international design awards, making the EDRC design team competitive with internationally known design firms such as Frog Design and IDEO.

The EDRC inspired a summer design course at CMU that originally led to the concept of wearable computers and to building the first models. The methodology developed for rapidly designing and building CMU’s wearable computers has since been used for over 25 years in interdisciplinary engineering capstone design courses worldwide.

Computer-Integrated Surgical Systems and Technology (CISST), Johns Hopkins University, Class of 1998

Robotic Surgery: Over its ten-year lifetime as an ERC, the Center for Computer Integrated Surgical Systems and Technology, based at Johns Hopkins University, made major advances in robotic surgery. These include new technologies that steady the hands of surgeons while they perform microscopically precise eye surgeries; tiny robotic “snakes” that can travel through the esophagus to remove hard-to-reach tumors that would otherwise require very invasive surgery; and visualization and mapping tools that give surgeons greater confidence and accuracy in performing biopsies, delivering radiation seeds, and other delicate operations.

The vision that drove and still drives the Center’s research is to integrate cutting-edge technologies into systems that are able both to greatly improve physicians’ ability to plan and perform existing surgical interventions and to enable new procedures that would not otherwise be possible. This vision has led the Center to explore all aspects of computer-integrated interventional medicine. The advances pursued will eventually touch upon virtually every aspect of care delivery: more accurate procedures; more consistent, predictable results from one patient to the next; improved clinical outcomes; greater patient safety; more cost-effective methods of treatment; better methods for physician training; and creation of an information infrastructure that will facilitate experience-based methods for assessing treatment alternatives and improving procedures.

Although the Center’s central focus has been on medical robotics, its interdisciplinary research has been very broad, encompassing medical imaging, modeling of patient anatomy and surgical procedures, novel sensors and mechanisms, human-machine interactions, and systems science.

The Surgical Assistant Workstation: The practice of surgery has long involved direct hands-on operations performed by a highly skilled surgeon. But in recent years that age-old model is being expanded. Academic and industrial research and development are combining to produce robotic assistants that extend and augment the abilities of surgeons to perform operations that are beyond the limitations of human eyes and hands. We are also entering the era of “telesurgery,” in which teleoperated robotic surgical systems in combination with high-resolution video and broadband internet communications will be employed to perform surgery remotely, vital given growing populations worldwide and an impending shortage of qualified surgeons.

The CISST is a leader in research that underlies the advances being made in this new realm of surgical support. One example is the Surgical Assistant Workstation (SAW) project. SAW is a modular, open-source software framework that is designed to serve several important purposes: it can be used for rapid prototyping of new telesurgical research systems, it provides enhanced 3-D visualization of the surgical site, and it allows users to interact with the surgical system using 3-D manipulations. This versatile framework addresses a very important need to support systems-level research in medical robotics and computer-integrated interventional medicine, on the one hand, while also promoting advanced research on the individual components of such systems. Additionally, it provides a flexible and low-cost mechanism for industrial researchers to evaluate academic research advances within the context of their own commercial products.

Being modular, the SAW framework includes a library of software components that can be used to implement single-user or multi-user robot control systems that rely on complex video pipelines and an innovative, highly interactive surgical visualization environment. The software is designed to be plug-and-play, so system developers can add support for their own robotic devices and hardware platforms.

Image-guided Needle Placement: The value of image-guided needle-based therapy and biopsy for dealing with a wide variety of medical problems is proven. However, both the accuracy and the procedure time vary widely among practitioners of most systems currently in use. Typically, a physician views the images on the scanner’s console and then must mentally relate those images to the anatomy of the actual patient. A variety of virtual reality methods, such as head-mounted displays, video projections, and volumetric image overlay have been investigated, but all these require elaborate calibration, registration, and spatial tracking of all actors and components. This creates a rather complex and expensive surgical tool and requires a surgeon with exceptional skills to integrate the images with needle placement in real time.

Researchers at the CISST, in collaboration with Dr. Ken Masamune of Tokyo Denki University in Japan and the Siemens Corporation, developed an inexpensive 2D image overlay system to simplify and increase the precision of image-guided needle placements using conventional CT scanners. The device consists of a flat LCD display and a half mirror, mounted on the gantry. When the practitioner looks at the patient through the mirror, the CT image appears to be floating inside the patient with correct size and position, thereby providing the physician with two-dimensional “X-ray vision” to guide needle placement procedures.

By enhancing the physician’s ability to accurately maneuver inside the human body, needle steering could potentially improve a range of procedures from chemotherapy and radiotherapy to biopsy collection and tumor ablation, all without additional trauma to the patient. By increasing the dexterity and accuracy of minimally invasive procedures, this work is expected not only to improve outcomes of existing procedures but also to enable percutaneous procedures for many conditions that currently require open surgery. Ultimately, this advance could also significantly improve public health by lowering treatment costs, infection rates, and patient recovery times.

Center for Biofilm Engineering (CBE), Montana State University, Class of 1990

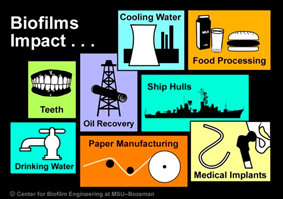

Biofilm Control by Signal Manipulation: Biofilms form on surfaces in contact with water. (See Figure 11-8.) At the time NSF funded this ERC it was known that all engineered aqueous systems suffer from the deleterious effects of biofilm formation, which causes fouling, corrosion, and filter blockage in industrial systems and much more profound problems in the human body, such as chronic infection from medical device implants and wounds. Marine systems are adversely affected by bacterial fouling and by subsequent macrophyte colonization, and the increased energy needed to propel fouled hulls through the water costs the U.S. Navy billions of dollars per year. Little was known about how to combat the formation of biofilms.

| Figure 11-8: Biofilms cause problems with many types of surfaces (Source: CBE) |

In the late 1990s, researchers at the CBE discovered that biofilms are composed of cells in matrix-enclosed micro-colonies and that these micro-colonies form “towers” interspersed between open water channels, to enable the flow of nutrients to nourish the bacteria living in the colonies. They concluded that a system of chemical signals must control the development of these complex communities. They were then the first to show, in an April 1998 Science paper,[16] that biofilm formation in Gram-negative bacteria[17] is controlled by chemical signals (acyl homoserine lactones, or AHLs) that also control quorum sensing processes by which bacteria “sense” the number of cells present in a given ecosystem. Subsequently, the CBE described many different signals of this type. Because of this discovery, the ERC and the medical research community began to realize that many chronic diseases, such as cystic fibrosis, prostatitis, and chronic wounds, are the result of biofilm formation.

Biofilm control signals have subsequently been identified in many economically important organisms, and several start-up companies have sought to find and commercialize specific signal-blocking analogues in order to control biofilm formation. The CBE was awarded a patent on biofilm control by signal manipulation, and a start-up firm, BioSurface Technologies, was spun off to capitalize on this technology.

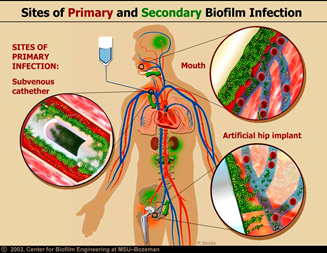

Biofilm as a Causative Agent in Chronic Wounds: Together with Dr. Randy Wolcott of the Southwest Regional Wound Care Clinic in Lubbock, Texas, the CBE showed that chronic wounds, such as diabetic lower extremity ulcers, are due to persistent biofilm infections (Figure 11-9). Preliminary work in this field led to the award of an NIH grant to the CBE to continue to develop models for the in-vitro study of chronic wounds and assess the efficacy of anti-biofilm agents.

| Figure 11-9: Biofilms attack various tissues and structures in the human body. (Source: CBE) |

Biofilm Assessment Methodologies: The CBE was instrumental in standardizing methods of measuring biofilms. This impacts how commercial products are evaluated by regulatory agencies, how health-related guidelines are enforced, and how researchers choose assays to quantify attached cell growth. The Center led activities within standard-setting organizations such as ASTM and the American Dental Association, as well as within regulatory agencies such as EPA and FDA, to propose statistically valid methods for biofilm assessment and quantification.

See Chapter 5, Section 5-B for further description of the CBE’s work in biofilms.

Biomimetic MicroElectronic Systems Center (BMES), University of Southern California, Class of 2003

Prosthetic Retina: By May 2014, artificial retina technology developed by a BMES team led by Center Director Dr. Mark Humayun had been successfully implanted in five U.S. patients with retinitis pigmentosa (RP), a condition that often leads to blindness. This was the first “bionic eye” to be approved for use in the United States through Food and Drug Administration market approval (in February 2013). By fall 2013, Second Sight Medical Products, Inc. (BMES’ commercial partner) received Medicare reimbursement approval and began transitioning the bionic eye—known as the Argus II Retinal Prosthesis System (Figure 11-10)—into mainstream application, with availability at 12 surgical centers throughout the U.S. as of the time of writing.

| Figure 11-10: The Argus II retinal prosthesis developed by BMES and Second Sight Medical Products is entering clinical practice. (Credit: Second Sight) |

The Argus II was the first FDA-approved long-term therapy for people with advanced RP in the U.S. and a breakthrough device for treating blindness. As a result of the retinal prosthesis, patients with chronic degenerative eye disease can regain some vision to detect the shapes of people and objects around them. The sight gained is enough to allow patients to navigate independently, improving their mobility as well as confidence.

The effort by Humayun and his colleagues received early and continuing support from NSF, the National Institutes of Health and the Department of Energy, with grants totaling more than $100 million. The private sector’s support nearly matched that of the federal government. The Argus I and Argus II systems won worldwide recognition, earning many prestigious awards. The BMES ERC has since developed a newer prototype system with an array of more than 15 times as many electrodes and an ultra-miniature video camera that can be implanted in the eye. The center, now “graduated” from NSF ERC Program funding, continues to work toward improving the Argus II and training surgeons in implanting it, as it is now available for patient use.

Hippocampal Prosthesis: Impairment of brain function can stem from many sources: external injury such as from head trauma, internal damage such as from stroke or epilepsy, or degenerative diseases such as Parkinson’s or Alzheimer’s. Since 2003, Dr. Theodore Berger and his colleagues at the BMES ERC have made rapid, even revolutionary strides toward developing implantable microcomputer chips that can bypass damaged parts of the brain and provide some restoration of brain function. Their main focus has been on developing a chip that can replace the function lost due to damage to the hippocampus, an area of the brain that is essential for learning and memory. The implanted chip will bypass the damaged brain region by mimicking the signal processing function of hippocampal neurons and circuits. The electronic prosthetic system is based on a multi-input multi-output (MIMO) nonlinear mathematical model that can influence the firing patterns of multiple neurons in the hippocampus. The ultimate aim is to devise neural prostheses to replace lost cognitive and memory function in injured soldiers, accident victims, and anyone suffering from cognitive and memory impairment.

The team began with successful tests in live rats in 2011 and moved to successful tests in non-human primates in 2013. They began testing in humans in 2015; and in 2016 a startup named Kernel was formed to move toward commercialization and clinical use of the hippocampus memory prosthetic. By 2018, working with researchers at Wake Forest Baptist Medical Center, Berger and his team had demonstrated the successful implementation of the prosthetic system, for the first time using clinical subjects’ own memory patterns to facilitate the brain’s ability to encode and recall memory. In the study, published in the Journal of Neural Engineering, participants’ short-term memory performance showed a 35 to 37 percent improvement over baseline measurements.[18]

Synthetic Biology Engineering Research Center (Synberc), University of California at Berkeley, Class of 2006

BIOFAB: BIOFAB, built by Synberc in 2009 with support from an ERC Innovation award, was the world’s first biotechnology design-and-build facility. In its first two years, the BIOFAB completed two major technical projects. First, the team assembled and tested all of the most popular expression control elements used in prokaryotic genetic engineering, then applied a new statistical approach for quantifying the primary activity of each part and also how much each part’s activity varies across changing contexts. This was the first example in synthetic biology of defining part quality in terms related to part reuse. It also provided a framework for establishing shareable descriptions of standard biological parts and devices. Second, the BIOFAB team invented and delivered the first example of a design architecture for standard biological parts that function reliably across changing contexts. This provided an initial realization of one of the core engineering dreams underlying synthetic biology—i.e., standard biological parts.

The Center created the BIOFAB to facilitate academic and industry synthetic biology projects, enabling researchers to mix and match parts in synthetic organisms to produce new drugs, fuels, or chemicals. The pilot projects were essential to launching BIOFAB’s operations and provided the first systematic insights into how standard biological parts behave in combination with each other.

“Off-the-Shelf” Biological Parts: Researchers at Synberc addressed the need for highly reproducible control elements in synthetic biology. The Joint BioEnergy Institute (JBEI), a multi-institution partnership that included Synberc, created new software—the Inventory of Composable Elements, or JBEI-ICE—that made a wide variety of essential information readily available to the synthetic biology design community for the first time. Synberc was instrumental in developing a robust “Web of Registries” (Figure 11-11) to make parts and tools available to the design community. JBEI-ICE is an information repository of these quality parts for designers to use to engineer biological systems. Researchers at Synberc partner institution MIT made advances in developing “biological circuit” components that can be relied on to work the same way every time. Using genes as interchangeable parts, synthetic biologists can design cellular circuits to perform new functions, enabling improved organisms such as plants that produce biofuels and bacteria that can detect pollution.

| Figure 11-11: Synberc/JBEI’s Web of Registries gives synthetic biology designers ready access to parts and tools. (Source: Synberc) |

CRISPR: The ability to control gene expression in an organism is an essential goal of synthetic biology. Gene expression is used in the synthesis of functional biochemical material, i.e., ribonucleic acid (RNA) or protein. In 2012, independent investigators Jennifer Doudna (UC-Berkeley) and Emmanuelle Charpentier (Max Planck Institute for Infection Biology) demonstrated that CRISPR-Cas9[19] (enzymes from bacteria that control microbial immunity), a naturally occurring genome editing system, could be used for programmable editing of genes in DNA, thus controlling gene expression. This seminal discovery launched a race to exploit the CRISPR-Cas9 system’s precision-cutting approach to gene editing by applying it to the genomes of higher organisms. Synberc-affiliated researchers at MIT (chiefly George Church and colleagues) constituted one of two teams that led the race, using CRISPR-Cas9 to edit genes in human stem cells. The other team, led by Feng Zhang of Harvard and MIT, simultaneously applied the technique to mouse and human cells in vitro. Both teams reported their results in the same issue of Science in January 2013.[20] These researchers were able to engineer CRISPR for the first time to do precise editing, launching a revolution in gene editing that is accelerating progress in synthetic biology.

Quality of Life Technologies ERC (QoLT), Carnegie Mellon University and University of Pittsburgh, Class of 2006

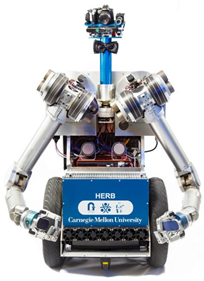

Assistive Robotics (Non-Surgical): Robots are extremely effective in environments like factory floors that are structured for them, and currently ineffective in environments like our homes that are structured for humans. The Personal Robotics Lab of the Robotics Institute at Carnegie Mellon University, supported by researchers and funding from the Quality of Life Technology Center (QoLT), is developing the fundamental building blocks of perception, navigation, manipulation, and interaction that will enable robots to perform useful tasks in human environments.[21] An “assistive robot” is one that can sense and process sensory information in order to benefit people with disabilities as well as seniors and others needing such care. The United Nations predicts that the global over-65 population will grow by 181% and will account for nearly 16% of the population by 2050.[22] Assistive eldercare robots are targeted at this aging population and are expected to rapidly expand the robot base, surpassing the numbers in industrial settings by then.

Service tasks such as complex cooking and cleaning remain major technical challenges for assistive robots. But QoLT researchers are finding solutions with projects such as the Home Exploring Robot Butler. The two-armed, mobile robot, known as “HERB,” (Figure 11-12) can recognize and manipulate a variety of everyday objects. It can recycle bottles, retrieve personal items, and even play interactive games. HERB recently learned to cook and serve microwave meals.

| Figure 11-12: HERB is the QoLT ERC’s assistive robot designed for personal home use. (Source: QoLT) |

QoLT studies have explored how people react differently to variations in a robot’s voice, conversation, and embodied movement patterns, with factors like gender, culture, pace, intonation, emotion, and subject matter all under consideration. “As intelligent and adaptive technologies become ever more integrated into our normal personal lives, we may not view them as cold, personal devices—mere ‘appliances,’” said Daniel Siewiorek, QoLT Center Director. “We expect they will become true partners and companions to the human users they serve.”[23]

As in the case of the BMES retinal prosthesis, QoLT technologies offer a good example of the direct interaction with end users (in this case, individual people) in which ERC researchers engage, as well as the personal benefits that their advances provide directly to end users. See Chapter 5, Section 5-B for further discussion of end-user interactions.

11-B(b) Communications

Institute for Systems Research (ISR) (formerly Systems Research Center, or SRC), University of Maryland, Class of 1985

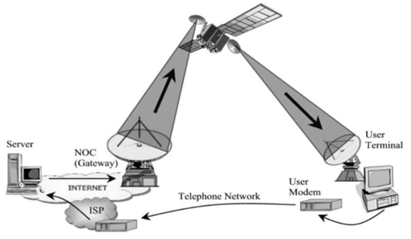

Satellite-based Internet Access: Despite the rapid deployment of traditional cable and DSL broadband across the country and around the world, billions of people live beyond the reach of high-speed, wired Internet connections. That fact created a multi-billion-dollar market for satellite-based, two-way Internet connections conceived and designed by the SRC/ISR.

The then-SRC worked closely with Hughes Network Systems in developing the hardware and software necessary to multicast broadband data across satellites to terrestrial receivers at homes and offices. Then-Director of the Center, John Baras, created the necessary algorithms. Hughes has marketed the resulting product under a variety of names, starting as DirecPC (Figure 11-13) and now incorporated into its HughesNet® offering, which as of 2018 was sold to 1.3 million subscribers in the Americas under plans that reach speeds of 25 Mbps for downloads and 3 Mbps for uploads. With customers paying rates that start at about $50 a month, by 2018 the system was bringing Hughes alone recurring revenue of about $1.4 billion per year. The SRC-developed technology also led to a worldwide industry of other companies delivering similar broadband services over satellites. In addition to utilizing the technology, Hughes hired many of the SRC/ISR students who worked on the project through the years.
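The subscriber, price, and revenue figures quoted above can be cross-checked with a back-of-the-envelope calculation. The sketch below uses only the numbers given in the text; the variable names are illustrative, and the implied average price is an inference rather than a figure reported by Hughes.

```python
# Figures quoted in the text (2018).
subscribers = 1_300_000          # HughesNet subscribers in the Americas
entry_price_per_month = 50       # dollars; plans "start at about $50 a month"
reported_annual_revenue = 1.4e9  # dollars per year

# Revenue if every subscriber paid only the entry-level price.
revenue_floor = subscribers * entry_price_per_month * 12

# Average monthly payment implied by the reported revenue (an inference).
implied_avg_monthly = reported_annual_revenue / (subscribers * 12)

print(f"Floor at entry price: ${revenue_floor:,.0f} per year")
print(f"Implied average bill: ${implied_avg_monthly:.2f} per month")
```

At the entry-level price alone the subscriber base would generate roughly $780 million per year, so the reported figure is consistent with an average bill somewhat under $90 a month, that is, with many customers on plans above the entry tier.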

In late 2012, the Senior Vice President for Engineering at Hughes described the impact of their collaboration with Dr. Baras and the Center: “About $5 billion can be directly credited to the work done at the SRC. We employ 1,500 people here in the state. Most of them are working on businesses related to this technology. This helped develop not just a new product, but a new industry.”

| Figure 11-13: The original Hughes DirecPC system was a novel hybrid of satellite and telephone network links. (Source: SRC) |

Network Simulator Testbed (NEST): NEST was a network simulator testbed tool consisting of a software environment to test network designs and scenarios. NEST was installed in over 400 companies and universities, where it was extensively applied in a wide range of networks and distributed systems studies and designs. NETMATE was a comprehensive network management platform consisting of tools to model and organize massive and complex managed information as well as tools to support visual access to and effective interpretation of managed information. For example, Citibank chose NETMATE as the centerpiece of the platform it used to manage its enterprise network worldwide.

Integrated Media Systems Center (IMSC), University of Southern California, Class of 1996

Immersive Media: The vision of the IMSC was the creation of a three-dimensional multimedia environment that immerses people at remote locations in a completely lifelike visual, auditory, and tactile experience. Before the term “virtual reality” was in common use, they termed this environment and the experience it would produce “immersipresence”—taking video conferencing to a level that would enable interaction and collaboration among people in widely separated locations that is so realistic it can largely replace the need for travel in business, education, collaborative research, and even to enjoy sports, arts, and other entertainment events.

In pursuit of this vision, the IMSC developed an array of technologies that support the creation, use, distribution, and effective communication of multi-modal information. These technologies include: high-definition video transmitted over a shared network at extremely high speed; 360º panoramic video; multichannel immersive audio that reproduces a fully realistic aural experience; real-time digital storage, playback, and transmission of multiple streams of video and audio data; and integration of all these technologies into a seamless immersipresence experience.

IMSC advances in several key technologies enabled a richer and higher-fidelity aural and visual ambience. These ground-breaking technologies led collectively to the Remote Media Immersion (RMI) system. Demonstration of the RMI integration in a concert hall with audience members located remotely proved the feasibility and viability of advanced, immersive systems on the internet. The simultaneous, synchronized live transmission of four channels of high-definition video and 10.2 channels of audio over the Internet had never before been achieved or even attempted at that time (2004).

11-B(c) Advanced Manufacturing

ERC for Intelligent Manufacturing Systems (CIMS), Purdue University, Class of 1985

Quick Turnaround Cell: The Quick Turnaround Cell (QTC), developed at the Purdue University ERC for Intelligent Manufacturing Systems, was one of the first engineering system testbeds produced in an ERC. It was a flexible manufacturing cell that integrated design, cutting, and quality inspection. It was used for rapid production of small-batch and one-of-a-kind machined parts. It led the ERC team to understand that the geometries in use at that time were not sufficient for real production situations, leading to the development of more advanced feature-based approaches to the design of complex parts. This realization identified the essential role of the concept of “features” in integrating computer-based manufacturing programs. The QTC was advanced in sophistication over time as it moved out of the ERC through government and industry partners to the point where it was, by 1992, in use at the Army Missile Command and Loral Vought Systems for design and machining of prototype parts. By the mid-1990s, all new computer-aided design programs were using this concept.

Engineering Design Research Center (EDRC), Carnegie Mellon University, Class of 1986

Traveling Salesman Algorithm: A joint project between an EDRC Ph.D. candidate who was a Visiting Scientist at the DuPont Company and a DuPont researcher who became an Industrial Resident at the EDRC resulted in the development of a parallel branch-and-bound algorithm[24] for solving the asymmetric traveling salesman problem, which made it possible to solve problems larger than existing algorithms could. The corresponding mathematical model was used to optimize the production sequence of different product lots in multiproduct batch-processing plants. A major accomplishment of this work was that problems with up to 7,000 lots could be solved to optimality in less than 20 minutes of computation time. The EDRC student who worked on the project was hired by DuPont to implement the model. The software was transferred to DuPont and applied to the operation of 35 DuPont businesses encompassing 8 plants worldwide. The annual cost savings for DuPont, documented in November 1989, were on the order of $2.5M, of which almost 10 percent could be attributed directly to the traveling salesman optimization procedure developed at the ERC. A number of applications for these algorithms specific to the chemical industry were also identified and adopted by chemical processors.
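To make the idea concrete, the following is a minimal sketch of branch and bound applied to a small asymmetric traveling salesman problem. It is not the EDRC/DuPont algorithm: it runs sequentially rather than in parallel, it uses a deliberately simple lower bound (the cheapest outgoing cost of every node still to be left), and the function name `solve_atsp` and the 4-lot changeover matrix are hypothetical. In the lot-sequencing reading, `cost[i][j]` would be the changeover cost of running lot `j` immediately after lot `i`.

```python
import math

def solve_atsp(cost):
    """Exact asymmetric TSP by depth-first branch and bound.

    cost[i][j] may differ from cost[j][i]. Requires at least two nodes.
    Returns (best_cost, best_tour); the tour starts and ends at node 0.
    """
    n = len(cost)
    # Cheapest way to leave each node; used for an optimistic lower bound.
    min_out = [min(cost[i][j] for j in range(n) if j != i) for i in range(n)]
    best = {"cost": math.inf, "tour": None}

    def search(path, visited, so_far):
        current = path[-1]
        if len(path) == n:
            total = so_far + cost[current][0]  # close the cycle
            if total < best["cost"]:
                best["cost"], best["tour"] = total, path + [0]
            return
        unvisited = [j for j in range(n) if j not in visited]
        # Bound: the current node and every unvisited node must still be
        # left once, each at no less than its cheapest outgoing cost.
        bound = so_far + min_out[current] + sum(min_out[j] for j in unvisited)
        if bound >= best["cost"]:
            return  # prune: this branch cannot beat the incumbent
        # Branch: try the cheapest successor first to find good tours early.
        for nxt in sorted(unvisited, key=lambda j: cost[current][j]):
            search(path + [nxt], visited | {nxt}, so_far + cost[current][nxt])

    search([0], {0}, 0)
    return best["cost"], best["tour"]

# Hypothetical changeover costs between four product lots.
changeover = [
    [0, 2, 9, 10],
    [1, 0, 6, 4],
    [15, 7, 0, 8],
    [6, 3, 12, 0],
]
best_cost, best_tour = solve_atsp(changeover)
print(best_cost, best_tour)
```

The quality of the lower bound determines how much of the search tree can be pruned; production-scale solvers use much tighter bounds (for example, assignment-problem relaxations) than the one assumed here.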

Reconfigurable Manufacturing Systems (RMS) ERC, University of Michigan, Class of 1996

Reconfigurable Inspection Machine on GMC Manufacturing Line: Today’s automotive engine technology is extremely sophisticated and requires manufacturers to maintain exacting quality specifications to ensure optimum engine performance and reliability. Therefore, manufacturers are increasingly employing in-line inspection stations to inspect critical part features on every part. In-line inspection minimizes the chances of defective parts reaching the customer and facilitates process control and improvement. The best applications of in-line inspection are those where the quality is highly unpredictable.

A good example is the need for in-line surface porosity inspection systems. Surface porosity is caused by tiny voids or pits at the surface of machined castings such as engine blocks and engine heads. It begins in the casting process when gases are trapped in the metal as the casting solidifies, creating voids in the material. If the void is exposed during machining, it leaves a small pit (a pore) at the surface. Although they are typically smaller than 1 mm, surface pores can cause significant leaks of coolant, oil, or combustion gases between mated surfaces and cause severe damage to engines and transmissions. If such a pore is not detected, the consumer will have a noisy engine with a shorter lifetime. A major challenge to engine manufacturers lies in the difficulty of objectively measuring the sizes and locations of irregularly shaped surface pores at production line rates.

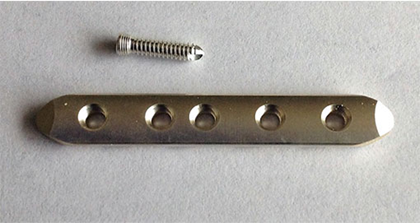

As an outgrowth of its Reconfigurable Inspection Machine project, the ERC/RMS developed a prototype machine-vision system for in-line surface porosity inspection of engine blocks and engine heads. The system utilizes a specially designed vision system to acquire very high-resolution (300 megapixel) images of the part surface. The high-resolution images are then analyzed rapidly to detect, locate, and measure pores without slowing the production line.

In July 2006, General Motors Corp., an ERC member company, installed an industrial system for in-line surface porosity inspection of engine blocks in Flint, Michigan (Figure 11-14). The system is based on the technology developed at the ERC/RMS. The inspection system is integrated into the production line, and a conveyor moves engine blocks through the inspection station. Every part is measured within 15-20 seconds, allowing the human inspector to do a more thorough job much faster.

| Figure 11-14: At the In-Line Porosity Inspection Station in GMC’s Flint, Michigan, plant, an operator inspects the images of an engine block in which pores were detected and makes an informed decision as to whether the engine block is indeed defective. |

Performance Analysis for Manufacturing Systems: A key element of reconfigurable manufacturing systems technology (Figure 11-15) is giving a system-level planner the tools to evaluate the desired volume and mix, comparing productivity, part quality, convertibility, and scalability options. The planner then can perform automatic system-balancing based on algorithms and statistics. One useful software package to perform these tasks is Performance Analysis for Manufacturing Systems (PAMS).

Developed with the support of the ERC/RMS, the PAMS software package analyzes and optimizes manufacturing system performance. It has analysis modules for system throughput and work-in-process calculation and optimization. It can identify machine bottlenecks and calculate the optimal allocation of buffers for pull or push manufacturing systems.

| Figure 11-15: The RMS ERC’s Reconfigurable Machining System concept gained rapid acceptance in industry. |

In 2007 officials at the Chrysler Indiana Transmission Plant were planning to add more pallets to reduce traffic blockage and streamline their entire Materials Handling System. ERC/RMS analysis using PAMS software instead recommended pulling out 15-18 pallets from the current closed-loop transmission machining line. The plant implemented the recommendation, and Chrysler reported an observed throughput increase of about 5%. Considering the mass production scale of the assembly line, this single improvement on the transmission case machining line has saved Chrysler hundreds of thousands of dollars annually.

Similar applications in 2007 at the Ford Cleveland Engine Assembly line, and in 2008 at four production lines at the Chrysler Kokomo Transmission Plant, realized even greater improvements in production. GM, meanwhile, imported the PAMS source code from the ERC and incorporated it into its own production throughput software.

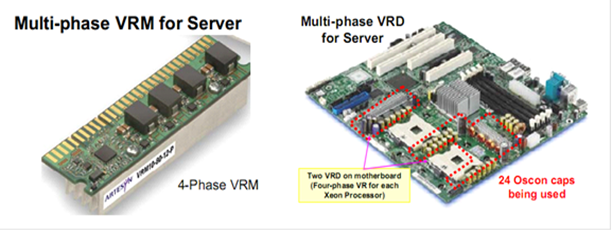

Center for Power Electronics Systems (CPES), Virginia Tech, Class of 1998

Multiphase Voltage Regulator Module: Intel microprocessors operate at very low voltage and high current, and with ever-increasing speed, requiring a fast and dynamic response to switch the microprocessor from sleep to power mode and vice versa. This operating mode is necessary to conserve energy, as well as to extend the operation time for any battery-operated equipment. The challenge for the voltage regulator module (VRM) is to provide tightly regulated output voltage with fast dynamic response in order to transfer energy as quickly as possible to the microprocessor. The first generation of VRM, developed for the Pentium II processor in the late 1990s, was too slow to respond to the power demand of subsequent generations of microprocessors, which included the Pentium III and Pentium 4. As a result, a large number of capacitors had to be placed adjacent to the microprocessor in order to provide the required fast power transfer. This solution became costly and bulky.

Responding to Intel’s microprocessor challenges, CPES established a mini-consortium of companies with a keen interest in the development of VRMs for future generations of high-speed microprocessors. CPES at Virginia Tech first proposed the multiphase buck converter as a VRM for Intel processors, and the approach went on to become standard practice across the industry.

| Figure 11-16: The multi-phase voltage regulator module developed at CPES became standard in Intel microprocessors. (Source: CPES) |

Subsequently, every computer containing Intel microprocessors used the multiphase VRM approach developed at CPES (Figure 11-16). This particular technology developed into a multi-billion-dollar industry and gave U.S. industry the leadership role in both technology and market position. It also enabled new job creation and job retention in the U.S. Without this technology infusion from CPES, U.S. industry would have lost its market position in providing power management solutions to the new generation of microprocessors to overseas low-cost providers.

Novel Multi-Phase Coupled-inductor and Current Sensing: Today, every microprocessor is powered by a multi-phase voltage regulator (VR). Each phase employs a sizeable energy storage inductor to perform the necessary power conversion. Generally, for such an application, a large inductance is preferred for steady-state operation, so that the current ripple can be reduced. On the other hand, a smaller inductance is preferred for fast transients, such as from “sleep mode” to “wake-up mode” and vice versa. To satisfy these conflicting requirements, in principle, a nonlinear inductor would be preferred, with an inductance that is large in the steady state and small during transients. However, there is no simple way of realizing such a nonlinear inductor. In 1999, CPES proposed a coupled-inductor concept to address the issue. When the inductors are coupled in a multi-phase buck converter, by virtue of the switching network, they behave like nonlinear inductors. The equivalent inductance is large in the steady state and small during a transient. This enables a multi-phase VR to deliver power to microprocessors operating at GHz clock frequencies. This coupled-inductor concept was adopted in industry practice, where it enabled much improved performance, resulting in reduced footprint and cost. Coupled inductors are still widely used in VR applications today.
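The "large in steady state, small in a transient" behavior can be illustrated with a short calculation for the simplest case: two coupled phases. The sketch below is not taken from CPES material. It follows from writing the winding voltages as v1 = L·di1/dt + M·di2/dt and v2 = M·di1/dt + L·di2/dt and solving for di1/dt, assuming an ideal two-phase buck converter with duty cycle D below 0.5, equal self-inductance L per phase, and inverse coupling represented by a negative mutual inductance M. The function name and the numerical values are hypothetical.

```python
def equivalent_inductances(L, M, D):
    """Equivalent per-phase inductances of an ideal two-phase coupled buck.

    L: self-inductance of each winding
    M: mutual inductance (negative for inverse coupling), |M| < L
    D: duty cycle, assumed 0 < D < 0.5 so the two on-times never overlap

    Returns (l_steady, l_transient):
      l_steady    applies while this phase's switch is on and the other's
                  is off, which sets the steady-state phase-current ripple
      l_transient applies when both windings see the same voltage, as when
                  both phases respond together to a load step
    """
    if not 0.0 < D < 0.5:
        raise ValueError("this sketch assumes 0 < D < 0.5")
    if abs(M) >= L:
        raise ValueError("coupling must satisfy |M| < L")
    d_off = 1.0 - D
    # With v2 = -(D / d_off) * v1:  di1/dt = v1 * (L + (D/d_off) * M) / (L^2 - M^2)
    l_steady = (L * L - M * M) / (L + (D / d_off) * M)
    # With v2 = v1:  di1/dt = v1 / (L + M)
    l_transient = L + M
    return l_steady, l_transient


# Hypothetical normalized values: L = 1, inverse coupling M = -0.5, D = 0.25.
l_steady, l_transient = equivalent_inductances(1.0, -0.5, 0.25)
print(l_steady, l_transient)
```

For these example values the steady-state equivalent inductance works out to about 0.9 L while the transient equivalent inductance is 0.5 L, so the same magnetic structure limits ripple in steady state yet lets the current change quickly during a load step.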

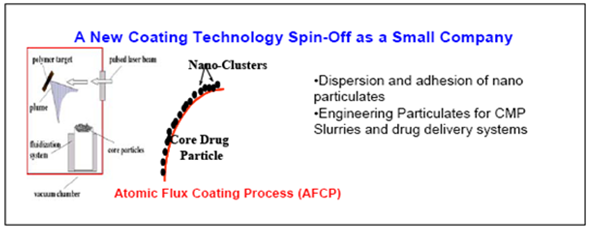

Particle Engineering Research Center (PERC), University of Florida, Class of 1995

Synthesis of Nanofunctionalized Particulates by the Atomic Flux Coating Process (AFCP): The aim of this ERC was to develop innovative particulate-based systems for next-generation processes and devices. To that end, PERC researchers synthesized a then-new class of particulate materials with specific functional characteristics. The particulates are synthesized by the attachment of nanometer-sized inorganic functional clusters onto the surface of core particles. The researchers demonstrated the synthesis of artificially structured, nano-functionalized particulate materials with unique optical, cathodoluminescent, superconducting, and electrical properties. It was shown that attaching atomic-to-nanosized inorganic, multi-elemental clusters onto the surface of the core particles generated materials and products with significantly enhanced properties.

Such materials can be used for a wide range of existing and emerging products involving advanced ceramics, metals, and composites which span multiple industries such as aerospace, automobile manufacturing, machining, vacuum electronics, batteries, data storage, catalysis, and superconductors. The Center applied this technology to develop coated drugs for slow release. It was expected that hundreds of millions of dollars would be saved in this pharmaceutical application alone.

In 1999 a company called Nanotherapeutics was formed (originally as NanoCoat) to license PERC’s atomic flux coating process technology (Figure 11-17). The CEO of the company was James Talton, a former graduate student in the ERC. A particular focus was on coating drug molecules with thin, porous films to allow the timed release of the drug. The company developed several other proprietary drug delivery technologies for pharmaceuticals, including, for example, an injectable bone filler. In 2009, Nanotherapeutics was awarded a $30.9 million, 5-year contract from the National Institute of Allergy and Infectious Diseases, part of the National Institutes of Health, to develop an inhaled version of the injectable antiviral drug, cidofovir. The drug would provide non-invasive, post-exposure prophylaxis and treatment of the Category A bioterrorism agent smallpox. In 2007 the company had been awarded a $20 million contract to develop an inhaled version of gentamicin for the post-exposure prophylaxis and treatment of tularemia and plague, both also Category A bioterrorism agents.

| Figure 11-17: The PERC ERC spun off several successful startups based on Center-developed technologies. (Source: PERC) |

In late 2016, the company opened a new $138M plant near Gainesville, Florida. The plant was built to fulfill a Department of Defense contract potentially worth up to $359 million. In October 2017, Nanotherapeutics changed its name to Ology Bioservices.

Center for Advanced Engineering Fibers and Films (CAEFF), Clemson University, Class of 1998

Modifying Substrates: The CAEFF provided an integrated research and education environment for the systems-oriented study of fibers and films, promoting the transformation from trial-and-error development to computer-based design of fibers and films. CAEFF researchers developed novel technology in the area of surface modification of polymeric and inorganic substrates. This technology uses a “primer” layer of poly(glycidyl methacrylate) (PGMA) to provide reactive groups on a surface that can subsequently be functionalized with other molecules, including biomolecules. Clemson University granted Invenca an exclusive license restricted to the field of liquid chromatography and granted Aldrich Chemical a non-exclusive license restricted to the field of soft lithography. A third company, Specialty & Custom Fibers, also licensed the surface-treatment technology for anti-fouling fibers for biological species.

11-B(d) Energy, Sustainability, Infrastructure

Center for Advanced Technology for Large Structural Systems (ATLSS), Lehigh University, Class of 1986

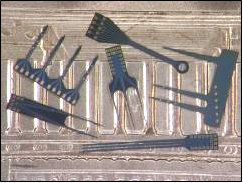

ATLSS Integrated Building Systems: The AIBS program was developed to coordinate ongoing research projects in automated construction and connections systems in order to design, fabricate, erect, and evaluate cost-effective building systems, with a focus on providing a computer-integrated approach to these activities. A family of structural systems, called ATLSS connections, was developed with enhanced fabrication and erection characteristics. These ATLSS connections can be erected using automated construction techniques. This feature minimizes human assistance during construction, resulting in quicker, less expensive erection procedures in which workers are less exposed to the risk of injury or death. The technology for automated construction is heavily dependent on the use of Stewart platform cranes, which are controlled by a system of six cables to allow precise movement in six degrees of freedom. A scale-model Stewart platform crane was constructed in the ATLSS laboratory to test the feasibility and limitations of automated construction with these connections.

Bridge Inspection Technologies: A series of bridge-related technologies developed at ATLSS were implemented and commercialized. They were designed to help bridge inspectors and owners inspect, assess, and maintain bridges more safely and cost-effectively. These are:

Corrosion Monitor: Provides a measurement of atmospheric corrosivity by means of the electric current generated during the corrosion process. It quantifies the corrosion on a steel element due to atmospheric conditions such as dust, humidity, condensation, and salt spray. The device has been placed at six bridge sites in five states.

Fatigue Monitoring System: Conceived to simplify the process of collecting and processing the stress history data required to estimate the remaining fatigue life of steel bridges. The key differentiating technology is a Fatigue Data Processing Chip which accepts conditioned data, performs “rainflow counting” calculations, generates a stress histogram, computes equivalent stress ranges, and stores the processed data. The ATLSS laboratory was used as a full-scale testbed for validating the chip, including using telemetry to retrieve data. In addition, a field demonstration was arranged with one of the ATLSS industry partners.

Hypermedia Bridge Fatigue Investigator (HBFI): Knowledge-based computer system that was designed to increase the effectiveness of bridge inspection of fatigue-critical components. HBFI tells inspectors where in a structure to look for evidence of fatigue cracking, leading to early detection and hence more economical repairs when needed. The hypermedia portion of the software guides an inspector through data entry on an existing bridge, and provides supplemental information on the concepts of fatigue and inspection for fatigue.

Smart Paint: Developed to augment visual inspection of steel bridges for the existence of cracks. The system consists of dye-containing microcapsules mixed with paint. When a crack forms in the underlying substrate, the paint cracks as well, rupturing the microcapsules. The dye is released and quickly appears along the crack edges. The Smart Paint was tested in the laboratory, both on small specimens and on full-scale ship components under long-term cyclic loading. A field bridge site was developed to further test the weatherability and performance of the paint.
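The processing chain described for the Fatigue Monitoring System (rainflow counting, a stress histogram, and an equivalent stress range) can be sketched in software as follows. This is an illustrative Python sketch, not the logic of the ATLSS Fatigue Data Processing Chip. It assumes the widely used three-point rainflow procedure, a histogram keyed by exact stress range rather than by bins, and a root-mean-cube equivalent stress range (exponent m = 3, a common choice for welded steel details). The function names and the sample stress history are hypothetical.

```python
from collections import defaultdict


def find_reversals(samples):
    """Reduce a stress history to its turning points (peaks and valleys)."""
    pts = []
    for x in samples:
        if pts and x == pts[-1]:
            continue  # ignore repeated values
        if len(pts) >= 2 and (pts[-1] - pts[-2]) * (x - pts[-1]) > 0:
            pts[-1] = x  # still moving in the same direction: extend the run
        else:
            pts.append(x)
    return pts


def rainflow_cycles(samples):
    """Yield (stress_range, count) pairs, where count is 1.0 or 0.5."""
    stack = []
    for point in find_reversals(samples):
        stack.append(point)
        while len(stack) >= 3:
            x = abs(stack[-1] - stack[-2])  # most recent range
            y = abs(stack[-2] - stack[-3])  # previous range
            if x < y:
                break
            if len(stack) == 3:
                # Range y includes the starting point: count a half cycle.
                yield y, 0.5
                stack.pop(0)
            else:
                # Range y is a closed loop: count a full cycle, drop its points.
                yield y, 1.0
                del stack[-3:-1]
    # Whatever is left over is counted as half cycles.
    for a, b in zip(stack, stack[1:]):
        yield abs(b - a), 0.5


def stress_histogram(samples):
    """Accumulate cycle counts per stress range."""
    hist = defaultdict(float)
    for stress_range, count in rainflow_cycles(samples):
        hist[stress_range] += count
    return dict(hist)


def equivalent_stress_range(hist, m=3.0):
    """Constant-amplitude range causing the same damage per cycle (assumed m)."""
    total = sum(hist.values())
    return (sum(n * s ** m for s, n in hist.items()) / total) ** (1.0 / m)


# Hypothetical stress history in arbitrary units.
history = [-2, 1, -3, 5, -1, 3, -4, 4, -2]
hist = stress_histogram(history)
s_eq = equivalent_stress_range(hist)
print(hist)  # {3: 0.5, 4: 1.5, 8: 1.0, 9: 0.5, 6: 0.5}
print(s_eq)
```

The histogram and the equivalent stress range compress a long strain-gauge record into a handful of numbers, which is the kind of data reduction the passage describes as necessary for estimating remaining fatigue life.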

Advanced Combustion Engineering Research Center (ACERC), Brigham Young University and the University of Utah, Class of 1986

3-D Entrained-flow Coal Combustion Model: With 85 percent of the world’s energy generated by burning fossil fuels, ACERC’s early research was aimed at making coal-burning plants cleaner and more efficient. Specifically, the center fostered the use of computational fluid dynamics (CFD) as a tool in this field, including improvements to the comprehensive 3-D entrained-flow coal combustion model (PCGC-3).