2-A Initial Formulation of Program Goals and Key Features

With the NAE’s Guidelines[1] coming to NSF to catalyze the Engineering Research Centers Program, the mood lifted for those at NSF who were committed to joining disciplines to advance technology and thereby strengthen U.S. competitiveness. After the demise of RANN in 1977, for nearly a decade the staff trying to develop a new and stable platform in NSF to achieve that goal repeatedly found the platform undermined by the basic research politics at NSF. Many began to feel a Sisyphus-like sense of futility and frustration. Now it looked like that “curse” had been lifted and there was a different future on the way, thanks to the efforts of Dr. Carl Hall and others in NSF, the White House, Congress, and the National Academy of Engineering.

Upon receipt of the NAE guidelines, the first step for NSF was to form a task force to develop the new ERC Program’s goals and features. Dr. Hall, then Acting Assistant Director (AD) for ENG, pulled together a team of NSF staff experienced with interdisciplinary research and start-up of new programs. Dr. William Butcher, an NSF staff member with materials research expertise, headed the task force. Other members were:

- Ms. Lynn Preston, Acting Head of the Office of Interdisciplinary Research, who had served in the RANN program and had recently led an initiative through which NSF addressed the emerging field of biotechnology;

- Mr. Norman Caplan, who had led NSF programs that founded the field of biomedical engineering;

- Dr. William Spurgeon, a mechanical engineer who had served in the RANN Program;

- Dr. Marshall Lih, Division Director for Chemical and Process Engineering, the division that housed the emerging program in enzyme engineering, first in the MPE Directorate and later in ASRA;

- Mr. Paul Herer, Office of the ENG AD, who was serving as budget officer and strategic advisor to the AD and had served the same role in ASRA and RANN; and

- Ms. Janice Apruzese, who served in the Office of the Deputy AD for Engineering.[2]

The planning task force debated which features to include in the program announcement, as the NAE had given its guidance in broad, general terms.

The planning task force understood the importance of the nascent program to engineering and the opportunity it presented to set bold new directions for NSF, academe, and industry. The task force looked upon the NAE guidance as a green light for a new NSF mission to build a much-needed new culture in academic engineering. This guidance will be condensed here as a basis for understanding the efforts of the task force to develop the first ERC Program Announcement and the goals and “key features” of the new program.

2-A(a) The NAE Guidelines Condensed: A Road Map

The NAE report pointed to long-standing problems in academe that were barriers to effective utilization of new engineering knowledge by industry. These included a lack of integration of knowledge and techniques to address large systems areas—like computer-integrated manufacturing and large construction efforts—because of the small-scale nature of academic engineering research and its separation from engineering practice. Progress would depend on the development of a “new breed” of engineers capable of working in cross-disciplinary teams to integrate basic knowledge with the requirements of technology development and process improvement. Engineering graduates had become too analytical, with too little opportunity for “hands-on” experimental research and with little knowledge of engineering practice.

While NSF had proposed academic engineering centers in the past to address these problems, it took the combined power of input from industry, academe, and finally the White House and the NAE to move the idea forward. The NAE recommended that Engineering Research Centers be established and that they be bold and ambitious in response to these needs and staffed with first-class faculty and students. In addition, the centers needed to be of sufficient scale to make a noticeable impact on the content of engineering education and the culture of engineering in academe.[3]

The Guidelines laid out the basic challenges to be addressed by the centers:

• Develop fundamental knowledge in fields that will strengthen U.S. competitiveness;

• Increase the proportion of engineering faculty committed to cross-disciplinary teams;

• Focus on engineering systems and increase competence in new fields needed by industry;

• Increase the number of engineering graduates who can contribute innovatively to U.S. productivity;

• Include practicing industrial engineers as partners to stimulate technology transfer; and

• Firmly link research and education.

2-A(b) Planning the First ERC Program Announcement

Initially, the NSF task force reviewed the lessons learned from the RANN Program and ASRA as they related to large-program creation and operation, forming lasting interdisciplinary partnerships and partnerships between faculty and industry engineers, and post-award oversight. The lessons:

- There are enthusiastic faculty members who will work across disciplines to advance technology, and the best way to reach them is through a special program announcement rather than by waiting for unsolicited proposals to come in.

- Peer review has to include not only academics but also industrial and government engineers and scientists.

- Individual review bolstered by a panel review provides the best input.

- Faculty and industrial researchers can effectively plan research that will impact not only technology but also the education of future industrial engineers and the best way to achieve that is through long-term partnerships with industry.

- Strong partnerships can be built between faculty and industry engineers if industry pays part of the costs for the research. Long-term support is necessary to build these lasting partnerships.

- For industry/university partnerships to work in an academic center, industry has to have some say about projects funded; the I/UCRC program had pioneered the use of an Industrial Advisory Board to enable that input along with required industrial financial support.

- Integrating research knowledge into early-stage technology (proof-of-concept, a term coined later in the ERC Program) is a viable goal for academic research, but it lies at the edge of the comfort zone for university/industry partnerships, where the interface with industry is crucial for useful outcomes.

- Post-award oversight will be needed to ensure that the mission of the program is met and centers develop programs that effectively meet their goals and the goals of the Program.

- Site visits with external peer reviewers and NSF staff will strengthen the post-award oversight.

In the initial planning stage, the task force decided to issue a program announcement to reach a broader audience in a short time. Since the intent was to join scientists and engineers from different disciplines in each center, the task force members debated whether to use the word “multidisciplinary” or “interdisciplinary.” They settled on a new term, “cross-disciplinary,” which implied the involvement of faculty from different disciplines who would join their knowledge to address the research challenges posed by the center’s chosen technology. “Multidisciplinary” was rejected because in practice multidisciplinary teams were often a collection of faculty from different disciplines, each writing separately about their particular role in the research without sufficient integration. “Interdisciplinary” implied a stronger integration of disciplinary knowledge that would result in the development of new disciplines at the interface, which was not the intent of the program. Over time, new disciplines did emerge from ERCs, but that was a second-order benefit.

The planners didn’t want to fall into the trap of classifying research in ERCs as either basic or applied. After much debate among the task force members, Carl Hall chose the word “fundamental,” as opposed to “basic,” to differentiate engineering research, which developed knowledge as a “foundation for use in the solution of important problems” rather than as an exploration of natural phenomena for the sake of understanding only. The centers would include fundamental research exploring the phenomena underpinning the chosen technology and more applications-driven research to explore the barriers to the realization of the technology. It was essentially directed research as opposed to curiosity-driven research.

They envisioned structuring a new type of research program, which amounted to a paradigm shift in the culture of engineering research and education in academe. Prior NSF funding had encouraged explorations of the fundamentals of engineering, almost in the reductionist mode of science, as discussed in Chapter 1. However, engineers in general harness knowledge to build a body of tools and techniques to solve problems and advance technology, often in the process developing new engineering knowledge or stimulating basic research scientists to explore new knowledge.

Since the demise of RANN, NSF’s funding had left a gulf between the knowledge-driven culture of engineering science and the problem- and technology-driven culture of engineering in practice. Funding was needed for the full spectrum of engineering, from fundamental research through to the advancement of technology, with the added complexity of the integration of that technology into engineering systems. All were necessary for the development of a workforce capable of innovation in industry. Engineering systems was a new term for all the members of the task force, and its full impact on academic research would take a few years to realize.

The planners also debated how far along the technology development spectrum the research should go within academe. They decided that the ERCs should have sufficient support for instrumentation to allow experimental applications, so that early-stage devices, components, and system technologies could be designed and built, although the term “proof-of-concept” was not coined until later in the Program’s history.

They deliberately chose not to target any technology areas narrowly, instead describing possible subject areas in broad, general terms:

“Each Center should focus on a particular subject area of national importance. Examples of subject areas include systems for data and communications, computer-integrated manufacturing, computer graphics design, biotechnology processing, materials processing, transportation, and construction. These examples are given only to illustrate concerns which a Center may address, proposing institutions are invited to identify the subject area and focus of their proposed Center as well as the approach they consider would best address the area….”[4]

These complex challenges were the motivation for the task force as it designed the first ERC Program Announcement in response to the NAE guidelines. The guidance to proposers in the first Program Announcement included:[5]

- Develop fundamental knowledge in engineering fields that will enhance the international competitiveness of U.S. industry and prepare engineers to contribute through better engineering practice;

- Firmly link engineering education and research, key elements in improving U.S. industrial productivity;

- Provide cross-disciplinary research opportunities for faculty and students to provide fundamental knowledge that can contribute to the solution of important national problems;

- Prepare engineering graduates with the diversity and quality of education needed by U.S. industry;

- Have a strong commitment from industry (money, equipment, and people) to assure industrial involvement in the research and educational aspects of the Centers.

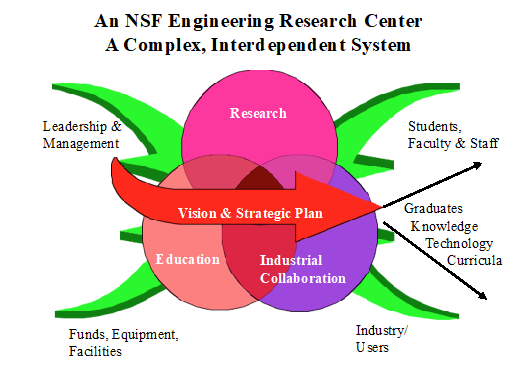

Based on this guidance the task force decided that the proposing ERC teams had to include the following “key features,” designed to build centers that integrated research, education, and industrial collaboration—thereby making ERCs themselves complex systems as depicted in Figure 2-1 (designed later in the program’s history). Those features were as follows:

- Involve a team effort of individuals from various backgrounds, possessing different engineering or scientific skills, where such effort can contribute more to the focus and goals of the Center than would occur with individually funded research projects. Hence, the nature of the Center’s research would be cross-disciplinary.

- Emphasize the systems aspects of engineering and help educate and train students in synthesizing, integrating, and managing engineering systems.

- Provide experimental capabilities not available to individual investigators because of large instrumental acquisition costs, requirements for a large number of skilled technicians, or other maintenance and operating requirements.

Figure 2-1: Each ERC is a complex, interdependent system.

- State and local agencies or government laboratories involved in engineering practice may also be participants. The development of new methods for the timely and successful transfer of knowledge to industrial users is expected.

- Include a significant component involving both graduate and undergraduate[6] students in the research activities of the Center. Student participation in the activities of the Center would expose future engineers to many aspects of engineering rather than one specific field and better prepare them for the systems nature of engineering practice. Codification of new knowledge generated at the Center and continuing education of practicing engineers may be another component.

- Strive to include 10 percent of the home institution’s graduates at the master’s and doctoral levels.[7]

By April 1984, the first ERC Program Announcement was released and a budget of $10 million was set aside for the new centers. This was an augmentation of the NSF budget requested by NSF and provided by Congress, contrary to the opinions voiced by many traditional engineering Program Directors who felt that the funds would have flowed to their programs if the ERCs had not been invented. (See section 2-D(b), Disciplinary Wars, for further discussion.) The approval process for the announcement was short and swift, as is evident from the release date. It is important to note that NSF was relatively young and not very hierarchical, so approval came about through discussion between Dr. Hall and Dr. Edward Knapp, the Director of the Foundation at that time, in contrast to the lengthy internal review at all levels of NSF now required for release of a program announcement.

It was understood that the features of the program would change the culture of engineering in academe and industry; therefore, the desire was to support faculty who were eager to break out of the mold for engineering support set by engineering science programs at NSF and build a new culture at the cusp of research and technology, at the cusp of research and education, and at the cusp of academe and industry.

2-B Staffing Up

Given the challenges posed for the Program to build this new culture in academe, Carl Hall knew that he would need a team that was ready, able, and fully committed to ensuring the culture was built, to effectively leading and managing the ERC Program, and in the process to stimulating a new dimension in the culture for engineering at NSF. Lewis G. (Pete) Mayfield was selected to lead the ERC Program and serve as the new Head of the Office of Interdisciplinary Research (OIR) because of his experience in two realms. Early in his career at NSF, going back to the 1960s, he was able to nurture a new field at the interface of biology and engineering, which he called “enzyme engineering.” He led the development of this field into the 1980s. In addition, when he served as the Director of the Division of Advanced Technology in the RANN program and later in ASRA, he was responsible for the oversight of programs like the Earthquake Hazards Mitigation Program, where projects were supported to advance technology and public policy in collaboration with industry and state and local governments. Later, in the early ’80s, he ran an office that supported emerging fields of engineering. Before coming to NSF in the early 1960s, Mayfield had taught at Montana State University and also worked in industry as a chemical engineer, so he was familiar with all three sectors involved in ERCs.

Lynn Preston continued to serve as the Deputy Head of OIR and Mayfield’s deputy in leading the ERC Program. She was selected for the ERC role because she had a proven capacity to develop new interdisciplinary programs. Preston had developed a program initiative and program announcement in the early 1980s that joined staff across NSF to stimulate scientists and engineers to carry out research in the emerging field of biotechnology. At the request of Congress during ASRA, she developed the Appropriate Technology Program and a new program to join a broad spectrum of scientists and engineers to carry out research to aid the disabled. While her degrees were in economics, her genes were in engineering: she understood the engineering mindset and design/build processes from her father, a physicist who was an engineer in practice. The background in economics gave her a broader understanding of the role of industry and technology in the economy and a broader systems view than the more technologically focused engineers of the time tended to have. Before coming to NSF, Preston had worked on early macroeconomic models at the Institute for Defense Analyses.

Both were known to be good managers; both were creative and thrived in the development and operation of programs at the cusp of disciplines and broke new ground in research partnerships. Most importantly, they were experienced in operating outside the NSF “business as usual” culture, which was essential for starting up and sustaining the ERC Program in a bureaucracy that was not very friendly toward research relevant to industrial competitiveness or to research support that would sustain more than one individual investigator from one discipline.

Sherry Scott, an excellent administrative manager, joined them to manage the logistics of the program. Syl McNinch, from the NSF Budget Office, provided advice since he had more experience working with the Office of Management and Budget (OMB) than Preston and Mayfield did. To expand their capacity in terms of numbers and disciplines, Mayfield built a team of engineering program directors, assigned to the other divisions of the directorate, who would provide reviewer suggestions and manage the panels that would be a part of the upcoming peer review process.

When the Directorate for Engineering was restructured in 1985, the OIR became the Office of Cross-Disciplinary Research (OCDR), which housed the ERC Program, the Industry/University Cooperative Research Centers Program and its PDs, Alex Schwarzkopf and Bob Colton, and the Small Business Innovation Research Program and its lead PD, Roland Tibbetts, and others. (See Section 1-B(e), 1985: New NSF Leadership Focuses on Research…)

2-C Inventing New Processes at NSF

2-C(a) Initial Connection with the Community

The first ERC Program Announcement was released in April 1984, and proposals were due October 1, 1984. These were full proposals, as the concept of a pre-proposal was yet to come. This meant that a lot of work had to be carried out in academe in a short time, and it was urgent that the community understand the importance of the Program and the magnitude of the cultural changes in academe and industry that would ensue from merely proposing, not to mention winning an award. Given this urgency, the next task was to hold a Town Hall-style meeting to brief the engineering research community on the goals and features of the Program and the review process. Over 200 representatives of universities and colleges, professional societies, and other interested parties attended a June 15, 1984 kick-off meeting for the Program convened by NSF and held in the General Services Administration auditorium in Washington, D.C.[8]

2-C(b) Inventing a New Review Process

On October 1, 1984, 142 proposals arrived at NSF from 106 different institutions, involving over 3,000 participants and requesting more than $2 billion over five years. Seventy-five percent were from engineering disciplines and the remainder were from scientific disciplines and the humanities. The magnitude of the total requests, at $400 million per year, represented an increase of 30 percent over then-current expenditures for U.S. engineering research, indicating a large reserve of underutilized engineering capacity in universities at that time.[9]

Prior to the arrival of the proposals, the ERC team developed the review process to deal with complex, cross-disciplinary proposals. The proposal review criteria were informed by the review criteria described in the Program Announcement, i.e.:[10]

- Nature and importance of the subject area which will be the focus of the Center.

- Proposed plans to assure that research of high quality and intrinsic merit will be supported by the Center.

- Integration of the Center into the education of engineers, both at the undergraduate and graduate level(s). Continuing education of practicing engineers may be another important factor.

- Participation of engineers and scientists from industrial organizations involved in engineering practice and their expected contribution to identifying and reaching the goals of the Center.

- Cross-disciplinary nature of the Center, the extent to which individuals from varying backgrounds and expertise are involved and how they are involved, the rationale for supporting a Center rather than providing individual investigator support through other sources.

- The need for and advantage of sharing expensive or specialized equipment proposed for the Center and how such equipment will contribute to the study of the problem.

- Management plan for operation of the Center to assure the program will be of highest quality, including the qualifications of the principal investigator, mechanisms for selecting research projects, allocating funds and equipment, recruiting staff, and dissemination and utilization of research results.

These were expanded as follows to serve as the review criteria for the proposals:

Research and Research Team

- Is the research innovative and high quality?

- Will it lead to technological advances?

- Does it provide an integrated systems view?

- Is the research team appropriately cross-disciplinary?

- Is the quality of the faculty sufficient to achieve the goals?

International Competitiveness

- Is the focus directed toward competitiveness or a national problem underlying competitiveness?

- Will the planned advances serve as a basis for new/improved technology?

Education

- Does the center provide working relations between faculty and students and practicing engineers and scientists?

- Are a significant number of graduate and undergraduate students involved in cross-disciplinary research?

- Are they exposed to a systems view of engineering?

- Are there plans for new or improved course material generated from the center’s work?

- Are there effective plans for continuing education for practicing engineers?

Industrial Involvement/Technology Transfer

- Will industrial engineers and scientists be actively involved in the planning, research, and educational activities of the ERC?

- Is there a strong commitment or potential for a commitment for support from industry?

- Are there new and timely methods for successful transfer of knowledge and developments to industry?

Management

- Will the management be active in organizing human and physical resources to achieve an effective ERC?

University Commitment

- Is there evidence of support and commitment to the ERC by the university?

- Is there evidence that the tenure/reward practices will not deter successful cross-disciplinary collaboration?

In subsequent years, as the ERC portfolio grew, NSF staff applied the following secondary criteria late in the review process, before making a final award decision:

- Geographic balance and distribution,

- Whether a university has already been granted an ERC, and

- Whether the research area complements the already existing centers.[11]

In 1984, the review process was as follows. The proposals were divided into like groups that would form a set of proposals for a panel to review, such as manufacturing, biotechnology, microelectronics, etc. Initially, 88 faculty and others from academe, industry, and government who were not involved in the proposals agreed to review the proposals. The proposals were boxed up and sent to the reviewers assigned to each panel, along with the program announcement and the review criteria, as there was no electronic means to send them at that time. The reviewers wrote and submitted individual reviews of the proposals, a minimum of three per proposal. These panelists then came to NSF in the fall of 1984 to serve on the panels. At this stage, the reviewers on a panel could see the content of the reviews from the other panelists and others who might not have participated in the panel, but not reviews of proposals assigned to other panels. The panels rated the 142 proposals as: highly recommended (40), recommended (30) and not recommended (72).

The 40 highly recommended proposals were reviewed by the ERC Panel, a diverse group of 14 scientists and engineers from industry (10) and academe (4), which was co-chaired by Professor Eric A. Walker, former President of Pennsylvania State University, and C. Lester Hogan, former President of Fairchild Camera. Among them were presidents and chancellors of universities, professors, and presidents and vice presidents of industrial firms. The panel met during the weekend of December 1, 1984. Pete Mayfield and Nam P. Suh, the newly appointed Assistant Director of the Directorate for Engineering, briefed the Panel on the Program goals, review criteria, and the review protocol. The panelists looked at all the proposals in all categories and the prior reviews to be sure excellent proposals had not been left behind.

Satisfied with the categorization of the proposals, they concentrated on the 40 in the highly recommended category. According to Eric Walker, “the panel was most impressed by the number of good ideas for research that appeared among the engineering research proposals. It is apparent that there is a tremendous capacity in our engineering schools for doing forefront research and that the full capacity is not being utilized. The Engineering Research Centers will provide expanded research and educational opportunities to take advantage of that potential, and so strengthen the nation’s engineering knowledge and talent bases.”[12] However, the Panel found that in a number of cases industrial participation was weak and many proposals were too broad, resembling a collection of individual projects.

Fourteen proposals were selected through that process to receive a site visit. Site visits were carried out on campus in the winter of 1985. The site visit teams included one or two members of the ERC Panel and two or three other reviewers. Two members of the NSF ERC Program team accompanied each site visit team to brief it on the Program and the review criteria. The proposing team briefed each site visit team, which then met separately with university administrators and with industrial supporters and toured the relevant facilities. Each site visit team wrote a report on campus responding to the review criteria, with an executive summary of findings.

The final meeting of the ERC Panel to recommend new awards to NSF took place in February 1985. Nam Suh added a new dimension to the process: he felt that the PIs of the 14 proposed centers should have an opportunity to brief the Panel and respond to panel questions. Erich Bloch, the Director of NSF, and Nam Suh again spoke to the Panel about the goals of the Program.

The PI sessions took place on the first day. One of the finalists who eventually won an award, Professor John Baras, then only 35 years old, remembers that day as one of the “most stressful of my life, worse than my Ph.D. defense, as not only did my future depend on it but so did the future of the School of Engineering at the University of Maryland. I was terrified, but then Lynn Preston came to lead me into the panel room and introduce me to the panel, and when she said, ‘Don’t worry, you’ll do just fine,’ I knew I would be OK.” On the second day, the Panel deliberated the strengths and weaknesses of the candidate proposals, and after much heated debate, highly recommended six for award and three more as “recommended.” The remaining 29 were not recommended.

After the ERC Panel provided NSF with its recommendations, it was time to determine NSF’s recommendation. Mayfield conferred with Erich Bloch and Nam Suh and decided that, since funds were limited, only the six highly recommended proposals would be recommended for start-up awards, beginning on May 1, 1985, as follows:

- Columbia University – $2.2M for 9 months, to $3.24M for Year 2

- University of Delaware – $0.75M for 9 months, to $1.2M for Year 2

- University of Maryland – $1.5M for 9 months, to $2.426M for Year 2

- Massachusetts Institute of Technology – $2.2M for 9 months, to $3.048M for Year 2

- Purdue University – $1.6M for 9 months, to $2.038M for Year 2

- University of California at Santa Barbara – $1.17M for 9 months, to $1.75M for Year 2.[13]

The differences in funding levels reflected an assessment of each center’s readiness to manage a large award and consideration of funds already in place for new ERCs that were built on prior centers (Delaware and Columbia). This first-year support totaled $9.42M of the ERC Program’s $10M FY85 budget.

The National Science Board authorized the Director of NSF to proceed with funding these six awards on March 22, 1985, and Bloch announced them on April 3rd.[14] The structure of the review process from start to finish was a new model for NSF. It proved to be a highly valuable model for the review of complex proposals with large budgets and long time frames and has remained in place for the ERC Program to date. It has also influenced the review processes of other programs over time, such as the Science and Technology Centers Program, which was established in 1987 at the request of Erich Bloch.

Erich Bloch saw the ERCs as a path-setting new program that would change NSF and academe. “…when I looked at NSF…it was just the paymaster for PIs (principal investigators). I thought this was dead wrong. The idea was that NSF should be concerned about competitiveness and look for new avenues to tie together with industry and government and universities. It was a new idea to NSF.”[15]

2-C(c) Introducing the Winners

At the request of NSF, the National Academies’ studies and reports arm, the National Research Council (NRC), formed a Cross-Disciplinary Engineering Research Committee (CDERC). In April 1985, shortly after the first “class” of six ERCs was announced, the Committee hosted a two-day symposium at the main Academy building’s auditorium. The symposium was entitled “The New Engineering Research Centers: Purposes, Goals, and Expectations.”[16] This symposium served, in effect, to formally introduce the ERCs to the wider world. Kicked off with a keynote address by George Keyworth, the President’s Science Adviser, who had helped to launch the program less than 18 months earlier, it featured speakers who described the genesis of the program, the selection process, each of the six new centers, and future challenges that the ERCs would be expected to address. The symposium report summarized the various messages in the following way:

Clearly the real focus of the ERC concept, from the standpoint of both research and education, is the improvement of our national industrial competitiveness. If the ERCs can provide a strong link between academe and industry, research and development, education and practice, they can vastly improve the effectiveness with which we apply our rich national resources of knowledge and talent. If they can bridge the traditional engineering disciplines they can be the catalyst for a needed reshaping of research approaches and values, in universities as well as in industrial manufacturing practices. As George Keyworth observed in his keynote address, “This removal of barriers lies at the heart of the new Engineering Research Centers.” It will be necessary that everyone—those in academe, in industry, and in government—understand why those barriers must come down, and that all work with a will to help the ERCs succeed.[17]

The directors of the winning proposals made presentations about their centers’ goals and planned features. Brief descriptions are presented in the linked file, “The ERC Class of 1985.”

2-C(d) Inventing New Funding Mechanisms

Once the award decision was made, it was time to determine the award instrument and future funding levels during the first five-year award for each center. Mayfield and Preston understood that a new type of funding instrument would be required: a traditional NSF grant could not require the PI to deliver on the proposed research, while a contract would give NSF too much control. Preston conferred with a grants officer in the Division of Grants and Agreements who had developed cooperative agreements for the ocean science programs to oversee the deployment of research vessels. After she explained to him that the ERCs had to carry out the research, education, and industrial collaboration programs they had proposed and NSF had accepted for funding, they decided that the appropriate instrument would be a cooperative agreement, which is basically a set of two-way obligations.

An agreement was structured for the ERC Program using that model. The ERC Program goals were taken from the Program Announcement, and the individual ERC's proposed features were taken from its proposal; both were included in the agreement so that the ERC PI would be obligated to attempt to fulfill them. The PI was required to form an industrial advisory committee, hold annual meetings with industry, attend annual meetings of ERC PIs and staff with NSF, and disseminate findings in research and education. In time, the agreement was expanded to require each ERC to keep a database in order to provide NSF with quantitative indicators of its activities and programs in meeting ERC Program goals. The agreement also stated that continued NSF support would depend, among other things, on an annual review of ERC progress. The recipient university or universities were obligated to provide the proposed cost sharing, and any state or local government was obligated to provide its promised leveraged support. The ERC also reported to the Dean of Engineering, which positioned it in the academic hierarchy of a school of engineering and signified and cemented its cross-disciplinary structure, since housing it in a disciplinary department would have been counterproductive. NSF would carry out post-award oversight, but NSF staff would provide guidance without attempting to usurp the role of the PI.

If future budgets were to grow, that growth would depend on performance—a new mode of using financial incentives to stimulate performance.

2-C(e) Inventing a New System of Post-Award Oversight

Based on their RANN experience, Preston and Mayfield realized that the ERC awards would need post-award oversight if the ERC Program were to fulfill its mission for the Nation. Merely providing large sums of money to academe and expecting it to stimulate the required changes in culture would be naïve. Don Kash remarked in 1986: "An ERC in its host university is like a transplanted organ. It requires powerful drugs and constant monitoring to keep it from being rejected by the host body."[18]

The cooperative agreement was the first step, but that had to be followed up with reporting, performance criteria, and post-award oversight by NSF ERC Program Directors and outside peer review teams. Preston was put in charge of developing that system.

i. Life Cycle of an ERC

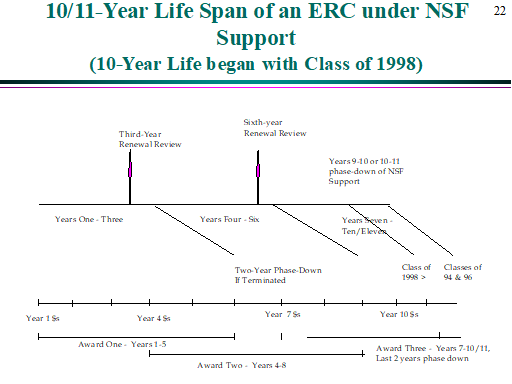

ERCs were initially funded through a five-year cooperative agreement. By 1986, at the request of OMB, the agreement had a termination point at the end of 11 years. Centers were free to recompete for a second award. The life-cycle with its annual and renewal reviews in years three and six is depicted in Figure 2-2. The initial award was for five years with a renewal review in the third year. If that review was successful, five more years were added to the agreement beginning in the fourth year. If the review is not successful, the funding was phased down in years four and five. For successfully renewed centers, there is an additional renewal review in the sixth year with the same funding pattern until the end of the award in the 11th year.

ii. Performance Criteria

Initial performance criteria were used to determine how effectively the center was organizing to address its goals and the goals of the ERC Program. They were derived from the key features in the program announcement, the cooperative agreement, and the review criteria used to review proposals. The process was also informed by a study on evaluation of ERCs that was done for NSF in 1986 by the NRC.[19] The criteria used in the third-year renewal reviews of the Class of 1985, carried out by March 1988, were distilled to the following by Preston for the GAO:

- Has the ERC met its goals and the ERC Program goals?

- Is the ERC cohesive, with shared goals?

- Is it an ERC or business as usual?

- What has the ERC achieved that could not be achieved through individual grants?

These general questions were supported with performance criteria relevant to each key feature. These criteria were used as a frame of reference and Preston is quoted in 1986 explaining to the General Accounting Office (GAO):

It is not intended that an evaluation/review report merely be answers to these questions. Rather, the report should reflect the judgment of the team regarding the progress and prospects of the ERC using these criteria as a frame of reference. They are intended to bring the reviewer up to speed on the goals and objectives of the ERC program. The application of the criteria to each center may differ depending upon whether or not the ERC was built on an existing center or is started de novo (anew), the degree of difficulty inherent in the focus of the center, the degree of difficulty inherent in the blending of the disciplines involved in the ERC, the degree of sophistication of the targeted industrial community, etc.[20]

iii. Reporting and Annual Reviews

Post-award reporting was necessary to provide information to carry out the performance reviews and the data needed to demonstrate progress, impact, and support to NSF, OMB, and Congress. Initial guidance for post-award reporting by the Class of 1985 was simple. The PIs were to prepare a report that described their goals for each key feature, progress, and plans. The features to be addressed were: (1) overall center goal; (2) research program; (3) education program (undergraduate, graduate, and workforce); (4) industrial collaboration; (5) leadership and management; (6) support (university, state or local government, and industry); and (7) publications and dissemination. They were asked to provide data: numbers of faculty and students (by degree program), numbers of industrial members and lists of member firms, and financial management data on expenditures and income.

Figure 2-2: Life Cycle of an Engineering Research Center

The first two annual reviews were carried out by teams from NSF and the external community. This required a new role for NSF Program Directors.

2-C(f) Inventing a New Role for NSF Program Directors

Traditional NSF Program Directors (PDs) functioned basically in a pre-award mode. Some, who had been in the Foundation for some time, served to stimulate new fields of research through support of proposals and interaction with professors at professional society meetings. Some, like the PDs in the Earthquake Hazard Mitigation Program, worked more actively with the academic and government communities in the field to promote an agenda for research and needed public policy. By and large, though, most NSF PDs waited for proposals to come in, sent them out for review, funded a small percentage, and then waited for ensuing publications.

That model was not going to suffice for the ERC Program. Drawing on their RANN experience, Mayfield and Preston developed a new role for ERC PDs. ERC PDs had to have interdisciplinary experience in prior roles at NSF or in industry or academe. Some had been engaged in promoting new types of programs within NSF during the RANN and ASRA period. Those who served as ERC PDs, either "part-time" or full-time, were engaged in both the pre-award and post-award processes.

Upon receipt of proposals and their assignment to panels, an ERC PD developed and managed the pre-award panels. Mayfield and Preston managed the ERC Panel that was charged with recommending full proposals for site visits and, ultimately, for award. The ERC PDs were responsible for pre-award site visits, for rounding up reviewers, and for managing the review and report production on site. Once an award was made, a full-time ERC PD was assigned to post-award oversight. This involved working with the PI(s) to oversee their management of the research, education, and industrial collaboration programs.

The first tool for post-award oversight by these PDs was on-campus visits at start-up, if funding was available, followed by annual site visits by teams of engineers and scientists from academe, industry, and government. Since the ERC Program was new, it was a creative process at start-up with much to learn on both sides in real time, as there was no time to determine “best practices.” The PDs formed Technical Advisory Committees (TAC) to assist with the oversight process. These teams involved both NSF and external reviewers. As time went by, it was determined that NSF staff should lead the visits and assist the lead PD but not participate in peer review. Later, the term “site visit team” came into use and continues in use to date.

Early in the process it was evident that some of the ERCs were taking off successfully and already meeting the challenges posed by the Program, as evidenced by:

- Teams of faculty quickly forming across laboratory boundaries to address issues larger and more complex than they could solve alone;

- Embracing the idea of building technology testbeds to explore the realization of their systems visions in an academic context;

- Engaging undergraduates in the ERC’s research across faculty lines;

- Building strong programs of industrial collaboration supported by Industrial Advisory Boards and industrial funds; and

- Universities ready and willing to provide the needed space, cost sharing, and other support to start up and strengthen their ERCs and their management.

Others were having problems with the following issues:

- Initial start-up modes of operation showed that it was difficult to get faculty to put aside their own interests and join a team with shared goals.

- Some centers resembled collections of single-investigator projects with little or no integration of findings.

- Education was separate from research, and few undergraduates were involved.

- The ERC team was not able to get a significant number of firms to provide cash to join the ERC as members, or the faculty would not listen to the firms' advice and guidance.

- The university was not forthcoming in providing promised space or faculty hires.

As part of an assessment of NSF’s ability to mount and sustain the ERC Program that was conducted by the General Accounting Office (GAO), the GAO team interviewed ERC PDs.

One ERC program director told us, for example, that the review team, or Technical Advisory Committee (TAC) is at the center for 2 days, talking with faculty, university administrators, students, and industry officials, as well as visiting lab facilities. The TAC team chairman writes the report[21] advisory to the ERC program director, and on the basis of this report, the program director may make specific recommendations to the center director for improvements. According to one program director, the cooperative agreement is the “teeth” connected to the yearly site visit. For example, NSF cut the budget of one center intentionally because, as a result of the yearly site visit, it found that the center was not following certain key ERC program goals. The message was that the budget would be restored if the center followed up on the site visit recommendation. According to a program official, the center followed up on the recommendations and the budget was restored.[22]

2-C(g) An ERC Database

The TAC reports included data, which became the basis for the ERC Program's first database. The database became operational at NSF, in spreadsheet form, by 1987. There were problems, including the comparability of data reported under the definitions provided by NSF. The GAO reported the following problems with the data:

- Not all ERCs provided the data initially.

- Some of the data were inconsistent because of different interpretations of requirements.

- ERC PDs found the data hard to interpret, as there were no benchmarks for good performance at that early stage.[23]

- Many of the ERC PDs were not used to interpreting data that did not arise from physical properties.

This was probably the first database of its kind for NSF and there was much to learn. The issues were addressed annually and in later years in collaboration with the ERC Administrative Directors, as discussed in Chapter 4.

2-D Energizing the Community Inside and Outside NSF

2-D(a) Program Leaders as Evangelizers

Mayfield and Preston, as well as the ERC PDs, took on a new role for NSF staff. They had to become program explainers and program advocates because of the culture in NSF and academe. Mayfield had to constantly watch the funding level for the Program to be sure that funds proposed to Congress and approved were actually allocated to the Program. Both Mayfield and Preston had to give talks outside of NSF explaining the goals of the Program and how it functioned. They also had to recruit new PDs to the Program, as the number of centers was growing rapidly. Fred Betz, Tapan Mukherjee, and Steven Traugutt joined in 1986. Betz was a physicist who also had a business degree and had managed the Industry/University Cooperative Research Projects program in ASRA. Mukherjee, a chemist/chemical engineer in practice, joined NSF during ASRA days and managed programs in materials processing and biological approaches to controlling environmental pollutants. Traugutt, a chemical engineer, came from industry.

2-D(b) “Disciplinary Wars” in NSF and the Universities

Building up support for the ERC Program and the paradigm shifts that it represented was generally straightforward at the highest levels of national engineering leadership—i.e., Congress, the White House Office of Science and Technology Policy, the NSF Director and AD for Engineering, top managers in industry, and in many cases deans of engineering. But its sharp departures from NSF and academic “business as usual” met with immediate resistance among the broad base of academic engineers and government officials who were most closely engaged with business as usual.

The focus on addressing industry needs through a cross-disciplinary pursuit of engineering systems flew in the face of the established disciplines and the academic departments around which they were structured. The organization of a center on campus with its own large budget from multiple sources—in some ways resembling small companies—threatened the authority and status of the departments and department chairs, especially as they drew the attention of many of the best discipline-based young faculty and also required significant physical space in which to operate. Team research did not mesh with the criteria of individual achievement on which promotion and tenure decisions were based. The emphasis on working closely with industry—even to the extent of industry helping to plan the centers’ research strategy—challenged the elite “ivory tower” status that science-based academic engineers had struggled to attain.

Perhaps most of all, there was a strong belief that the substantial funding for the ERCs would come directly out of the budgets ordinarily reserved for individual investigators—this concern extended even to the science directorates of NSF, who were alarmed that both the new Director of NSF, Erich Bloch, and the new AD for Engineering were engineers who had served on the NAE panel that produced the ERC’s “Guidelines.” Leaders of the existing discipline-based NSF divisions and their counterparts in the academic departments, along with many established individual investigators, shared this concern equally.

Carl Hall, Acting AD for Engineering just before the arrival of Nam Suh, recalled that as the program was getting off the ground, “I’d go out to these seminars that engineering colleges would invite me to attend, to talk about the ERCs. Usually faculty members would stand up and object. I’m not talking about the dean. I’m not talking about the department chair. I’m talking about individual investigators who had been successful in getting individual support. They saw this as a threat.”[24]

There were efforts to mollify the Program's detractors on this score. One prominent example was the report of the Engineering Research Board, published in 1987.[25] This report, prepared during the first two years of operation of the ERCs, referenced them prominently throughout, even though the 11 ERCs that then existed were still new and had hardly begun to fulfill their promise. The report reflected the uncertainty and even anxiety present throughout many colleges of engineering about the impact of this well-funded and much-trumpeted program on traditional single-investigator research support. While recommending support for this new approach to academic engineering research, the Board was careful to walk a fine line between the traditional and the new. For example:

To the extent that the large, multidisciplinary engineering research centers, now being supported by the National Science Foundation (NSF), indicate a trend toward stable funding, they are a timely and welcome development. Two caveats, however, must be recognized. First, the funding made available to the new research centers raises questions about the adequacy of funding support for interdisciplinary research at colleges and universities that do not have such centers. Second, research administrators must strike a balance between research by individuals and the collaborative research of the new engineering research centers.[26]

The Board went on to express its "…concern about the continuity of funding for new, innovative research investigations in the traditional disciplines, whose scale is modest by ERC standards and that generally involve the efforts of individual investigators and just one or only a few graduate students. …This type of research is a key to the health of the overall engineering research environment, and it is not likely to be sustained by 'trickle-down' support filtering through the large, heavily funded activities."[27]

Mayfield admitted, "They're concerned, there's no question about it"; but he pointed out that NSF's overall budget was maintaining the "same strong pace of growth for basic research" that it had for the previous three years.[28]

The NAE’s 1989 Assessment of the ERC Program addressed the issue head-on, under the heading of “ERCs and Individual-Investigator Research”:[29]

The concern has been expressed that the ERC Program is being funded at the expense of individual investigator research. This argument, though an important issue, seems to assume that funds not spent on the ERC Program would have necessarily been allocated to individual investigator programs—that no part of the growth in the NSF Engineering Directorate can be attributed to congressional and executive branch commitment to the concept of NSF-funded Engineering Research Centers.

Somewhat contradictorily, the report then presented a brief analysis of funding for the Engineering Directorate, the ERC Program, and single-investigator grants for engineering in the period FY84 to FY89, which showed that “the Program was initially funded at the expense of the rest of the directorate, as critics charge”; and that in the ensuing years, while the Directorate’s budget grew substantially, the ERC Program’s budget grew even faster in percentage terms, while at the same time individual-investigator funding remained roughly flat in current dollars.

The committee concluded this analysis by conceding that:

By all appearances, funding for individual-investigator research from the NSF Engineering Directorate has suffered from the growth of the ERC Program. The Committee regrets this decline in constant dollars in support for basic engineering science performed by individual investigators. But given the constraints on NSF’s budget and the particular merits of the ERC Program, the Committee sees no other way in which the Engineering Directorate could have proceeded.

The budget imbalance moderated after the first few years, but the impression remained. In a 2004 interview with former NSF Director Bloch, the following exchange took place:

Interviewer: I was impressed with the fact that the ERCs were created with no detriment to the rest of the NSF budget for engineering research. Was this a self-conscious strategy to develop support for the ERCs?

Bloch: No, we never made that argument. It would not have been believed. University engineering faculty argued that if there were no centers then that money would have gone to the existing programs and funding PI’s in the usual way. That wasn’t true, but that was the spin.[30]

Apparently the spin went both ways. ENG AD Nam Suh, quoted in McNinch (1985), was more blunt about the new reality:

We can no longer afford the luxury of concentrating all of our resources in individual research project grants in the established fields of engineering. The task before us demands the best of university, industry, and government engineering capabilities. It requires cross-disciplinary efforts and a team spirit and approach never before achieved.[31]

The clear message was, “A change was due, and now it has come. Like it or not, a healthier balance is being achieved.” NSF would never again be the same.

2-D(c) Building a Community of ERCs

Given the challenges of developing successful ERCs on campuses where there was cultural and administrative "resistance" to change, the ERC Program managers decided it would be important for the leadership teams of the ERCs to meet together to share their successes and failures and to teach each other and NSF how to do this difficult job successfully. The first meeting was held around a table at NSF after the NAE Symposium presenting the new ERCs to the community in April 1985. At this meeting, all agreed that it would be helpful to meet once a year in a conference-type setting to be able to exchange ideas about how to develop and manage centers.

That began the community-building effort, which was unique to the ERC Program at the time. The annual meetings were organized by an outside contractor[32] in order to enable a "third party" to work with the ERC community to develop the agenda. Topics for discussion were collected from various sources by the contractor, who discussed them with Preston. Based on these discussions, the contractor canvassed the ERCs and other sources for speakers who would present their experiences and lessons learned in a workshop format. Over time, these meetings developed a strong sense of community and an ERC esprit that Preston came to call "the ERC family."

The following are examples of topics of discussion from the earliest annual meetings and some outcomes:

- What is an engineering system and how can it be addressed effectively and realistically in an academic setting? As a result, Mayfield asked the NRC's Cross-Disciplinary Engineering Research Committee to define and expand upon the concept of engineering systems research, which it did through a series of meetings held in 1986.[33]

- John Fisher, the Director of the ERC for Advanced Technology for Large Structural Systems (ATLSS) at Lehigh University, from the Class of 1986, remarked that he found it impossible to personally manage both the research and the industrial collaboration. To solve the problem, he created a new position, Industrial Liaison Officer (ILO), and appointed William Michalerya to that position.[34] Bill had research experience in both academe and industry. After Bill presented what he was doing and how well it supported the ERC, Preston decided to require all ERCs to have ILOs. (See Michalerya's profile in Chapter 6, Section 6-F.)

- When strategic planning was required, the annual meeting in 1987 focused on how to develop academic strategic planning, how to define an ERC “deliverable,” and how to visually depict that for faculty, industry, and NSF through milestone charts or other means.

- The idea of actually building a testbed to explore the systems realities of the research was still alien to some of the ERC researchers and much time was devoted to what that meant in an academic context—not industrial prototypes, but more a proof-of-concept testbed to explore the barriers to developing the technology.

2-D(d) Continuing Input from the NAE/NRC

Beyond the NAE Guidelines that provided the original basic blueprint for the ERC program, the NAE, through the Academies’ studies and reports arm, the National Research Council, continued to provide policy and management guidance regarding the ERCs to NSF program leadership. The main source of this continuing guidance was through the Cross-Disciplinary Engineering Research Committee, funded by NSF, whose members represented a cross-section of academic and industrial leaders.

A year after the kickoff symposium described in section 2-C(c), entitled "The Engineering Research Centers: Factors Affecting Their Thrusts,"[35] the CDERC held a second symposium in late 1986, this one entitled "The Engineering Research Centers: Leaders in Change."[36] By that time 11 ERCs were in place, a fast start, as the Program's founders and funders had envisioned. The keynote address was given by John T. McTague, Vice-President for Research of the Ford Motor Company and formerly acting science adviser to the President (following George Keyworth). Nam Suh, AD for Engineering at NSF, talked about the lessons that NSF had learned in the first year of operation of the Program. The directors of the first six ERCs brought the audience up to date on progress in all aspects of their centers. Several speakers then examined industrial and state government involvement in centers like ERCs from various points of view. Finally, the directors of the five centers in the 1986 "class" of ERCs described their plans and programs.

Many of the speakers emphasized the rapid pace of technological change in the context of mounting international competition—which was the main driver for formation of the ERC Program. John McTague urged that we “rededicate ourselves to change” in order to regain and hold leadership in technology. He noted that “one of the perils of leadership is that the strategy for staying ahead requires far more vision and determination than do the tactics for catching up.” The symposium summary went on to say:

The ERCs are designed to fit such a strategy. NSF Director Erich Bloch refers to them explicitly as “our answer to Japanese industrial targeting.” With their emphasis on industrial interaction and research on problems of industrial relevance, with their crossdisciplinary focus and aggressive programs of technology transfer, the Centers are bringing a cultural change—what James Solberg terms “a quiet revolution”—to university campuses. They face considerable obstacles to their success, structural as well as attitudinal; the process of overcoming those obstacles is the process of changing the campus culture.[37]

The CDERC augmented these symposia with a series of short, targeted studies and reports “providing assistance and advice” to NSF’s Office of Cross-Disciplinary Research on various aspects of ERC operation and management, as requested by NSF. These included:

- 1985: Information technology exchange among ERCs and industry (how best to establish a continuous exchange of information, technology, and ideas with other ERCs, industry, and the engineering community broadly)[38]

- 1986: The systems aspects of cross-disciplinary engineering research (elucidating what the “systems approach” means in research and education, and how to apply it)[39]

- 1986: ERC evaluation criteria and process (supporting the third-year review of the first six ERCs)[40]

- 1988: The ERC selection process (suggestions for fine-tuning and improving the existing process)[41]

- 1988: ERCs and their evaluation (focusing on NSF’s proposed go/no-go decision based on an ERC’s third-year review).[42]

2-E Did the Class of 1985 Succeed in Starting a “Revolution” on their Campuses?

2-E(a) Third-year Review: Successes and Failures

The third-year renewal review for the Class of 1985 was a marker for whether or not NSF, academe, and industry could set a strong foundation for the future impacts envisioned by the goals and features of the ERC Program. The ERC Program provided the centers with reporting guidelines, site visit guidelines, and third-year renewal performance criteria in March of 1987. In July and August 1987, evaluation teams were finalized and NSF provided the teams with materials to support their assessments, including the ERC program announcement, decision documents justifying the award to the ERC,[43] the evaluation/review guidelines, and the relevant center's renewal proposal. All the reviewers from all the site visit teams met in plenary session at NSF in September 1987 with ERC program staff, who briefed them on the process and gave them prior TAC reviews, annual reports, and other information relevant to the center each person was to review. In this way the program managers hoped to calibrate the use of the review criteria and recommendation options across the teams.

The visits were held on campus in October of that year. For each visit, the center’s PD managed the team and one of the appointed external peer reviewers chaired the site visit report writing process.[44]

Using the renewal criteria outlined in section 2-C(b), the review teams were judging whether or not the ERC had been effectively structured to achieve its initial goals, how well it integrated the disciplines in research and education, and how well it collaborated with industry. They also factored in whether or not the center was built on a prior center funded by NSF or another source or had to start de novo, the degree of difficulty inherent in blending of the disciplines involved, and the degree of sophistication/experience of the target industrial community in working with faculty. Overall, program management wanted to determine the following:

- Has the ERC met its goals and the ERC program’s goals?

- Is the ERC cohesive, with shared goals?

- Is it an ERC or “business as usual”?

- What has the ERC achieved that could not be achieved through individual grants?[45]

The recommended outcomes were:

- Unconditional 5-year renewal if the center was strong in all criteria;

- Conditional 5-year renewal if the center was strong in some of the criteria and weak in others; or

- No renewal and phase-out if the center had failed in the three most important areas (research, industrial collaboration, and education).[46]

During the process, the site visit team could confer with the Center Director for clarification. After the site visit team made its recommendation, the ERC PD could also confer with the Center Director for further clarification regarding major issues. Then the PD made his/her recommendation to Mayfield and Preston. They discussed the outcome with the AD for Engineering, Nam Suh, and determined which centers to recommend for renewal. Nam Suh presented his recommendations to the Director, Erich Bloch. The recommendations were as follows:

- Columbia University, Engineering Center for Telecommunications Research – Renew

- University of Maryland, Systems Research Center – Renew

- Massachusetts Institute of Technology, Biotechnology Process Engineering Center – Renew

- Purdue University, Center for Intelligent Manufacturing Systems – Renew

- University of Delaware, Center for Composite Materials – Nonrenewal

- University of California at Santa Barbara, Center for Robotic Systems in Microelectronics – Nonrenewal

The two centers not renewed were those at the University of Delaware and the University of California at Santa Barbara. The major reasons for those outcomes were failure to devote sufficient attention to systems goals; failure, in one case, to attract enough domestic firms; and failure of the university to follow through on promised support.

At the conclusion of the renewal process, Mayfield retired from NSF, and Marshall M. Lih assumed his role as manager of the ERC Program and OCDR.

2-E(b) Assessing the ERC Program After Three Years

In September 1988, NSF Director Bloch asked the National Academy of Engineering to conduct a separate assessment of the ERC Program. The NAE formed a blue-ribbon committee for that purpose and submitted its report in August 1989.[47] Although the committee was concerned about an erosion in funding for the Program, its assessment was a glowing one:

In this Committee’s view, the Compton Committee[48] was exactly right. The program objectives that it described, and the program structure that it proposed, are as valid today as they were five years ago. If anything has changed, it is that the program is even more necessary today—more central to the purpose of engineering schools and the long-term viability of industries—than it originally appeared to be. Our message is simple: Stay on course. Fulfill the program objectives laid out five years ago. Ensure that the program reaches at least the size specified in the original report. Preserve the distinctiveness of the program, and resist attempts to dilute its mission by subsuming it within other programs. Refrain from weighing the program down with additional tasks and backing away from its purpose under the weight of controversy about the role of centers in academia. If the federal government is to assist industry in its fight to remain competitive, this is precisely the kind of program it should support.

In addition to this report and the work of the CDERC, both of which focused specifically on the ERCs, the NAE/NRC conducted other engineering-related studies in the period immediately following the establishment of the ERC Program. Given the size and "new-paradigm" impact of the Program on engineering research and education, these studies invariably referenced the ERCs and in many cases shaped their recommendations in light of the new program.

One example was the report of the Engineering Research Board, mentioned in section 2-D(b).[49] Describing directions in specific areas of engineering research, the Board pointed to the ERCs as models for the formation of centers by other entities in such fields as biomedical engineering (NIH)[50] and manufacturing systems (the Semiconductor Research Corporation).[51] The ERCs had already changed the culture of academic engineering.

2-F Lessons Learned From Start-up

For government or other program initiators:

- Start with a team that has spent several years inside your organization trying to change its direction and that has experience in overcoming barriers.

- Support that team with higher-level administrators committed to the new mission. Without Erich Bloch as Director of NSF and Nam Suh as the AD for Engineering—both committed to redirecting academic resources to support industrial competitiveness—Preston believes that the ERC Program would have had a much harder time in starting up.

- Select centers where the leadership and teams are from the home university(-ies), with experience in trying to work across disciplines and with industry—not PIs who are transplants from other universities or industry and unfamiliar with their new home university culture.

- Stay flexible and agile as the program starts up, so that new processes and procedures can be invented easily without excessive bureaucratic review.

- Once the new centers are funded, build a community of awardees and program directors committed to fulfilling the goals of the program and sharing lessons learned to inform other centers and the funding agency.

- Gain outside input from leaders of your community on needs for reform and ideas on how best to start up the program.

[1] National Academy of Engineering (1984). Guidelines for Engineering Research Centers: A Report to the National Science Foundation. Washington, DC: National Academy Press. [https://doi.org/10.17226/19472]

[2] McNinch, Syl (1984). “National Science Foundation Engineering Research Centers (ERC)—How They Happened, Their Purpose, and Comments on Related Programs.” Report prepared for Dr. Carl Hall, Acting Assistant Director for Engineering, September 14, 1984. p. 6.

[3] National Academy of Engineering (1984). Guidelines for Engineering Research Centers: A Report to the National Science Foundation. Washington, DC: National Academy Press, pp. 1-2. [https://doi.org/10.17226/19472]

[4] NSF (1984). Program Announcement, Engineering Research Centers, Fiscal Year 1985, April 1984. Directorate for Engineering, National Science Foundation, p. 1.

[5] Ibid., p. 1.

[6] This was the first time that research programs funded by NSF were expected to include undergraduates in the research. That feature was added because the industrial personnel advising the NAE voiced this need, as many of their hires had only B.S. degrees.

[7] This component was written in response to the NAE's desire for a broad impact on engineering, but it had the unfortunate consequence of producing proposals with too many faculty engaged to be manageable, and it was quickly dropped from subsequent announcements.

[8] McNinch, op. cit., p. 7.

[9] Mayfield, Lewis G. (1986). Nurturing the Engineering Research Centers. In The New Engineering Research Centers—Purposes, Goals, and Expectations. Report of the Cross-Disciplinary Research Committee, National Research Council. Washington, DC: National Academy Press, p. 51. [https://www.nap.edu/catalog/616/the-new-engineering-research-centers-purposes-goals-and-expectations]

[10] NSF (1984). Op. cit., p. 2.

[11] GAO (1988). Engineering Research Centers: NSF Program Management and Industry Sponsorship. Report to Congressional Requesters (GAO/RCED-88-177, August 1988). Washington, DC: General Accounting Office, pp. 15-16.

[12] Walker, Eric A. (1986). The Criteria Used in Selecting the First Centers. In The New Engineering Research Centers—Purposes, Goals, and Expectations. Report of the Cross-Disciplinary Research Committee, National Research Council. Washington, DC: National Academy Press, p. 47.

[13] Year 1 Funding of ERC Classes of 1985 and 1986.

[14] Belanger, Dian Olson (1997). Enabling American Innovation: Engineering and the National Science Foundation. West Lafayette, IN: Purdue University Press, p. 217.

[15] Bozeman, Barry & Boardman, Craig (2004). The NSF Engineering Research Centers and the University-Industry Research Revolution: A Brief History Featuring an Interview with Erich Bloch. Journal of Technology Transfer, 29, 365-375, p. 371.

[16] National Research Council (1986). The New Engineering Research Centers: Purposes, Goals, and Expectations. Cross-Disciplinary Engineering Research Committee, summary of a symposium, April 29-30, 1985, National Research Council. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/616]

[17] Ibid., p. 5.

[18] Don Kash was the George Lynn Cross Research Professor of Political Science and Public Policy at the University of Oklahoma and Chair of the NRC’s Cross-disciplinary Engineering Research Committee, which provided guidance to the ERC Program in its first three start-up years.

[19] National Research Council (1986). Evaluation of the Engineering Research Centers. Cross-Disciplinary Engineering Research Committee. Washington, DC: National Academy Press. [https://doi.org/10.17226/19240]

[20] GAO (1988). Engineering Research Centers: NSF Program Management and Industry Sponsorship. Report to Congressional Requesters (GAO/RCED-88-177, August 1988). Washington, DC: General Accounting Office, p. 26.

[21] “NSF guidance states that the TAC report should address the following: (1) management of the ERC and its leadership, (2) the quality of the research program, (3) the education program with particular response to undergraduate education, (4) the extent and reality of industrial participation, (5) the extent and reality of state and university support, and (6) specific comments and recommendations to the program director for improvement of the ERC.” Ibid., p. 23.

[22] Ibid.

[23] Ibid. The database administrator, Dr. George Brosseau, is quoted in the GAO document. He joined the ERC team in 1986.

[24] Interview with Carl Hall carried out at NSF by Courtland Lewis (SciTech Communications, LLC) and Lynn Preston, February 3, 2011.

[25] National Research Council (1987). Directions in Engineering Research: An Assessment of Opportunities and Needs. Engineering Research Board. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/1035]

[26] Ibid., p. 4.

[27] Ibid., p. 62.

[28] Adam, J. (1985). Response to NSF research centers may test relationship of science and engineering communities. The [IEEE] Institute. January 1985, p. 6.

[29] National Academy of Engineering (1989). Assessment of the National Science Foundation’s Engineering Research Centers Program: A Report for the National Science Foundation by the National Academy of Engineering. Washington, D.C.: National Academy of Engineering, pp. 8-10.

[30] Bozeman, B. & Boardman, C. (2004). The NSF Engineering Research Centers and the University–Industry Research Revolution: A Brief History Featuring an Interview with Erich Bloch. Journal of Technology Transfer, 29, 365-375.

[31] McNinch, Syl (1985). Engineering an Expanded and More Active Role for NSF. NSF Report No. 85-x. Washington, DC: National Science Foundation. February 4, 1985, p. 8.

[32] Ann Becker, President of Ann Becker Associates (ABA), served that role from 1986 to 1989. In 1990, Court Lewis began organizing the meeting programs with the ERCs and NSF, while ABA handled meeting logistics.

[33] National Research Council (1986). Report to the National Science Foundation Regarding the Systems Aspects of Cross Disciplinary Engineering Research. Cross-Disciplinary Engineering Research Committee. Washington, DC: National Academy Press. [https://doi.org/10.17226/19220]

[34] After Lehigh graduated from NSF support, Bill continued to serve as the ILO for the graduated center, which was still actively involved with industry. In 2001, he became Lehigh’s first Associate Vice President for Government and Economic Development.

[35] National Research Council (1986). Op. cit.

[36] National Research Council (1987). The Engineering Research Centers: Leaders in Change. Cross-Disciplinary Engineering Research Committee, summary of a symposium. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/18889]

[37] James Solberg was the Director of the Purdue ERC focused on intelligent manufacturing systems after the death of its first Director, King Sun Fu, in 1985.

[38] National Research Council (1985). Information and Technology Exchange Among Engineering Research Centers and Industry. Cross-Disciplinary Engineering Research Committee, report of a workshop, June 11, 1985. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/19282]

[39] National Research Council (1986). Report to the National Science Foundation Regarding the Systems Aspects of Cross Disciplinary Engineering Research. Op. cit.

[40] National Research Council (1986). Evaluation of the Engineering Research Centers. Cross-Disciplinary Engineering Research Committee. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/19240]

[41] National Research Council (1988). Assessment of the Engineering Research Centers Selection Process. Cross-Disciplinary Engineering Research Committee. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/18932]

[42] National Research Council (1988). The Engineering Research Centers and Their Evaluation. Cross-Disciplinary Engineering Research Committee. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/18931]

[43] Given current NSF practices, these would not be released to the external community today.

[44] This practice was later dropped, as it became apparent that a persuasive chair could sway a team to his/her view without appropriate consideration of all views. The ERC PDs took over the process of guiding the site visit teams through the review criteria vis-à-vis the center's performance to arrive at a recommendation.

[45] GAO, op. cit., pp. 26-27.

[46] Ibid., p. 27.

[47] National Academy of Engineering (1989). Assessment of the National Science Foundation’s Engineering Research Centers Program: A Report for the National Science Foundation by the National Academy of Engineering. Op. cit. See Chapter 3 for a fuller discussion of the recommendations and impacts on NSF.

[48] National Academy of Engineering (1984). Guidelines for Engineering Research Centers. Op. cit.

[49] National Research Council (1987). Directions in Engineering Research: An Assessment of Opportunities and Needs. Engineering Research Board. Washington, D.C.: National Academy Press. [https://doi.org/10.17226/1035]

[50] Ibid., p. 110.