9-A Overall Program Management System

As was discussed in previous chapters, the ERC Program was revolutionary in many respects. Therefore, managing the Program at the NSF level required innovation in management practices just as much as the ERC host universities required innovations in research and education practices. These management practices encompassed processes and procedures internal to NSF, such as funding mechanisms, pre-award review processes, post-award oversight, etc.; and processes and procedures for centers, such as novel reporting requirements, required management structures, and the development of performance indices or measurements in order to assess centers’ contributions and outputs in light of the substantial taxpayer investment they were receiving. This chapter describes both of these types of program management innovations.

Most of the current National Science Board (NSB) and NSF Senior Management principles for centers originated with these innovative ERC program management practices, as noted in a 2007 Office of the Inspector General report on the management of eight NSF center programs.[1] For example, the ERC Program originated the following internal NSF processes necessary for successful center programs:

- Pre-award review system that includes criteria relevant to the value added from a center configuration, pre-award site visits, and briefings to the final review panel at NSF

- Development of the cooperative agreement mechanism for centers

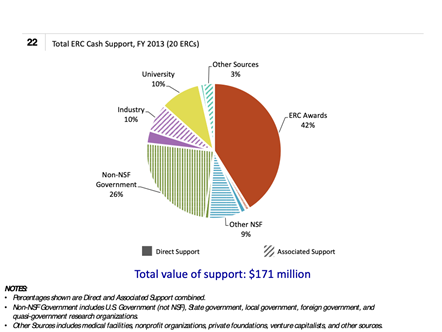

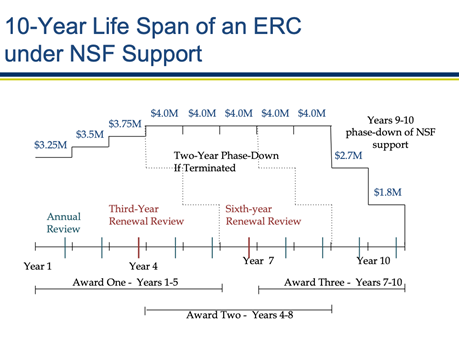

- Funding throughout a ten-year period at $2M–$5M per year, with a phase-down

- ERCs receive funding that ramps up from $2.5M to $4.2M over eight years as an incentive for performance, followed by phased-down funding in the last two years to prepare the ERC to plan for self-sufficiency and to develop business plans for achieving it

- Collection of data on post-award performance

- Post-award oversight through annual site visit reviews

- Renewal reviews to weed out poorly performing centers and extend the life span of the best centers to ten years

- Opportunity for centers to recompete with a refreshed and redirected vision

- External program-level evaluations, including studies and Committee of Visitors (COV) reviews.

Within NSF, the ERC Program shared its pre-award and post-award review and oversight processes and documents with other NSF center programs, including the Science and Technology Centers Program (via Program Managers Nat Pitts and Dragana Brzakovic), the Materials Research Science and Engineering Centers Program (Thomas Rieker), the Nanoscale Science and Engineering Centers Program (Mihail Roco), and the Science of Learning Centers Program (Soo-Siang Lim).

At the center level, the ERC Program originated center management requirements, including:

- Strategic planning at the center level

- An external industry advisory board and, later, an external scientific advisory board

- Specified management positions and management plans

- Purposeful integration of research and education.

Center-level best practices are shared through the ERC Best Practices Manual, written by members of the ERCs’ leadership teams and posted at http://erc-assoc.org/best_practices/best-practices-manual.

The following section provides a chronological overview of the evolution of the ERC Program management system.

9-A(a) Chronology of Events

i. Start-Up: 1984–1990 – Learning How

- Development of ERC cooperative agreement

- Funding term, or life cycle, fixed at 11 years at the request of the Office of Management and Budget (OMB) in 1986

- Post-award oversight system designed by Lynn Preston and implemented by ERC Program Directors (PDs), each assigned to monitor a subset of funded centers

- Development of post-award oversight system with annual and third-year renewal reviews

- Annual reporting guidelines

- Development of review criteria to define “excellent” to “poor” implementation of the ERC key features

- Database of quantitative indicators of inputs, progress, and impact established

- Implementation of third-year renewal reviews for Classes of 1985–1987

- Requirement for strategic planning—begun in 1987–88

- Third-year renewal reviews—begun in 1987

ii. 1990–1994 – Refining the Model and Preparing Older ERCs for Self-Sufficiency

- Start of sixth-year renewals

- Development of recompetition policy and approval by the NSB

- Emphasis placed on developing self-sufficiency plans

- NSB requirement that all center awards be limited to 10 years, impacting the Class of 1998 and forward

- Refinement of reporting guidelines from lessons learned at ERCs

- Expansion of database requirements due to requests from NSF and Congress

- Refinement and expansion of performance criteria based on experience and increases in requirements

- Lead ERC PD in ERC’s division supported by liaison PDs from other ENG Divisions

iii. 1995–2000 – Consolidation of Experience: Gen-2 ERCs

- ERC Program provides more development assistance based on knowledge developed by ERCs

- “ERC Best Practices Manual” written by ERCs; ERC Association website launched

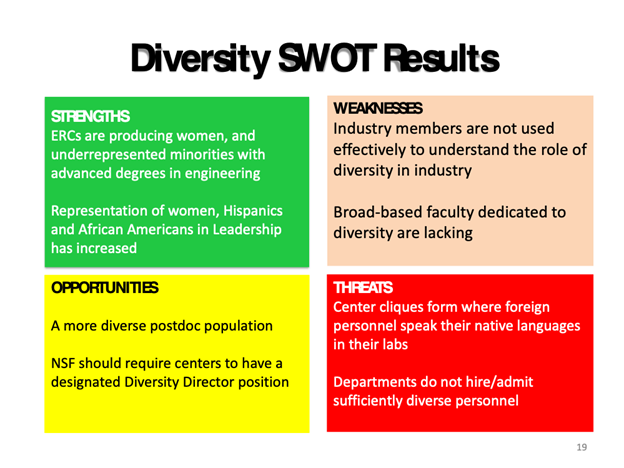

- SWOT Analysis process carried out at ERCs and included in site visit reporting

- Student Leadership Councils required

- Templates provided to ERCs to record and upload data for ERC database

- Some lead PDs now come from outside EEC Division

- Stronger start-up assistance provided through on-campus visits by NSF staff and ERC Consultancy of experienced ERC personnel

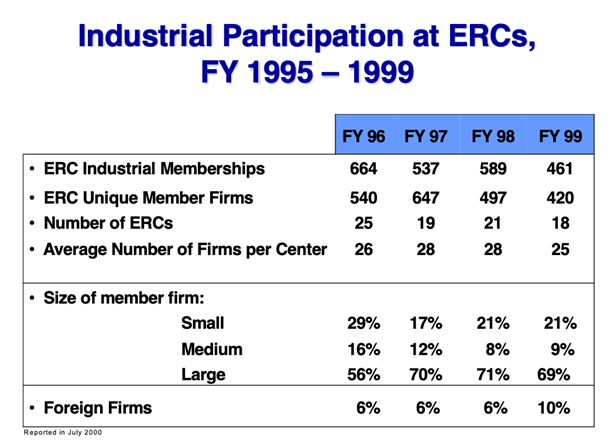

- Certification of industrial membership and tighter financial reporting due to lessons learned from malfeasance at two ERCs

- Second generation of strategic planning, improved through the 3-plane chart (1998)

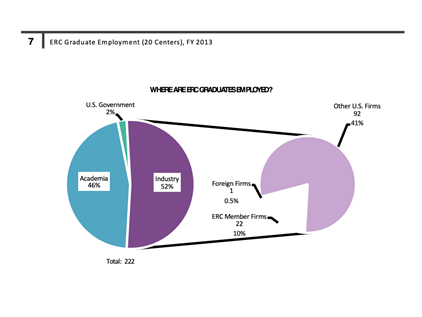

- Evaluation results indicate that Gen-1 ERC graduates were highly productive in industry, ERCs provided significant benefit to member firms, and ERCs had impacted the competitiveness of 67% of their member firms

iv. 2001–2006 – Evolving the Gen-2 Construct and Planning for Gen-3

- Improvements in strategic planning and systems constructs through 3-plane chart

- Evaluation results indicate that Gen-2 ERCs were even more productive than Gen-1 ERCs and had a greater positive impact on the competitiveness of their member firms (75%, compared with 67% for Gen-1)

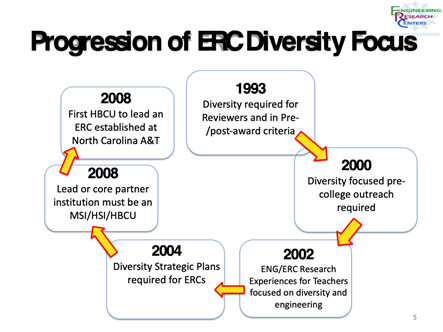

- Strategic diversity plans required

- Greater emphasis on innovation and small business development, as opposed to technology transfer

- New features developed through strategic planning for Gen-3 ERCs, including innovation and translational research support in partnership with small R&D firms; solicitation released

- New performance criteria developed for new Gen-3 ERC features

v. 2007–2014 – Gen-3 ERCs

- Initial classes of Gen-3 ERCs funded

- Definitions of the Education and Translational Research key features improved

- Second- and third-edition ERC Best Practices chapters released

- ERCs and Economic Stimulus–Innovation Fund: 2009

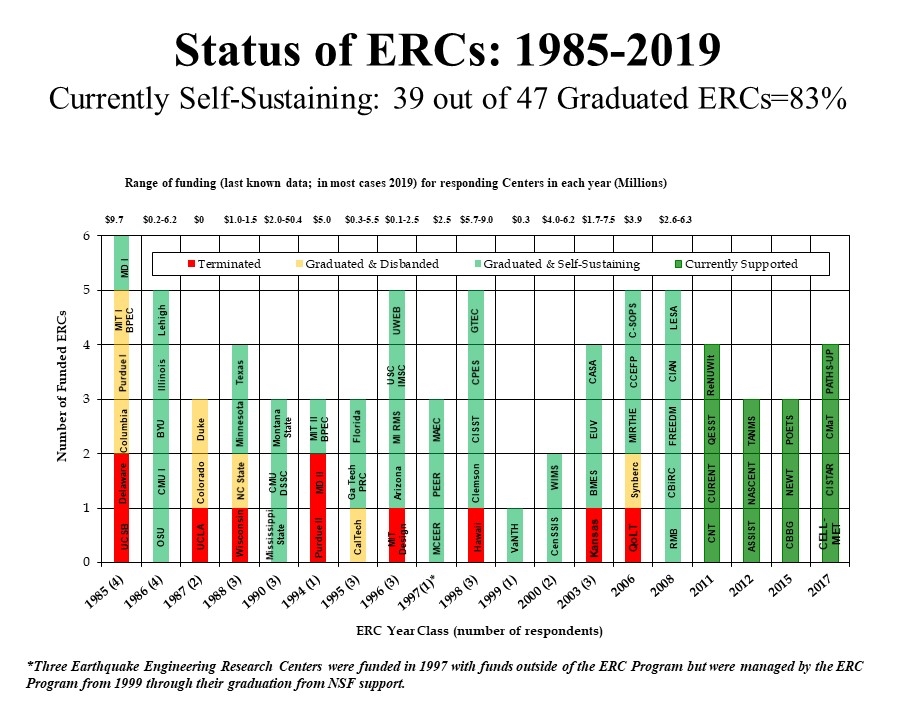

- Survey finds that 83% of graduated ERCs are self-sustaining and retain some or most ERC-like features.

Section 9-A(b), which follows, provides a history of the development and operation of the ERC post-award oversight system. Section 9-B describes other features of NSF’s oversight of ERCs and the management of the Program. Section 9-F describes the centers’ life span and the pattern of NSF funding relative to center operation and graduation.

9-A(b) Program Management Evolution

NSF’s traditional approach to generating proposals and monitoring the outcomes of awards had to change in order to successfully implement the 1984 vision of the ERC Program, as expressed by both the Executive Branch and Congress. They charged the ERC Program with a mission to change the culture of U.S. academic engineering in order to strengthen U.S. industrial competitiveness, and to do so with relatively large monetary awards. NSF’s culture at that time was to let proposals come in at the initiative of the principal investigators (PIs), carry out rigorous pre-award peer review, issue a grant, and then expect the awardees to publish results, which would serve a quality-control function, inform the scientific community, and contribute to the growth of scientific knowledge. Longer-term impacts on society or technology were not part of the mission of NSF’s basic research programs. Because the ERC Program had a mission not only to advance fundamental knowledge but also to advance technology and change the culture of engineering education, this passive approach to proposal generation and quality control was not appropriate.

Rather than relying on unsolicited proposals from university researchers, the ERC Program generated proposals by releasing a program announcement that specified the Program’s mission and the ERC key features that proposing teams would be obligated to fulfill. The review process included mail and panel reviews, with criteria geared to the ERC key features.

As the awards to the first Class of six ERCs were initiated in 1985, Pete Mayfield, who managed the ERC Program, and Lynn Preston, his deputy, realized that the traditional peer-reviewed publication of research results alone would not be sufficient to ensure the quality of the new centers. They understood that the large size of the awards and the critical mission of the ERC Program warranted an active system of post-award oversight. In addition, their prior experience in the RANN Program made them both wary of fully trusting academics to address the complex goals of their proposals without oversight. In RANN, some awardees had received large awards for complex interdisciplinary proposals with applied technology-advancement goals and then carried out the research in a way that ignored those goals. For Preston, this wariness derived directly from her own RANN experience: she had asked for statements of progress and then made site visits, either alone or with a small team of academics, and found a few awardees carrying out their research in a business-as-usual mode without addressing the interdisciplinary goals of their funded projects. This experience convinced her of the need for strong post-award management, and she was given responsibility for developing the post-award oversight system that would enable the ERC Program staff to monitor each center’s progress in achieving its proposed goals and, thereby, the goals of the ERC Program.

Preston’s ERC post-award system was an innovation for NSF at the time. It was an active system, governed by performance criteria defined by the ERC key features, implemented through a cooperative agreement tying performance to ERC Program and center proposal goals. It was carried out through post-award progress reports, monitoring by the ERC Program Manager and ERC Program Directors (PDs), and post-award peer review through annual on-site visits by teams of the ERCs’ peers from academe and industry, led by each ERC’s Program Director. In addition, industry advisors recommended that there be two, more stringent, renewal reviews: one at the third year, to weed out centers that were not able to set up systems to effectively address their proposed goals and the ERC Program’s goals; and another at the sixth year, to weed out those that could not mount a convincing engineered systems testbed.

As it developed over time, the oversight system can best be characterized, at least initially, as a “tough love” system. It worked much as a parent sets standards, monitors progress, and provides encouragement and corrective action to foster growth, creativity, and readiness for eventual independence. Strong developmental guidance was provided by the industry partners, the site visit teams, and the ERC Program staff. However, there was always a strict penalty for poor leadership in fulfilling the goals of a proposed ERC: termination of funding.

The post-award system grew in complexity over time as NSF itself grew into a more complex bureaucracy, the ERC Program budget grew, and the awards became large enough to draw oversight from the management team of the NSF Office of the Inspector General. This complexity was not always a positive feature of the system, as it became too prescriptive. A word of caution to those developing new center programs: keep the oversight system clear and focused, and resist using it to micromanage.

9-B Program Oversight System

9-B(a) Structure and Performance Standards

The basic structure of the post-award oversight system combined a major threat for poor performance with strong guidance for excellent performance. The threat was that the Program would not provide continued support to centers that functioned in an academic “business as usual” mode of operation without the necessary redirection required by the ERC key features. The guidance for excellent performance was provided by an oversight system with the “tough love” philosophy to help ensure that a center had the best guidance that NSF and the peer community could provide. If a center ignored that guidance or could not perform effectively, funding would be terminated.

The following processes were created to allow the ERC Program to achieve its mission. These were firsts for NSF and over the long run served as models for other center programs soon to be added to the NSF portfolio, such as the Science and Technology Centers Program.[2]

- Development of an ERC Cooperative Agreement that specified performance expectations and program goals. This award instrument was adapted from an agreement used for facilities awards; the ERC agreement marked the first time it was used for a research award. It combined features of contract and grant instruments, specifying some performance expectations as a contract would while allowing research flexibility as a grant does. In particular, the ERC cooperative agreement included:

  - Goals and features of the ERC, taken from its proposal

  - Strategic research planning

  - By 1987, submission of a long-range research plan (a strategic plan) detailing how the center would carry out its work and within what timeframes

  - Responsibility to establish a partnership with industry and hold annual meetings with industry

  - Requirement to attend the ERC Program annual meeting with NSF staff and other centers

  - Requirement to establish and maintain a database in order to provide NSF with quantitative indicators of the center’s activities and progress in meeting the center’s and Program’s goals

  - Provision that continued support would depend, among other things, on an annual review of progress.[3]

- Initially, the expected life span (period of NSF funding) of an ERC was 11 years. However, in the late 1980s the NSB implemented a requirement that all NSF center awards be limited to 10 years, which affected the ERC Classes of 1998 and forward.

- Development of reporting requirements and guidelines to ensure that the ERC focused on its goals, gathered information to support its performance claims, and reported that to NSF. These were summarized in an Annual Reporting Guidelines document that ERC program staff sent to centers.

- Development of post-award performance criteria relevant to each key feature to define “excellent” to “poor” implementation of the key features (See linked file “Gen-2 Performance Criteria–Final”[4] for a later evolution of the original criteria.)

- Annual on-campus expert-peer review site visits led by ERC program directors and conducted under guidance common across all funded ERCs.

- Third-year renewal site-visit reviews began in 1987

- Sixth-year renewal site-visit reviews began in 1990

- Development of an ERC Program-level database to record quantitative information about the ERCs’ resources, funding, and outputs. This information allowed ERC outputs to be measured quantitatively in order to demonstrate the success of the centers in achieving program goals. The linked file “1998 Data.ppt” shows the types of data collected by ERCs and displayed by the ERC Program.[5]

- Development of a community of sharing through annual meetings of NSF staff and the leaders and key staff of the ERCs to share successes and failures so as to improve individual center and overall ERC program performance. (See section 9-J for further discussion of community-building activities.)

9-B(b) Evolution of the Oversight System To Achieve ERC Program Objectives

The importance and visibility of the ERC Program and its revolutionary trajectory led to a structured and “aggressive” post-award oversight system. The high expectations placed on the ERCs meant that the Program staff were devoted to strict oversight to help ensure that the ERCs successfully addressed and achieved their complex, interdependent goals and delivered a changed culture for academic engineering as well as a new generation of engineers better able to contribute quickly in their roles in industry. A major way the Program staff provided this oversight was through the annual peer-review site visit. However, developing the appropriate response to findings from the annual site visits was another challenge of managing a new, nationally recognized program.

For example, the outcome of one site visit to a center in the Class of 1987 was brought to the attention of Nam Suh, then the Assistant Director for Engineering, for guidance on an appropriate action. The ERC appeared to have ignored the systems goals it had stated in its proposal. After discussing the case with the staff, Dr. Suh asked them to send a warning shot across the bow of that ERC: only half of the next year’s annual support was provided on the annual start date, and the remainder was withheld until the research plan was adjusted to address the systems goals. This response demonstrated the advantage of using a cooperative agreement as a funding instrument, because it gave NSF leverage to affect the performance of the center. The action produced the desired result, a strengthened commitment to systems, and the remainder of the ERC’s annual funding was restored.

The first set of third-year renewal site visits was carried out in 1987 to review the renewal proposals of the first six ERCs, the Class of 1985. For those site visits, the review teams were charged with determining whether sufficient progress had been achieved along a trajectory that matched the centers’ goals and the Program’s goals. NSF staff often used the phrase “not business as usual” to refer to the nature of that trajectory. The review teams were charged with assessing whether a center had structured a new type of research program that had long-range systems technology goals and had joined multiple disciplines in research to address them. They also expected the focus of education to be on developing students, both undergraduate and graduate, who were familiar with industrial practice and with integrating knowledge to advance technology. Industry was expected to support the ERC financially and to provide guidance on the research and education programs, which the faculty integrated into their planning. Two of the six third-year renewal site visit teams determined that the ERCs they reviewed were not meeting the required standards, primarily because the centers’ cultures had not shifted far enough from “business as usual” to give NSF confidence that they would grow into fully successful ERCs. NSF therefore decided to terminate funding for these two centers at the end of their first five-year cooperative agreements. However, to protect the students from an abrupt termination of funding, NSF reduced the funding gradually, by one-third in each of the next two years.

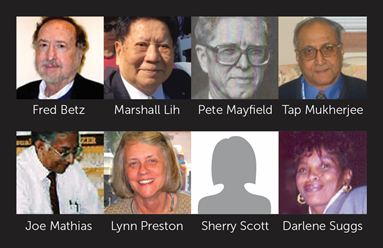

After carrying out many annual and renewal site visits (in years 3 and 6 of a center’s life) throughout the 1980s and into the early 1990s, Preston and Marshall Lih, then the EEC Division Director, began to develop a different approach to site visit reviews. While strict adherence to program goals and high standards of performance remained necessary, the annual site visit reviews became more developmental, giving the ERC more guidance on how to improve performance, while the renewal site visit reviews remained judgmental. The “love” part of the “tough love” philosophy thus came into balance with the “tough” part. Through the years, this approach led to successful third- and sixth-year renewals for most of the 64 ERCs functioning from 1985 to 2014, the cutoff year for this History. Eight ERCs (12%) did not pass their renewal reviews and were phased out; the last to fail a renewal review was from the Class of 2003. All of the remaining ERCs passed their sixth-year renewal reviews, although five of them had to repeat the review in the seventh year to address serious but fixable weaknesses, especially in their systems testbeds.

9-C Evolution of Program Announcements, Review, Awards, and Agreements

9-C(a) Announcements

As was referenced in Chapter 2-A(b), and mentioned earlier in this chapter, the NSF team charged with developing and managing the new ERC Program decided that relying on unsolicited proposals from university researchers, as traditionally was done, would not generate proposals that would be able to meet the goals of this new program. Thus, the team settled on writing a program announcement (later called a program solicitation) so that they could provide explicit guidance on the required features of this new type of center. This instrument had been pioneered in the RANN program and was subsequently used throughout the Foundation in the late 1970s and early 1980s.

Over a 30-year period, these announcements (solicitations) served two roles. The first was to define the information necessary to submit an ERC proposal: key features, proposal requirements, and review criteria. This information became more detailed and specific over time as knowledge grew about what it takes to develop and manage a successful ERC. (See linked file “Evolution of ERC Key Features”.) The second role was as a teaching tool. As knowledge of best practices and pitfalls for ERCs grew, NSF staff decided to include this knowledge in the solicitations, with references to the online “ERC Best Practices Manual.” In this way, they sought to level the playing field so that proposers new to the ERC concept would not be at a disadvantage relative to those from universities with more ERC experience.

Preliminary proposals, or pre-proposals, were introduced in program announcement NSF 94-150 to reduce the proposal-preparation burden on academe. However, the two-stage process significantly lengthened the time from release of the announcement to the award, from one year to as long as two years. Longer award-approval processes at the level of the NSF Director also contributed to the increase in processing time.

i. ERC Configuration Requirements

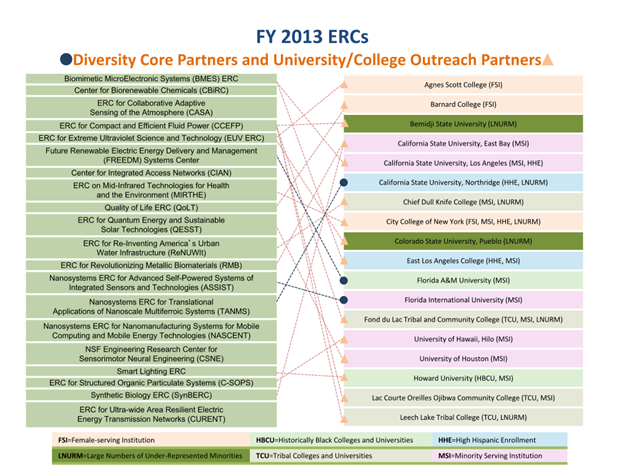

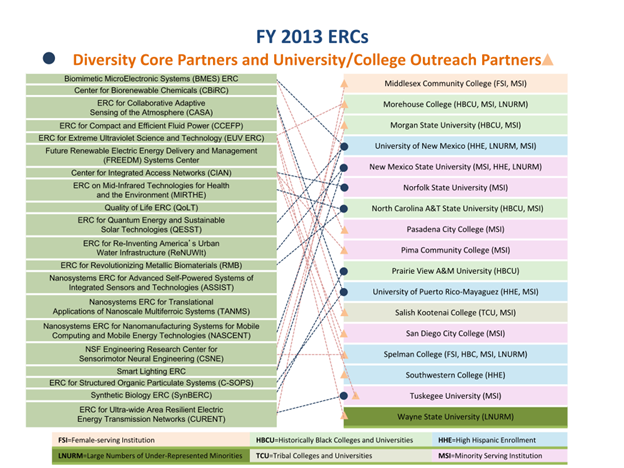

Over time, ERCs gradually became more complex in structure, moving from a single university to a configuration of a lead university and a few core partners plus, at times, affiliated universities. This trend was initiated by the ERCs themselves through their proposals and was facilitated by the communications made possible by the World Wide Web. NSF’s diversity requirements broadened those partnerships to include universities that predominantly served groups underrepresented in engineering.

Gen-1 ERCs (simple configuration): Classes of 1985–1990[6],[7]

- A U.S. academic institution with engineering research and education programs as the lead, in some cases with affiliates.

Gen-2 ERCs (more complex configuration to improve lead university team skill base and diversity): Classes of 1994/1995 through 2000[8],[9],[10],[11]

- U.S. academic institution with graduate and undergraduate engineering research and education programs (NSF 93-41)

- May be a single university or multi-university proposal; if the latter, one institution is the lead and submits the proposal, and programs must be integrated

- Same as above, plus: to be considered a joint partner, a university must have a significant role in the planning and execution of the ERC; if its participation is at the project level only, it should be called an affiliate

- NSF 97-5: Lead institution must be a Ph.D. degree-granting U.S. institution with undergraduate and graduate research and education programs; partner or affiliated institutions need not be Ph.D. degree-granting

- Partners should be involved on a flexible basis based on need and performance

- NSF 98-146: Same as above, but with only 1–3 long-term partners, plus a limited number of outreach partners at the single-faculty level to broaden impact.

Gen-2 ERCs: Classes of 2003–2006[12],[13]

- NSF 02-24: Same as above, plus pre-college partners may be identified

- NSF 04-570: Multi-university configuration required, with lead and up to four core partners, plus limited number of research and outreach partners, including at least one NSF diversity awardee.

Gen-3 ERCs (outreach expanded to include foreign university partnerships): Classes of 2008–2013[14],[15],[16],[17]

- Lead and up to four domestic partner institutions, one of which served large numbers of underrepresented students majoring in STEM fields; later (NSF 07-521), the specific limit of up to four partners became “a manageable number” of partner institutions.

- Domestic affiliates not required, but may be included

- May include collaborations and partnerships in research and education with faculty at foreign universities or foreign institutes that function in the pre-competitive space, as opposed to new product development; by NSF 11-537, such partnerships were required

- Long-term partnerships with pre-college educational institutions

- Partnership with university, state, or local government organizations devoted to innovation and entrepreneurship.

This evolution of configuration requirements reflects an evolution in NSF policy to broaden the types of institutions involved in ERCs, so as to ensure the involvement of a more diverse body of faculty and students in NSF awards. Those developing new center programs should take care to keep the required team configuration motivated by two complementary goals: finding the skill base needed to address the center’s vision and increasing the diversity of participants.

ii. Management Plan

NSF always understood that ERCs, because of their complexity, would be a management challenge in academe, where the single-investigator culture predominated. Thus the program announcements gave more specific guidance on organization and management than on the other required features. In the early and middle years of the Program, it was necessary to point out expectations regarding tenure and promotion practices because of the cultural changes inherent in the ERC construct. It was also necessary to point the ERCs toward management systems that would support self-sufficiency (i.e., continuation as cross-disciplinary centers after NSF funding ended). Mechanisms for advice from the ERCs’ peers, outside the site visit mechanism, were introduced to broaden the input and feedback available to the centers. However, these mechanisms were eventually dropped because Center Directors felt that outside peers, other than those serving on NSF-led site visits, often had apparent conflicts of interest as competitors in the ERCs’ fields.

Evolution of ERC Management Plan Requirements:

Gen-1 ERCs: Classes of 1985–1990[18]

- Mechanisms for selecting research projects, allocating funds and equipment, recruiting staff, and disseminating and utilizing research results

- Organizational chart

- Procedures to ensure high-quality research

- Added in FY 1986:

  - Information on faculty promotion and tenure practices for faculty involved in cross-disciplinary research

  - University planning and plans for self-sufficiency

  - Plans for management of industrial participation, support, and interaction.

Gen-2 ERCs: Classes of 1994/1995 through 2000[19],[20],[21]

- NSF 93-41:

  - Role of Center Director and key management associates (Industrial Liaison Officer, Administrative Manager,[22] and support staff)

  - Procedures to plan and assess center activities to assure high-quality research and education relevant to center’s goals

  - Process for allocating funds

  - Plan for collaboration with any partner institutions

  - Organizational chart

  - Tables of committed financial support from all sources

- NSF 97-5 dropped the planning specifics; added an education coordinator and a financial manager, projected funding by source, and a financial plan for allocating funds by function; and required full proposals to include a long-term financial plan for self-sufficiency

- NSF 98-146 added back collaboration plan for multi-university ERCs, added an advisory system, project selection and assessment, and specified reporting to the Dean of Engineering. (Most ERCs already proposed a management structure where the Center Director reported to the Dean of Engineering, but some had proposed to report to a department head instead, which was not acceptable.)

Gen-2 ERCs: Classes of 2003–2006[23]

- Management and associated performance and financial management information systems needed to deploy center resources to achieve its goals

- Mechanisms for securing external advice from academic and industrial experts to set strategic directions, select and assess projects, and develop internal policies, including cross-university policies for multi-university ERCs

- Full proposals only: Allocation of funds by function and by institution (Year 1 only).

Gen-3 ERCs: Classes of 2008–2013[24]

- For a multi-institution ERC, the Center Director reports to the Dean of Engineering, who leads a Council of Deans (a management structure that was assumed but needed to be reiterated because one ERC had begun reporting to a department)

- Function with sound management systems to ensure effective integration of its components to meet its goals

- Sound financial management and reporting systems

- Sound project selection and assessment systems that include input from Scientific and Industrial/Practitioner Advisory Boards

- Student Leadership Council required

- Organizational chart

- NSF 13-520 added:

  - Process for SWOT (Strengths, Weaknesses, Opportunities and Threats) analyses by Industrial/Practitioner Advisory Board and Student Leadership Council

  - Table of committed funds by source (not by firm)

  - Functional budget tables (invited full proposals only)

  - Plan for distribution of funds by institution for year one (NSF invited full proposals only).

iii. University Financial and Cultural Support Requirements

Gen-1 ERCs: Classes of 1985–1990[25]

- Show university support in proposed budget

- Added in FY 1986: Information required on faculty promotion and tenure practices for faculty members involved in cross-disciplinary research.

Gen-2 ERCs: Classes of 1994/1995 through 2000[26]

The vague reference to university support in the program announcements of the Program’s first five years led PIs and their universities to propose high levels of university support, which were often difficult to deliver. This led to tighter definitions of, and restrictions on, statements of university support.

- Substantial university financial support, i.e., cost sharing, with a letter committing to this support

- Formal recognition of the cross-disciplinary, industrially relevant culture of the ERC

- Tenure and reward policies to support involvement in the ERC.

Gen-2 ERCs: Classes of 2003–2006[27]

- NSF 02-24: Same as above, plus university cost sharing of 10 percent, as stipulated by NSF policy, which applies only to the lead and core partner institutions, not to affiliates

- NSF 04-570: Cost sharing raised to 20 percent.

Gen-3 ERCs: Classes of 2008–2013[28]

- Cost sharing eliminated for all awards by NSF policy (Class of 2008)

- Academic policies to sustain and reward the ERC’s cross-disciplinary, global culture; its goals for technological innovation; and the role of its faculty and students in mentoring and pre-college education, i.e.:

- Policies in place to reward faculty in the tenure and promotion process for cross-disciplinary research, research on education, and research and other activities focused on advancing technology and innovation

- NSF 09-545: Cost sharing restored for centers by Congress; policy requirements same as above.

iv. Non-university Financial Support Requirements

Gen-1 ERCs: Classes of 1985–1990[29]

- The only mention is in regard to the budget, where a separate schedule was required to show the total operating budget of the ERC including funds from NSF and other sources, including the proposing university(ies).

Gen-2 ERCs: Classes of 1994/1995 through 2000[30]

When pre-proposals were introduced in 1994 with NSF 94-150, the submission of financial support tables and commitments from non-NSF sources was required only at the full proposal stage.

- Substantial support from non-NSF sources, including funds and equipment donations from participating companies and state and local government agencies, and exchange of technical personnel with industry

- Table listing the current and committed future member firms, including letters of commitment for industrial financial support

- Table of data on current and committed industrial, university, state, and other support and, by 1998, tables on how total support will be expended by function of the ERC

- Review criteria on strength of financial support from non-NSF sources.

Gen-2 ERCs: Classes of 2003–2006[31]

- Same as above

Gen-3 ERCs: Classes of 2008–2013[32]

- Dropped from NSF 07-521 at the request of the NSF Policy Office, but commitments for industrial membership imply financial support

- NSF 09-545 and later solicitations restored the requirement for financial support from industry and other sources at the full proposal stage, as well as from academe at the preliminary proposal stage. Tables were required on expected support and how it would be allocated by function.

Over time, the management and financial systems became more complex. This reflected the complexity of the ERCs’ structure and the need for a financial system unique to each center, so that center personnel could manage the inflow and outflow of funds as if the center were a business within the university system, preparing it for financial success and self-sufficiency after graduation from NSF ERC support.

9-C(b) Pre-Award Review Process

While some government agencies make award decisions based only on staff input, NSF has always relied on the peer review system to gather input on quality. This is the foundation upon which the ERC Program rests. NSF award recommendations are made by NSF staff, taking into account input received through the peer review system and their judgment of needed future directions in a field. However, a new type of review and recommendation process was needed for ERC proposals and awards because the proposals were (and are) more complex than research proposals from a single investigator. The complexity stems from the requirements that ERC proposals establish a strategic vision and then integrate research across disciplines, develop a synergy between research and education, and establish a partnership with industry in order to accomplish the vision.

As was detailed in Chapter 2, Section 2-C(b), “Inventing a New Review Process,” the ERC Program had to create its own review process to handle this complexity. The process was multi-stage and began that first year with a review of the 142 full proposals submitted in response to the first ERC program announcement. To prepare for their arrival, the staff developed review criteria which were informed by the review criteria in the program announcement and expanded to focus on specifics related to those criteria. Those review criteria were:

Research and Research Team

- Is the research innovative and high quality?

- Will it lead to technological advances?

- Does it provide an integrated-systems view?

- Is the research team appropriately cross-disciplinary?

- Is the quality of the faculty sufficient to achieve the goals?

International Competitiveness

- Is the focus directed toward competitiveness or a national problem underlying competitiveness?

- Will the planned advances serve as a basis for new/improved technology?

Education

- Does the center provide for working relations between faculty and students and practicing engineers and scientists?

- Are a significant number of graduate and undergraduate students involved in cross-disciplinary research?

- Are they exposed to a systems view of engineering?

- Are there plans for new or improved course material generated from the center’s work?

- Are there effective plans for continuing education for practicing engineers?

Industrial Involvement/Technology Transfer

- Will industrial engineers and scientists be actively involved in the planning, research, and educational activities of the ERC?

- Is there a strong commitment or strong potential for a commitment for support from industry?

- Are new and timely methods for successful transfer of knowledge and developments to industry ready to be in place?

Management

- Will the center management be actively engaged in organizing human and physical resources to achieve an effective ERC?

University Commitment

- Is there evidence of support and commitment to the ERC by the university?

- Is there evidence that the university’s tenure/reward practices will not deter successful cross-disciplinary collaboration?

In subsequent years, as the ERC portfolio grew, NSF staff applied the following secondary criteria late in the review process, before making a final award decision:

- Geographic balance and distribution

- Whether the lead university had already been granted an ERC, since no more than two ERCs could be ongoing on a university campus at one time

- Whether the research area complements those of the already existing centers.[33]

The basic elements of the ERC review process were set up to review the proposals in 1985: first, individual and panel reviews to recommend proposals for site visits; then the site visits; and finally a “Blue Ribbon” panel review of the results of the site visits, including a final presentation to this panel by each proposed center director, resulting in award recommendations. That system served as the basis for ERC proposal review over the years, growing in depth over time with experience and NSF requirements.

In setting up the review system, Mayfield and Preston operated with an overriding ethical commitment that the review process could not be dominated by the opinions of the ERC Program staff, or those of one or two leaders in the field of engineering, or by higher-level management at NSF. It had to be grounded in the peer review system, collecting input from a broad base of people from a broad range of institutions and firms. It could not be swayed by Congressional pressure to fund an ERC in the state or district of a member of the Senate or the House of Representatives. That commitment prevailed throughout the period of this history.

i. Conflict-of-Interest Policies

One of the landmark policies NSF uses to uphold ethical standards is monitoring and controlling for conflicts of interest (COI). Initially, Mayfield and Preston set up the policy regarding conflicts of interest in the ERC peer review process. Basically, it excluded the review of a proposal by anyone from the institution submitting that proposal and any industrial person who had signed a letter of support for that proposal.

Regarding staff conflicts, prior to 1985 all NSF staff below the Assistant Director (AD) level were permanent NSF employees, so no academic conflicts existed at that level. However, in 1985, when the program started, the AD for Engineering, Nam Suh, was on leave from MIT and still an MIT employee. That year, he did not sit in on any of the panel meetings and was not present during the deliberations of the blue-ribbon panel that recommended awards. In 1986, however, he insisted on sitting in on the blue-ribbon panel meeting; afterwards, Mayfield and Preston decided that in the future, ADs should not sit in on those deliberations, both because an AD might have an institutional conflict of interest and because the AD would be the official recommending an award to the Director. In addition, any casual comments from someone at the AD level could be perceived by panel members as suggestions and “perturb” their deliberations. Briefing the blue-ribbon panel on the ERC Program and its importance to the Nation before the panel began its work was considered an appropriate level of involvement for an AD.

By the 1990s, NSF’s and the ERC Program’s COI policies became more complex for two reasons:

- Some of the ERC PDs came directly from universities to serve in a staff capacity but were still under the employment of their university.

- Congress mandated that NSF could not use reviewers who had a financial interest in any firm proposed to be a member of a center or serving as a member of any ongoing center.

These issues were addressed through expanded COI policies, which were reviewed and approved by Charles (Charlie) S. Brown, the Ethics Officer of the NSF Office of the General Counsel (OGC). The policy developed for ERC full proposals for NSF 98-146 was as follows regarding academic institution COIs:

- No NSF staff person may be involved with a proposal from his/her home institution.

- No reviewer may serve on a panel considering a proposal from his/her institution (while the usual NSF policy was to let that person leave the panel room during the deliberations of the conflicted proposal, that was not possible given the size of ERC awards and their pervasive impact on campuses).

- A reviewer from an institution that has submitted a proposal may sit on a panel that is not considering proposals from that person’s home institution.

- Deans, Vice Presidents for Research, or a person who serves in a similar role over several departments or schools in a university that has submitted a proposal may not serve on any panel.[34]

However, a Congressional regulation regarding financial conflicts of interest posed a serious threat to the integrity of the ERC review process (pre- and post-award), because as written it would have eliminated all reviewers with private investments, under their own control or that of their spouse and/or dependent child, in firms proposing to be members of a proposed ERC or already members of a funded ERC. Since the connection between a firm’s joining an ERC and any predictable financial reward to that firm is tenuous, let alone any reward to an investor in that firm, Preston felt that this restriction, as it related to the review of center proposals and to ERC post-award reviews, should be reconsidered by the OGC. She raised her concerns about the implications of this policy for the integrity of the ERC review process with Charlie Brown, and together they worked out a waiver system that was accepted by NSF and Congress. It read as follows:

“Technical and ERC panelists also will be asked to review the lists of industrial participants committing to financial support and involvement to ascertain if they individually own stock worth more than $5,000 in any of the firms. If they own stock worth between $5,000 and $50,000 they will be asked to sign an affiliate waiver. If the amount of ownership is greater than $50,000, the affiliate waiver will have to be signed by the Office of the General Counsel. These conflicts will be checked by the Program Director before the panel meetings so the waivers that need OGC approval will be processed before the meetings. This does not apply to those firms merely signing letters of interest without a financial commitment.”[35]

This waiver was eventually used across the Foundation in peer review of proposals and ongoing awards and remains largely in place today.
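The tiered rule in the quoted waiver language can be read as a small decision procedure. The sketch below is only an illustration, not an NSF tool: the function and variable names are hypothetical, and it assumes that a holding of exactly $50,000 falls in the panelist-waiver tier, because the quoted text reserves OGC signature for amounts “greater than $50,000.” Following the policy, firms that signed only letters of interest are excluded.

```python
from enum import Enum


class WaiverAction(Enum):
    """Outcome of the tiered stock-ownership check described in the waiver text."""
    NONE = "no waiver needed"
    PANELIST_WAIVER = "panelist signs an affiliate waiver"
    OGC_WAIVER = "affiliate waiver signed by the OGC"


# Dollar thresholds taken from the quoted policy.
DISCLOSURE_THRESHOLD = 5_000
OGC_THRESHOLD = 50_000


def waiver_action(stock_value: float, financially_committed: bool) -> WaiverAction:
    """Classify one panelist's holding in one industrial participant (hypothetical helper)."""
    # Firms that merely signed letters of interest are outside the policy.
    if not financially_committed:
        return WaiverAction.NONE
    # Only holdings worth more than $5,000 require any action.
    if stock_value <= DISCLOSURE_THRESHOLD:
        return WaiverAction.NONE
    # Assumption: exactly $50,000 stays in the panelist-waiver tier.
    if stock_value <= OGC_THRESHOLD:
        return WaiverAction.PANELIST_WAIVER
    return WaiverAction.OGC_WAIVER


def screen_panelist(holdings: dict[str, float], committed_firms: set[str]) -> dict[str, WaiverAction]:
    """Return only the firms for which a waiver must be processed before the panel meets."""
    results = {}
    for firm, value in holdings.items():
        action = waiver_action(value, firm in committed_firms)
        if action is not WaiverAction.NONE:
            results[firm] = action
    return results


if __name__ == "__main__":
    # Hypothetical panelist holdings; FirmD only signed a letter of interest.
    holdings = {"FirmA": 3_000, "FirmB": 20_000, "FirmC": 75_000, "FirmD": 90_000}
    committed = {"FirmA", "FirmB", "FirmC"}
    for firm, action in screen_panelist(holdings, committed).items():
        print(firm, "->", action.value)
```

In this hypothetical run, FirmB is flagged for a panelist-signed waiver and FirmC for an OGC-signed waiver; FirmD is skipped because it made no financial commitment, and FirmA falls below the $5,000 threshold. Running such a screen before the panel meets mirrors the policy’s intent that OGC waivers be processed in advance.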

However, the waiver policy had an impact on Preston’s ability to continue to use retired industrial VPs for Research or Chief Technical Officers because it required that they disclose any stock holdings where they had control over the funds invested. For some of these people, with considerable wealth, this was a burden; and for Preston, it proved to be a disincentive to using this type of reviewer as she felt the policy was an invasion of their privacy. Thus, in the late 1990s and beyond, there were fewer industrial reviewers with high level experience and in some cases, fewer academic reviewers who controlled their own investments.

Over time, the ERC Program’s COI policy for proposal review became increasingly complex because of the complex affiliations of NSF staff, and its internal impact broadened. Because professors who served at NSF in temporary staff positions remained employed by their home universities, that affiliation posed conflict issues regarding their knowledge of and involvement in the review of ERC proposals, given the magnitude and prestige of ERC awards. It would be inappropriate for these people to see lists of proposals or participate in their review, as they would then have information about proposals that might be in competition with proposals from their home institutions, and their home institutions might pressure them to disclose which other institutions had submitted proposals to the ERC competition.

As a consequence, policies to deal with this type of conflict were developed with Charlie Brown until he retired from NSF in 2007 and then with Karen Santoro, the NSF Ethics Officer who took his place. These were as follows:

- An Assistant Director for Engineering and his/her Deputy AD (DAD), a Division Director, or a Program Director could not participate in the review of ERC proposals or sign an award recommendation if one of the proposals in the competition was submitted by his/her home university.

- A conflict of interest occurred when that person’s university participated in an ERC proposal as the lead university or one of the core partner universities. An affiliate relationship, since it usually involved only one person, and not a large number of professors, would not trigger a conflict.

- If that proposal failed in one stage of the process (e.g., pre-proposal review), then the conflicted staff member could participate in the rest of the process.
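Taken together, the three staff-conflict rules above amount to a check that can be re-run after each review stage. The sketch below is a hypothetical illustration, not an NSF system: the class, function, and university names are invented. It models only the three listed rules: lead or core-partner participation triggers a conflict, affiliate participation does not, and a conflict lapses once the proposal fails at a stage. It does not model later modifications of the policy.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    LEAD = "lead university"
    CORE = "core partner"
    AFFILIATE = "affiliate"


@dataclass
class Proposal:
    # Maps each participating university to its role in the proposal.
    institutions: dict[str, Role]
    # Set to False once the proposal fails at some stage (e.g., pre-proposal review).
    still_in_competition: bool = True


def staff_is_conflicted(home_university: str, competition: list[Proposal]) -> bool:
    """True if the staff member's home university is lead or core partner on a live proposal."""
    for proposal in competition:
        if not proposal.still_in_competition:
            continue  # A failed proposal no longer conflicts the staff member.
        role = proposal.institutions.get(home_university)
        if role in (Role.LEAD, Role.CORE):
            return True  # Affiliate participation alone does not trigger a conflict.
    return False


if __name__ == "__main__":
    p1 = Proposal({"University X": Role.LEAD, "University Y": Role.AFFILIATE})
    p2 = Proposal({"University Z": Role.LEAD, "University W": Role.CORE})
    competition = [p1, p2]
    print(staff_is_conflicted("University X", competition))  # True: lead on a live proposal
    print(staff_is_conflicted("University Y", competition))  # False: affiliate only
    p1.still_in_competition = False  # p1 fails at pre-proposal review
    print(staff_is_conflicted("University X", competition))  # False: conflict lapses
```

Modeling the conflict as a function of the proposals still in competition, rather than as a fixed flag on the staff member, captures the third rule: the same person can be conflicted at one stage and free to participate at the next.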

After Preston left the NSF in early 2014, this policy largely remained intact, except that a modification enabled an ERC Program Leader who was then an academic employee working temporarily at NSF to manage the review process for pre-proposals with access to the lists of proposals but without access to the “jackets” with more detailed information. If his/her university received a full proposal invitation, then he/she would remain conflicted unless and until the university’s proposal failed. This COI policy is always subject to modification and may well have evolved further since that time.

ii. The ERC Proposal Review System

The components of the pre-award proposal review system and how it has functioned through time are described in detail in the linked file “The ERC Proposal Review System.”

NSF Award Recommendation and Decision Process

The process of determining award recommendations grew in complexity over time as NSF evolved into a more hierarchical bureaucracy. During the first decade and a half, 1985–2000, that decision process was managed by the Director of the division housing the ERC Program: Mayfield through mid-1987 and then Marshall Lih. Both worked jointly with Preston on the recommendations; she had begun leading the ERC Program by 1987. The issue usually was to determine which of the Highly Recommended and top Recommended proposals to recommend for awards. The ranking of the Blue Ribbon ERC Panel was the first point of reference. If there were sufficient funds to award all the proposals highly recommended by the ERC Panel, that was the outcome. If more proposals were highly recommended than funds could support, the ERC team recommended those with the highest rankings, complemented by consideration of the fields of the proposed centers in relation to the current portfolio of ERCs and National needs. Lih and Preston conferred with the ERC PDs who led the review panels and often asked for input from relevant division directors, but neither group had a formal role in the final decision process, so as to avoid “disciplinary lobbying,” i.e., “I want an ERC for my discipline.”

While the Assistant Director (AD) for Engineering had been involved in the initial decisions regarding which ERCs to fund in 1985 and 1986, by 1987 it became apparent that he/she should not initially be directly involved because the AD was the person recommending the awards to the Director through the National Science Board (NSB). When the recommendation decision was reached, it was then discussed with the AD, if he/she was not conflicted. If conflicted, it was discussed with the Deputy Assistant Director (DAD), who was usually a permanent employee of NSF.

When the recommendation decision was final, award recommendation packages were prepared for NSB review and signed by the AD/ENG or DAD/ENG. ERC and ENG staff presented the recommended awards to the NSB for their approval. If approved, that approval was sent to the Director, who then delegated the award process back to the AD/ENG, who in turn delegated it to the ERC Program’s Division Director, with instructions to prepare award recommendation actions. Award packages were signed by the AD/ENG and forwarded to the Division of Grants and Agreements—the division responsible for making an award to a university—for their review, approval, and action. Once that was achieved, the award instrument—the cooperative agreement—was sent to the university for their review and signature. At the end of that process, the award was official.

During the 1980s and early 1990s, Preston would organize a team of staff from the Directorate to review the NSB packages in advance, to check the strength of their analyses and recommendations and to find errors. She modeled this effort on her experience in RANN as a member of the RANN AD’s Grant Review Board (GRB), which served the same purpose. In 1991, Joseph Bordogna became the AD/ENG and was involved in the GRB process. When he became the Acting Deputy Director of NSF in 1996, he formed the Director’s Review Board (DRB) to assist him in reviewing award recommendation packages on their way to NSB approval. Also at that time, the NSB set award size limits on the proposals it would review. Individual ERC awards were too small to be reviewed by the NSB, so the DRB became the last “stop” in the process before approval was recommended to the Director.

This recommendation process continued until 2004, when it became more complex within the Directorate for Engineering (ENG). The internal review process for award recommendations requiring DRB or NSB approval was formalized and renamed the Engineering Review Board (ERB), with an expanded role in the recommendation process. The ERB now participated in the decisions regarding which proposals would be recommended for award, based on the recommendations of both the Blue Ribbon ERC Panel and the ERC Program. The result was a shift in “power” in the recommendation process from the ERC Program and its Division Director up to the AD’s level. The ERB reviewed the ERC Program’s recommendations regarding which proposals to recommend for award and eventually voted on which awards to recommend. This benefited the ERC Program by making more ENG staff familiar with it and by providing a broader base of disciplinary expertise to inform the final decisions about which proposals to recommend. The DAD was also responsible for managing any disciplinary “politics” affecting award recommendations.

Another layer of involvement by staff from the divisions of ENG was added in 2008 in the form of the ERC Working Group. This was a team of staff from the other divisions of ENG who had ERC experience, either as former ERC PDs or as site visitors. They became involved in the decisions regarding which pre-proposals to invite to submit a full proposal and remained involved in the process until the recommendations reached the ERB level. While their involvement may have broadened knowledge about ERCs among the staff of the Directorate, Preston and the other ERC Program staff found that it greatly complicated the review process, further lengthening the time it took to make decisions. They also found that some Working Group members did not spend enough time attending panel meetings to be as familiar with the proposals under consideration as Preston and the ERC PDs who led the panels were. Preston attended every panel meeting and read every proposal, and the ERC PDs who led the panels read all the proposals in their own panels and also attended other panels.

This process, in which new award and renewal recommendations passed through the ERB and then to the DRB, continued until 2009, when the Acting Director of the NSF, Cora Marrett, decided that ERC award and renewal packages were of such high quality that they did not have to be reviewed by the DRB. However, Tom Peterson, then the AD for ENG, requested that ERC award recommendations still be considered by the DRB so that its members, his counterparts leading the other Directorates, could remain cognizant of the ERCs. Thus, the role of the ERB continued as outlined above for new awards, but the ERB became the final stop for renewal recommendations. That decision was reversed after Preston retired, when Richard Buckius, the Chief Operating Officer of the NSF, who had previously been an AD/ENG, decided that both award and renewal recommendations had to be presented to the DRB for approval.

In 2012, the award recommendation process became even more concentrated in the Office of the AD/ENG. The DAD took over from the ERC Program the role of selecting full proposals. He formed a committee that included Preston (by then no longer the Leader of the ERC Program); Eduardo Misawa, the new Leader of the ERC Program; and two other division-level leaders who had no experience with the current competition or its panels. As might be expected, this move was not well received by the ERC Program personnel who had worked on the panels, because it left them out of the decision process and put someone who had not participated in the review panels in charge of full proposal selection. Suggestion: As you develop new programs, be mindful that taking selection decisions away from the staff who ran the review panels is not an optimal approach.

9-C(c) Agreements and Start-up

i. Award Instrument: The Cooperative Agreement

Once the award decisions for the first class of ERCs were made in 1985, Pete Mayfield, the Division Director at the time, and Lynn Preston understood that a new type of funding instrument would be required. A traditional NSF grant was too passive an instrument, as it did not allow NSF to require the Center Director (the Principal Investigator, or PI) to deliver on the proposed goals of the ERC. On the other hand, a contract would have given NSF too much control. Preston conferred with a grants officer in the NSF division that processed awards. After she explained to him that the ERCs had to structure programs to fulfill the research, education, and industrial collaboration goals proposed and accepted for funding by NSF, they decided that the appropriate instrument would be a cooperative agreement. This agreement is basically a set of two-way obligations. The grants officer had developed cooperative agreements for the ocean science programs to oversee the purchase and deployment of research vessels, so that model served as a starting point.

An agreement was structured for the ERC Program on that basis. The ERC Program goals were taken from the program announcement and the individual ERC’s proposed features were taken from the proposal and included in the agreement, so that the ERC PI would be obligated to harness resources to fulfill them. Funding amounts and schedules, reporting requirements, special requirements for a particular center, and joint NSF-awardee activities were stipulated. The PI was required to form an industrial advisory committee and hold annual meetings with industry, to attend annual meetings of ERC PIs and staff with NSF, and to disseminate the center’s findings in research and education. In time, the agreement was expanded to require the ERC to keep a database in order to provide NSF with quantitative indicators of activities and programs in meeting ERC Program goals. ERCs were required to submit an update of their strategic plan, within 90 days of their award, which served as a tool to assure that the strategic planning process remained in the forefront of the research programs. They were also required to submit annual reports, which grew in complexity over time.

If future center-level budgets were to grow, that growth would depend on performance. Using financial incentives to stimulate performance was a new mode both for NSF and for academics, who now had to provide evidence of performance beyond publications. The agreement also stated that continued NSF support would depend upon an annual report and an annual review of the ERC’s progress. Preston wanted to use the annual reports as a management tool at the center level, so that at least once a year the ERC team would have to get together and reassess its goals and progress, analyze its level of delivery, and determine needed course corrections for the next year, or for the next five years in the case of a renewal review. The agreement also had protections for the ERC to prevent the NSF ERC Program Director from exercising too much control over the ERC and attempting to usurp the role of the PI.

Initially, the recipient university or universities were obligated to provide the proposed cost sharing. Any state or local government was expected to provide its promised support, and industrial support was required, but expected levels were not stipulated.

The ERC was required to report to the Dean of Engineering, to position it equal to or higher than a department in the academic hierarchy of a school of engineering and to signify and cement its cross-disciplinary structure, since housing it in a disciplinary department would be counterproductive.

These agreements grew in complexity and specificity over time, as did the ERC Program and its awards. Initially, there was little engagement from the NSF division that actually executed (funded) the awards (the technical oversight was provided by Preston and her team in a different division), but that changed over time as well. The ERC Program’s cooperative agreement became a challenge to the funding division, where personnel were more familiar with the grant instrument as a funding method. Personnel had to be trained to understand how to monitor and guide the development of the cooperative agreements, which were increasingly used by other center programs at NSF. The name of the funding division became the “Division of Grants and Agreements” and there was one officer assigned specifically to the ERC program, initially Tim Kashmer, who worked closely with Preston and her staff in the development and execution of the agreements, and over time he became an expert in cooperative agreements.

For further detail based on an actual agreement template, see the Program Terms and Conditions in the master agreement that was used in 2006.[36] Note that the agreement provides significant guidance regarding performance components and expectations, so that it served not only to detail requirements but also to guide how the features should be achieved. Terms and best practices were joined in one document because of concern that many ERC leaders and/or their university supervisors would not fully understand how to achieve the Program’s goals. The financial terms were contained in a separate document.

Once the agreement was signed, the award went into effect. Funds were provided on a billing-back, draw-down basis.

ii. New Center Start-Up: How to Successfully Launch an ERC

Initially, center start-up was quite informal. Preston and Mayfield met at NSF with the Directors of the first class of ERCs and their Administrative Directors plus key faculty leaders after the NAE symposium announcing their awards to personally congratulate them. The additional goals of the meeting were to inform them of the general terms of their cooperative agreements, the Program’s expectations for performance, and the fact that there would be annual reviews and a third-year renewal review in 1987, plus a Program-level annual meeting that they and their staff would be expected to attend.

As discussed previously in other chapters and later in this chapter (section 9-J(a)), the ERC Program annual meetings were designed to serve as platforms for sharing Program-level information and center-level sharing of challenges, successes, and failures. The basic message was that the NSF staff and the ERC participants were learning together how best to fulfill the ERC Program’s mission. As more experience at the center level was validated through annual and renewal reviews, sessions were set up at the annual meetings for experienced ERC key leadership to brief new leaders on how best to start up their centers.

The first edition of the ERC Best Practices Manual, written by ERC leaders, was published in 1996 on the Web through a new site, www.erc-assoc.org, and later served as another tool for helping new ERCs get off the ground without repeating approaches that did not work under the ERC construct. By 2000, the new centers were given a place in the meeting agenda to briefly present their visions and goals, thereby informing other ERC staff at all levels about these new centers. In addition, as centers graduated from NSF support, they also had time on the agenda to present their goals and achievements, so all could see what they had achieved.

However, Preston felt over time that more was needed to help new centers, given the scope, complexity, and impact of the ERC awards. Beginning with the Class of 1996, rather than waiting for the ERC Program annual meeting, Preston brought small teams of leaders of the new ERCs to NSF for a briefing on effective practices in starting up an ERC. While this served a useful purpose, she was concerned that the impact was narrow and that full communication of NSF’s expectations depended on how well the one or two leaders brought to NSF for these briefings understood start-up and how well they communicated it back home.

With the start of the Class of 1998, she took the startup process on the road to have a broader impact on a new ERC’s team and its university administrators than was possible by relying only on one or two ERC leaders coming to NSF and the ERC annual meetings as startup training tools. The on-campus startup meetings combined a celebration of the award and training. After the long and arduous review process that took over a year, a new ERC Director could use this meeting as a means to bring the faculty team back together, to start the Industrial Advisory Board and begin the membership process for on-board and new firms, and to bring the university administrators together in support of their ERC.

Preston and the lead ERC PD (and a co-PD if there was one) went to the lead university’s campus. The meeting began with a celebratory dinner, where she and the ERC PD could congratulate the ERC team and the lead and partner universities on winning the prestigious ERC award and the Center Director could formally congratulate his/her team. This dinner involved a broad base of academic leaders, the ERC’s industrial supporters, often state and local government officials, and even students who had played a key role in the proposal development and review process. The next day, the new ERC’s team presented its start-up goals and features, and NSF staff briefed the team on up-to-date best practices in research, education, industrial collaboration, administration, and financial management.

The new-center briefing also gained depth on the administrative side through the leadership of Barbara Kenny, an ERC PD to whom Preston delegated responsibility for the reporting and oversight system in 2008. Kenny also coordinated a team of ERC Administrative Directors, who met monthly by conference call to discuss center administration issues. They suggested that part of the start-up celebration meeting be devoted to in-depth training of new ADs, since the AD role was so different from other academic responsibilities. Kenny recruited Janice Brickley, the Administrative Director of the ERC for Collaborative Adaptive Sensing of the Atmosphere (CASA), headquartered at the University of Massachusetts-Amherst, to organize those briefings and to train both the ADs of newly started ERCs and new ADs at ongoing ERCs. Brickley and Kenny both briefed on how to manage the administration and finances of a new ERC. These start-up briefings began in 2008.[37],[38]

Brickley brought a wealth of experience based on her role as the AD for CASA and her contributions to a later edition of the ERC Best Practices Manual chapter on Administrative Management. She started her briefing to the new ADs as follows, quoting from the Best Practices Manual:

“…to all of the day-to-day challenges of operating an industry-oriented, multidisciplinary Center on a university campus are added the extra dimensions—geographic, logistical, administrative, legal, cultural, and psychological—of requiring separate institutions to collaborate closely.”[39]

9-D Post-Award Oversight System

9-D(a) Annual Reporting

i. 1984–1990: Brief Reporting Guidelines and Minimal Data Collection

During the early years of the ERC Program, the ERC Program leaders at NSF and the ERC center leaders at the universities were learning together how best to start up and operate an ERC. Right after the awards for the first class of ERCs were made in 1985, Preston got agreement from Pete Mayfield, the ERC Office Director, and Nam Suh, the head of the Directorate for Engineering, that ERCs had to report on their progress and outcomes. This was a break with NSF tradition, because prior awards had been made in a passive mode with little regard for accountability. The only expectation was that the awards should result in the advancement of science and engineering.

However, under the new ERC Program, each ERC was much more than its own individual award; it was now part of a community of ERCs that together would fulfill not only their own visions but also the mission of the overall ERC Program. In light of this, Preston strongly argued that the ERCs should prepare annual reports on their progress, plans, outcomes, and impacts, because Congress would be looking for evidence of how these ERCs affected U.S. competitiveness. In addition, she argued, the exercise was important not just for external reporting but for the internal management of the ERCs. She reasoned that at least once a year, each ERC had to stand back from the day-to-day work to assess progress, plan for the future, and revise and build the team for the next year. Mayfield was at first uncomfortable with this approach because, having been at the Foundation since the late 1950s, he was more accustomed to NSF’s traditional passive practice of oversight. However, through her conversations with him, Nam Suh, and Erich Bloch (then the Director of NSF), Preston secured agreement that the ERCs would have to prepare annual reports and would receive post-award site visit reviews.

That requirement was written into the first cooperative agreement, and minimal guidelines were issued for the first annual report, due in the winter of 1986, requiring chapters on the key features of an ERC and its management. The responses varied considerably across the centers, ranging from one that essentially stitched together individual reports by the PIs who received the funding to others that provided more thoughtful analyses of progress. The data the centers provided on inputs and outputs varied widely as well. The next year, for the second annual report, due in the winter of 1987, more substantial but still minimal guidelines were provided to the ERCs, including an expanded research section covering strategic planning, with milestone charts depicting deliverables and discussions of testbeds to explore proof of concept. In the winter of 1988, the annual reports and renewal proposals for the third-year renewal reviews were due, and the reviews took place in March 1988.

The guidelines for preparing annual reports evolved over this time with the help of a GAO assessment of the ERC program in 1988. The assessment included an evaluation of the post-award oversight system. GAO experts provided Preston with useful guidance on how to prepare performance criteria and reporting guidelines, which helped her develop and improve the oversight system for the fledgling ERC program.

The annual reports also provided information for the post-award site visit teams to review and evaluate the progress of the center toward meeting its goals. By 1987, the site visit teams (then called Technical Advisory Committees) were instructed to prepare site visit reports on the following topics: (1) management of the ERC and its leadership, (2) the quality of the research program, (3) the education program, with particular attention to undergraduate education, (4) the extent and reality of industrial participation, (5) the extent and reality of state and university support, and (6) specific comments and recommendations to the Program Director for improvement of the ERC.[40]

In addition, the site visit teams were provided with the guiding philosophy below:

It is not intended that an evaluation/review report merely be answers to these questions. Rather, the report should reflect the judgment of the (review) team regarding the progress and prospects of the ERC using these criteria as a frame of reference. They are intended to bring the reviewer up to speed on the goals and objectives of the ERC program. The application of the criteria to each center may differ depending upon whether or not the ERC was built on an existing center or is started de novo (anew), the degree of difficulty inherent in the focus of the center, the degree of difficulty inherent in the blending of the disciplines involved in the ERC, the degree of sophistication of the targeted industrial community, etc.[41]

ii. 1991–2002: More Intensive Reporting and Data Collection

The years between 1991 and 2002 represented a honing of the ERC key features based on experience, the creation of data reporting guidelines, improved definitions of data required, and significant augmentation of reporting requirements. The oversight system eventually included:

- A definition of key features designed to promote outcomes and impacts on knowledge, technology, education, and industry

- Review criteria and program guidance to ERCs emphasizing outcomes, impacts, and deliverables

- Strategic plans for research and education to organize resources to achieve goals and deliverables

- Database of indicators of performance and impact, designed to support the performance review system and reporting for ERCs and the ERC Program

- Annual reporting guidelines to focus reports on outcomes and impacts from past support, value added by ERCs, and future plans

- Annual meetings designed to encourage ERCs to share information on how to achieve cross-disciplinary, systems-focused research programs, transfer knowledge and technology to impact industry, and produce more effective graduates for industry.

iii. 1993 and After: Stronger Reporting Guidelines

In 1993, with the addition of Linda Parker, an evaluation specialist, to the NSF ERC team, Preston gained additional support in developing reporting and database guidelines. They worked with the Administrative Directors of the ERCs, who were responsible for gathering information and data from the faculty and staff and for preparing the reports in collaboration with the Center Directors. The purpose was to develop lines of communication about NSF’s need for information to provide to reviewers, to keep as records of performance, and to report to higher levels of NSF, the OMB, and Congress. The Center Directors also needed information about faculty and staff achievements and plans for the future. Data were required to provide a factual basis for claims about inputs (money, member firms, and students, faculty, and staff) and outputs (graduates, publications, patents and licenses, and technology transferred to industry/users).

The result was a set of database and reporting guidelines to be used by the staff of ERCs to gather information and develop their reports. Because the report writing was most often delegated to ERC staff, some of whom might not be fully familiar with the goals and expectations for performance of each key feature, Preston and Parker expanded the guidelines so they would serve as a “teaching tool” for ERC staff and participants about performance expectations and required information and formats.

During this period, the guidelines often were a “work in progress.” NSF was learning how to ask the ERCs for progress reports that would serve its oversight and reporting purposes, and the ERCs were learning how to gather information for the reports and how to prepare effective, readable reports that would also be used for internal assessments of progress and plans. There was a lot of push and pull: reviewers demanded more and more specific technical and financial information, while centers sometimes complained about the burden of reporting. Findings by the NSF Inspector General that a few centers had been less than honest in their reporting prompted new requirements that higher-level university officials certify industrial memberships and cost sharing, as well as new definitions of core projects and associated projects. In addition, as more ERC PDs came from an engineering background, they and the engineers in the ERCs shared a need for precisely defined requirements rather than requirements that allowed more flexibility.

By 2000, there had been several iterations of the annual reporting guidelines based on input from the ERCs, from a committee charged with improving reporting requirements, and from site visit teams. The annual reports originally consisted of a single volume, which provided information on progress and plans for the key features and management, plus data. At the request of site visit teams, a second volume was added to include summaries of project-level reports, the certifications, and budget and other required NSF materials.

In 2000, because Center Directors were still complaining about the reporting burden, Preston asked one of the ERC Program Directors to organize a committee, composed of personnel from the ERCs, to develop new and improved reporting requirements. Despite the desire to reduce the reporting burden, the committee recommended that the number of volumes of the annual report be increased from two to four:

Volume I: Vision/Strategy/Outcomes/Impacts

Volume II: Data Report

Volume III: Thrust Reports

Volume IV: NSF Cooperative Agreement Documentation (Budget, Current and Pending Support, Membership Agreements, Cost Sharing Report, Intellectual Property Policy Description).

NSF ERC staff did not implement the four-volume suggestion because they believed it would be difficult for site visit teams to juggle their reading among four volumes. For example, Volume I might make assertions about impacts, but the reader would have to shift to another volume to find the supporting data. The staff believed reviewers would not do this, since most reviewers read the reports shortly before the site visit or even on the airplane on the way to the visit.

Instead, in 2001, Parker, Preston, and the ERC PDs revised the reporting guidelines in a different way, drawing on input from the ERCs and this committee. (See the linked Guidelines file.[42]) The guidelines had the following format:

- Performance expectations and requirements by Key Feature, with a reference to a separate document containing a set of specific criteria, organized by feature, with definitions of high- and low-quality performance for each feature

- Reporting Requirements

  - Report scope – what to include

  - Two Volumes – Size and other requirements

  - Data tables suitable for inclusion in the report, to be generated by the ERC Program’s database contractor and containing data specific to the reporting ERC

- Volume I Requirements

  - Cover page requirements

  - Project Summary

  - List of all ERC Faculty

  - List of External Advisory Committees and Members

  - Table of Contents

  - Systems Vision/Value Added and Broader Impacts of the Center

    - Systems Vision

    - Value Added Discussion (Knowledge, Technology, Education and Outreach)

  - Strategic Research Plan and Research Program

    - Strategic Research Plan

    - Research Program (Thrust Level)

  - Education and Educational Outreach

  - Industrial/Practitioner Collaboration and Technology Transfer

  - Strategic Resource and Management Plan

    - Institutional Configuration, Leadership, Team, Equipment and Space

    - Management Systems and University Partnership

    - Financial Support and Budget Allocations

    - Budget Requests

  - References Cited

Volume I, Appendix I: Glossary

Volume I, Appendix II (Includes the following items):

- ERC’s Current Center-Wide Industrial/Practitioner Membership Agreement

- ERC’s Intellectual Property Agreement (if not part of the Generic Industrial/Practitioner Membership Agreement)

- Certification of the Industry/Practitioner Membership by the Awardee Authorized Organizational Representative