5-A Gen-1 (1985–1990)

5-A(a) Directed Research from Fundamentals Through Testbeds to Systems

Engineering Research Centers came on the scene as powerful forcing functions for partnerships in research across sectors and disciplines, in order to expand the capacity of academic engineering research to move beyond the exploration of first principles to advancing emerging technology so as to strengthen U.S. competitiveness. The prevailing scene in academe was one of disciplinary faculty working largely alone on research projects exploring first principles of their disciplines—a culture of “silos,” where disciplines developed their own vocabulary and functioned in a reductionist mode rather than a synthesis mode. The incentive structure—grants for single investigators and rewards for solo achievements—reinforced this culture. Industry too had its silos—research, separated from design, which was in turn separated from product development and manufacturing—although the ultimate goal, but not always the incentive structure, was for synthesis to achieve cost-effective and innovative processes and products.

By the 1980s a culture change in both academe and industry was needed if the U.S. were to become competitive in the face of rising competition from Japan and Europe. As the NAE guidelines recommending the ERC Program pointed out:

- Rapid advances in technology are driving engineering toward cross-disciplinary interactions…there is a growing need for engineering education that cuts across the engineering subdisciplines and applied science;

- Technological advances are also leading toward integration among design, engineering, manufacturing and marketing…(and) a need for engineers with a broad understanding of the overall manufacturing systems.[1]

Synthesizing the recommendations in those guidelines, the goals for the ERC Program that would bring about the needed culture change were:

- Develop fundamental knowledge in fields that will strengthen U.S. competitiveness;

- Increase the proportion of engineering faculty committed to cross-disciplinary teams;

- Focus on engineering systems and increase competence in new fields needed by industry;

- Increase the number of engineering graduates who can contribute innovatively to U.S. productivity;

- Include practicing industrial engineers as partners to stimulate technology transfer; and

- Join research and education.[2]

The ERC Program was a mandate for a new culture of engineering research—from first principles to engineering systems. The focus on engineering systems was an especially important component of ERCs, designed to impact the engineering research culture and produce an engineering workforce better able to strengthen U.S. competitiveness because a systems view of research required integration and synthesis of disciplinary knowledge. As discussed in Chapter 1, U.S. engineering schools had become too theoretical and too analytical. Rigorous grounding in fundamentals is a crucial component of engineering education, but it has to be complemented by experiences that give an integrated picture of engineering in practice and of the relationship between design and synthesis needed to build and manufacture complex engineering systems. Industry also was losing sight of systems issues and diminishing its support for the long-term research needed to address them, as short-term profits and a near-term outlook began to drive industry in the 1980s.[3] The ERCs were designed to spearhead a change in academic and industrial cultures.

In response, the ERC Program team developed ERC research features to specify how this culture change was to come about through the research component of ERCs. Initially these were:

- Provide research opportunities to develop fundamental knowledge in areas critical to U.S. competitiveness in world markets.

- Focus on a major technological concern of both industrial and national importance.

- Involve a cross-disciplinary team effort, contributing more to the focus and goals of the Center than would occur with individually funded research projects.

- Emphasize the systems aspects of engineering and educate and train students in synthesizing, integrating, and managing engineering systems.

- Provide experimental capabilities not available to individual investigators.

- Include the participation of engineers and scientists from industrial organizations in order to focus the research on current and projected industry needs and enhance understanding of systems aspects of engineering.[4]

Taken together, these features represented a radical approach to research: direct the research to achieve a desired next-generation engineering system, rather than waiting for those advances to emerge “spontaneously” from basic research. This approach required that academic engineers work in close research partnerships with industry engineers, as the academic culture lacked the understanding of how to advance technology and products and the knowledge of how to integrate and synthesize knowledge in real time to develop and manage engineering systems. In addition, at the time, there was a lack of synergy among research, design, product development and manufacturing in industry. Both sectors stood to gain significantly by building bridges across the academic/industrial divide, as it existed at the time. The participation of undergraduate and graduate students in this new research culture would achieve the desired integration of research and education and prepare a new generation of engineers better able to “hit the ground running” as they entered careers in industry and, also, better able to spearhead a broader culture change in academic engineering as they entered academic careers.

To address this mandate, 21 Gen-1 ERCs were awarded in the start-up period for the ERC Program, with 6 awarded in the first Class of 1985. The Gen-1 ERC research programs had the systems goals described in the file “Gen-1 Systems Goals.”

Given these centers’ complex systems goals, the challenge at NSF was to help the ERCs develop new ways to manage the cross-disciplinary research programs needed to address these goals. Through strategic oversight of the ERCs in partnership with industry, the NSF ERC team began to craft a new approach to managing academic research with complex systems goals. Steven Currall characterized the approach as “Engineering Innovation,” in his study of ERC strategic planning in 2004, and as “Organized Innovation” in his 2014 book based on the ERC Program.[5],[6] During the Gen-1 period, the ERC Program was learning how to put in place a new “framework or systematic method of leading the translation of scientific discoveries into societal benefit through technology and commercialization.” The ERC team put in place “conditions for technology breakthroughs that would lead to new products, companies and industries.” Currall characterizes organized innovation as consisting of three pillars: channeled curiosity, boundary-breaking collaboration, and orchestrated commercialization.[7] That outcome would take some time to achieve, and this chapter will explore how “organized innovation” came about through experimentation with new concepts in research management and organization.

5-A(b) Building Cross-Disciplinary Research Platforms

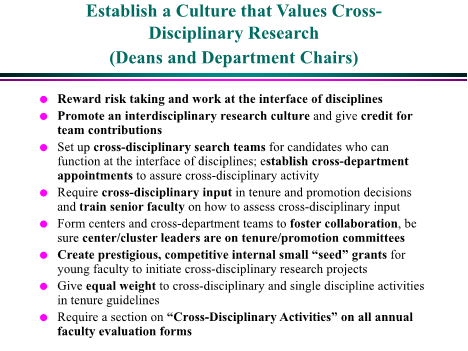

The first challenge was that each of these centers would have to take the time to build a collaborative research “space” where faculty from different disciplines could bring their skills and perspectives together to address the systems goals of their center. In the 1980s, opportunities to find support to join disciplines in research were rare and faculty were not encouraged to form cross-disciplinary teams which would enable them to collaborate and to bring their insights to bear on advancing technology or addressing societal problems. The ERC Program broke the ground for building these new spaces, setting an example for other programs in NSF and across the government and around the world to follow, and eventually to stimulate a reorganization of the academic culture that would play out over decades. Some new fields were generated as a consequence of these investments, such as biological engineering and neuromorphic engineering. However, for the ERCs to be successful the faculty had to be sufficiently motivated by shared goals to establish these collaborative spaces between their disciplines. It also took time and mutual respect for them to learn enough across fields to be able to communicate and collaborate. As a result, they could then bring their skills and knowledge to bear on challenging new problems that could not be solved without that collaboration and conversion of epistemologies. In addition, they were also learning to create research spaces which blended basic and applied research.

The following is a summary of the complex cross-disciplinary research spaces built by the Gen-1 ERCs.

BIOLOGICAL AND BIOMEDICAL ENGINEERING (Joining Engineering, Biology, and Medicine)

The following Gen-1 ERCs laid down new cross-disciplinary platforms that joined engineering, biology, and medicine and formed the basis for the start of new disciplines:

- Bioprocess Engineering Research Center at MIT joined biochemical engineering, chemical engineering, and molecular biology—forming the basis for the start of the new discipline of biological engineering.

- ERC for Emerging Cardiovascular Technologies at Duke University joined biomedical engineering, electrical and optical engineering, mechanical engineering, computer visualization and simulation, chemistry, cardiology, and physiology.

- Biofilm ERC at Montana State joined chemical engineering, electrical engineering, microscopy, and microbiology—forming the basis for the start of the new discipline of biofilm engineering.

ENVIRONMENT AND INFRASTRUCTURE

The following Gen-1 ERCs joined disciplines in research that led to the integration of structural engineering with sensing systems, the integration of chemical and environmental engineering, and the integration of structural engineering, materials engineering, and ocean science.

- Center for Advanced Technology for Large Structural Systems at Lehigh joined structural engineering, material science and engineering, electrical engineering, manufacturing engineering, robotics, and computer science.

- Hazardous Substance Control ERC at UCLA joined chemical engineering, civil engineering, manufacturing engineering, and plant and animal sciences.

- Off-shore Technology Research Center at Texas A&M/UT Austin joined materials engineering and science, ocean engineering and science, and structural engineering.

MANUFACTURING AND DESIGN

The broad array of ERCs focused on manufacturing systems and design joined several disciplines to bring about new paradigms in manufacturing, integrating industrial processes with information technology.

- Advanced Combustion ERC at Brigham Young University and the University of Utah joined chemical engineering, process engineering, electrical engineering, environmental engineering, and computer-aided design.

- Engineering Design Research Center at Carnegie Mellon University joined chemical engineering, civil engineering, computer science and robotics, electrical engineering, mechanical engineering, public policy, and management.

- Center for Composites Manufacturing and Science at the University of Delaware and Rutgers University joined materials science and engineering with manufacturing.

- ERC for Compound Semiconductor Microelectronics at the University of Illinois, Urbana/Champaign joined microelectronics, optoelectronics, and computer science.

- ERC for Interfacial Engineering at the University of Minnesota joined chemical engineering, materials engineering and science, and atomic microscopy.

- ERC for Net Shape Manufacturing at Ohio State University joined mechanical engineering and materials science and engineering.

- Center for Intelligent Manufacturing Systems at Purdue University joined electrical engineering with manufacturing engineering and computer science.

- ERC for Plasma-Aided Processing at the University of Wisconsin joined electrical engineering, chemical engineering, materials science and engineering, and nuclear engineering and physics.

MICRO/OPTOELECTRONICS, COMPUTING AND INFORMATION SYSTEMS

Some of these ERCs laid the groundwork for the transfer of knowledge of optics from physics to engineering, and joined electrical engineering and computer engineering and computer science with materials science, chemistry, and physics to advance micro and optoelectronic technology.

- Data Storage Systems Center at Carnegie Mellon University joined microelectronics, optoelectronics, and materials engineering and science.

- Engineering Center for Telecommunications Research at Columbia joined microelectronics, optoelectronics, computer science, industrial engineering and operations research.[8]

- ERC for Robotic Systems in Microelectronics at the University of California, Santa Barbara joined electrical engineering with robotics and manufacturing engineering.

- ERC for Optoelectronic Computing Systems at the University of Colorado/Colorado State joined microelectronics, optoelectronics, materials science and engineering, computer science, chemistry, and physics.[9]

- Systems Research Center at the University of Maryland and Harvard University joined systems engineering with electrical engineering, design automation, computer science, and information science.

- Center for Computational Field Simulation at Mississippi State University joined electrical engineering, computational science, computer science and visualization.

- ERC for Advanced Electronic Materials Processing at North Carolina State University joined electrical engineering, materials science and engineering, mechanical engineering, chemistry, and physics.

5-A(c) The Challenges of Systems and Testbeds

Understanding that the systems goals would be the most challenging aspect of the ERC Program, in 1985 the Program funded the Steering Group for Systems Aspects through the Cross-Disciplinary Engineering Research Committee of the Commission on Engineering and Technical Systems of the National Research Council. An Engineering Systems workshop was held in 1986. At the start of their deliberations, the members of the Steering Group remarked that graduating engineers were “equipped with the in-depth knowledge to adapt to rapidly changing technologies. What has suffered, however, is the crucial orientation toward industrial practice and needs that traditionally helped ensure technological eminence for the United States. The focus on analytical solutions is valuable, but in some cases it has gone too far. Engineering graduates entering industry no longer have the same ‘feel’ for systems synthesis that they once possessed, and the emphasis on specialized tasks in industry has done little to strengthen that orientation among practicing engineers.”[10]

The resulting culture emphasized theory and science, which were important, but the culture of hands-on product-and-practice orientation of academic engineering decreased. “A certain snobbishness appeared: Those who preferred to think in terms of synthesis or design of products, rather than research, became in some vague way second-class citizens.”[11] This culture was reinforced in industry through the large-scale industrial research and development laboratories that began to take on an academic “flavor” within a firm. Those who hired that type of engineer were impressed with the depth of knowledge but dismayed at how long it took for them to come up to speed. This engineering research and education culture was producing graduates who were “acquiring a notion that analysis itself—rather than the solution of engineering problems—is the focus of engineering work…but the existing curriculum tends not to impart an integrated picture of engineering, nor does it give a synthesis of complex, engineering systems. From the standpoint of industry needs, these are serious shortcomings.”[12]

“Industry had found that graduates from engineering schools were so immersed in the single-discipline focus of their professors, unfamiliar with technology and the integrative approaches needed to advance technological systems. New entrants had to be taught how to work in teams and how to depend upon the paradigms of other research disciplines needed to make incremental advances in production systems and develop new products.”[13]

These were the issues that drove the creation of the ERC Program and its focus on integrating disciplines to address engineering systems, from fundamentals to technology. The guidance to the ERC Program and the funded ERCs was to create a research and education culture that would address the shortcomings of the post-war academic engineering culture and build a new culture or “systems environment—going from narrow technical aspects of manufacturing to the broader techno-economic aspects, to the broadest techno-social concerns for national impacts.”[14] The Committee recommended that the systems environment of an ERC would have the following characteristics:

Research:

- Cross-disciplinary teams of engineers and scientists from separate disciplines should work as a team toward the solution of engineering research problems that have a direct bearing on near- and longer-term needs of industry or society

- Systems approach should focus on development of generic processes and principles, rather than an optimized product alone.

Education:

- Understanding of how systems are designed, manufactured, and supported in the field

- Not extensive curricular changes, but an increased exposure to the practical application of existing course material to the synthesis of engineering systems, with no single correct answer

- Hands-on experimentation and experience in systems design and development through exposure to industry personnel and methods of practice.

Practice:

- Interdepartmental approach to design and manufacturing as an integrated whole

- Understanding of all elements of the systems environment

- Ability to understand how the separate activities contribute to the solution to improve both product and process.[15]

Also in 1986, Erich Bloch asked the Office of Cross-Disciplinary Research staff, the home of the ERC Program, to support a workshop to explore the development of the emerging interdisciplinary field of management of technology. Bloch had been approached by Richie Herink, then the Program Director for Technology Management and Process Education at IBM, about the need to integrate the various disciplines that had been focusing on the issue of how to stimulate and manage technological innovation into a new field, i.e., Management of Technology. Lynn Preston and Fred Betz, an ERC PD who had managed an NSF program of Industry/University Cooperative Research projects, supported the workshop and worked with Herink to develop the steering committee and workshop agenda. The Management of Technology workshop, held in May 1986, brought together academic and industrial experts working in various aspects of the field.[16],[17]

The participants in the workshop agreed that in an “era of rapidly changing technology, a better understanding of the causes of inefficiencies in product development and better tools to manage technology development were needed. They recommended linking engineering, science, and management disciplines to address the planning, development, and implementation of technological capabilities to shape and accomplish the strategic and operational objectives of an organization.”[18]

Today the Management of Technology field is characterized by the image in Figure 5-1.[19] The steering group recommended that NSF support the development of this field because of the following catalysts for change in the 1980s:

- The pace at which new product and process technology is generated had grown exponentially, creating rapidly changing sources of competitiveness; and U.S. companies must stay abreast of and lead these changes.

- Product life cycles shortened significantly because of increasing engineering capability and consumers who more readily adapt to change.

- International competitors were dramatically reducing product development times: Japanese automakers had a 3–4-year product development time, compared to 6 years for U.S. automakers.

- These trends would force U.S. firms to adopt flexible equipment that could adapt to changing production needs and facilities that could manage integrated systems.[20]

NSF did provide support for the development of the field. A new Program Director with experience in the field was hired and a program announcement was issued.

Figure 5-1: Technology Management: The Integration of Management, Analysis, and Engineering Skills (Credit: University of Bridgeport)

The Management of Technology and Engineering Systems workshops both had significant impacts on the management of the ERC Program. Because of their experience before the initiation of the ERC program, Preston and Betz understood that a passive approach to research management in ERCs would not enable these centers to achieve their systems goals and the envisioned changes in their research and education cultures. Given the complex systems goals of the ERCs and the implied mandate for technology management, they began to explore how effective the first two classes of ERCs were in organizing and directing research programs to achieve their systems goals.

The first task was to work with the funded ERCs to better define their engineering systems goals and to expand their horizons beyond those goals to reach for a vision for new technology systems. It quickly became apparent that if the ERCs were to achieve their complex systems visions, they would have to move beyond theory and modeling to synthesis and integration in “an experimental demonstration of a systems concept.” Some of the early Gen-1 ERCs, like the Center for Telecommunications Research (CTR) at Columbia, understood at proposal stage that they would have to build integrative systems or testbeds on an academic scale in order to demonstrate their systems idea, which would reveal additional barriers in the way of demonstrating functionality and provide a flexible testbed for future systems concepts.

CTR Director Mischa Schwartz told the audience at the symposium announcing the ERC Class of 1985 that his ERC was:

“…implementing a highly flexible network testbed called MAGNET. MAGNET is a local area network of our own design capable of supporting integrated services such as data, facsimile, graphics, voice, and video communications. Through proper software design it will also emulate, at higher levels, integrated networks of various types. Once completed it can be used to study integration of services on local area networks, as well as to provide a testbed for trying out new system concepts as they are developed.”[21]

At the same symposium, Daniel I.C. Wang, the Director of the Bioprocess Engineering Research Center at MIT, also voiced a vision for new testbeds that would be needed to support the development of large-scale bioreactors capable of processing mammalian cell-based material and protein-secreting microorganisms through biosynthesis or biocatalysis. The research to support the testbeds would require the integration of knowledge from biology, engineering, and industry.[22]

During the discussions about the need to develop testbeds that took place between the ERC Program team and the ERC Directors and faculty at the 1986 ERC Annual Meeting, other ERC directors were still reluctant to develop testbeds because they were concerned that academic research should not produce “prototypes”. It was apparent that when the ERC Program pushed the academics into a research space between traditional academic culture and industry’s research culture, they became uneasy. The outcome of the dialogue pointed to the ERC testbed as a research tool, not a product prototype, and over time the testbeds took on the role of proof-of-concept testbeds. By 1987, demonstrating systems concepts in testbeds became a requirement. (See Section 5-D(b) for several examples of large ERC-developed testbeds.)

By 1993, the end of the Gen-1 period, systems were defined as follows:

“Systems integrate components to serve a processing function or product need. Some of the engineering systems that are being explored (in Gen-1 ERCs) include a knowledge-based design modeling system for rapid prototyping; a wide-band optical telecommunications network that integrates a signal transmission control system with voice, data, and image presentation systems, a deep-ocean tension-leg platform for offshore recovery of oil in deep water; and next-generation magnetic or magneto-optic data storage systems that optimize head/media interface to achieve higher rates of data storage.”[23]

5-A(d) Strategic Planning—Industry Guidance and Initial Attempts

As indicated in Chapter 3, the outcome of reviews of the first two classes of ERCs found mixed capability to organize faculty—accustomed to working alone—to develop shared goals and work together to address them. Some ERCs had begun to build a strong base of research to address their engineering systems goals and a new research culture was evolving that joined disciplinary capabilities to explore new barriers to technology. Some, however, continued to look like collections of single-investigator projects, with little or no synthesis, which was needed to address higher-level engineering systems goals.

Nam Suh, then the Assistant Director for Engineering at NSF, pointed to a major concern “that some centers do not have a vision…knowing simply where they are going…where the center should be three or five or ten years from now…. Some have lost sight of their goals to accommodate existing institutional power structures. It is business as usual for them…. They lack this vision because they are not doing the work proposed. Some have not expanded existing operations into a new effort. And some have not emphasized the cross-disciplinary thrust so important in any systems approach to a problem.”[24]

Industry voiced the same concerns: “What are ERCs going to deliver? Students and papers? We can already get that with the way we fund research now. The ERCs have to be focused on ‘deliverables’.”[25]

These comments foreshadowed the reasons why several of the Gen-1 ERCs, created both before and after the comments were made, would not succeed in their third-year renewal reviews.

To address these concerns, Preston led a team of ERC PDs to work with industry in February 1987. The result was a requirement that ERCs develop strategic plans for research. This reflected a philosophy that “directed” fundamental and applied research was a more effective means of achieving engineering systems goals than research motivated by the separate interests of faculty or targeted problem solving for industry. As an ERC Program staff member voiced to the GAO in 1987, “The goal was for these plans to serve to organize the research to reflect industry’s needs for deliverables and the researchers’ needs for freedom to pursue individual research interests.”[26]

The next ERC Annual Meeting, in 1987, was used to explore the strategic planning construct and how to develop it in an academic culture. There were working sessions on how to develop a strategic plan, how to define a deliverable, what a testbed would be in an academic setting, and what would constitute a technology deliverable or prototype. Preston remembers that the use of the words “deliverables” and “prototypes” caused some of the academics a lot of confusion and anxiety, as their primary goals in the past had been to deliver knowledge and publications, not technology, and that was how they were rewarded in tenure and promotion.

This conflict was ameliorated over time by a clarification that a technology deliverable would be an academic scale proof-of-concept testbed, as opposed to an “industrial” product or process prototype. Industry also recommended that these deliverables be early-stage and flexible so they could be adopted by member firms and pursued further in different ways. James Solberg, the Director of the Purdue ERC for Intelligent Manufacturing Systems noted at the 1991 ERC Symposium, that “strategic research planning was at first difficult for ERC participants to accept; but …all ERCs now agree that such planning is essential and that it does not necessarily lead to restrictions on the individual’s research if it is conducted in the right way. The ‘right way’ for strategic research planning in an ERC is not the same way that industry would pursue it; instead, it must be a form of planning that is appropriate to the university. It must provide ample freedom to maneuver, to shift directions, and capitalize on new ideas and opportunities. That is essential for good academic research. But it must also provide a sense of direction, expectations, and intended goals—i.e., overall strategy—so that the efforts of the group can be integrated.”[27]

At this symposium, Anthony Acampora, then the Director of the Columbia ERC, pointed out that an ERC’s “Strategic Research Plan:

- Identifies emerging technological trends that impact the Center’s charter

- Articulates the vision that drives the Center’s work

- Assesses technical feasibility

- Identifies key systems and technological challenges and their interdependency

- Contains research and education goals and provides a plan for achieving these goals

- Selects cross-disciplinary projects, identifies technological thrust areas, and projects milestones

- Provides a mechanism for industrial input

- Establishes review and updating procedure.”[28]

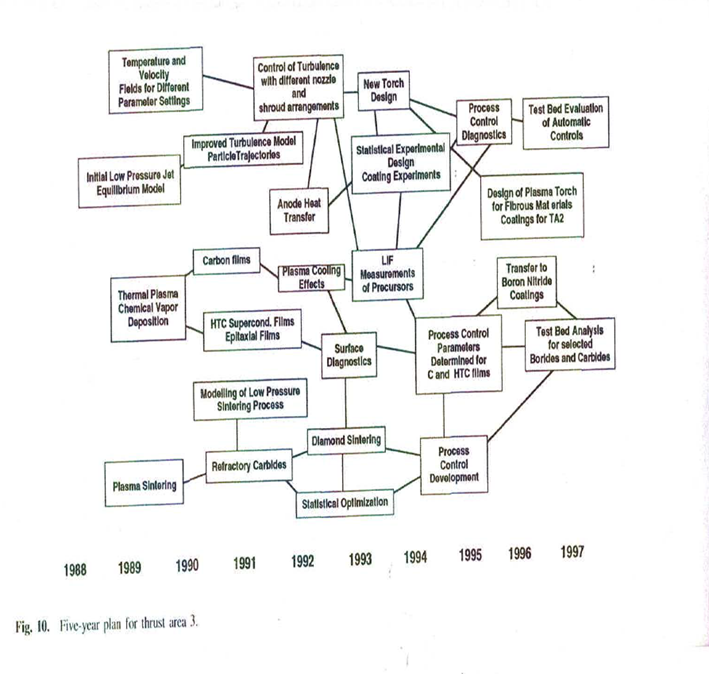

During the Gen-1 period, the initial approach to strategic planning was through the organization of the research programs into manageable “groupings” (what were termed research program thrusts), and milestone charts were developed to provide a visual representation of the plan at the center and thrust level. Figure 5-2 is an example of one of these thrust-level milestone charts, used by the Wisconsin Plasma-Aided Manufacturing ERC (Class of 1988) to manage Thrust 3, which was focused on plasma synthesis, sintering, and spraying.[29] The long-term goal was for proof-of-concept testbeds of automatic controls and a testbed to enable analysis for selected borides and carbides. This is a typical milestone chart for that period, and its shortcoming was a failure to depict the knowledge and intermediate technology needed to reach the proof-of-concept testbed stage.

By the end of the Gen-1 period in 1993, Preston voiced the issues as follows:

“Traditionally, research projects originated from avenues of inquiry generated by individual investigators or in response to a particular problem posed by an industrial sponsor. In contrast, the strategic plans put a fundamental new twist on a research program, directing it to ‘strategic’ knowledge creation. They focus on long-term advances, plan for intermediate demonstration of concepts in experimental testbeds to explore ideas along the way, and serve as road maps for identifying and integrating projects necessary to move toward the needed advances. The strategic plans involve a combination of science or knowledge-driven and technology-driven research. They are flexible and evolutionary allowing industry and academe to explore technological options, with the luxury of the possibility of success and failure. Both avenues lead to greater understanding of needed advances.”[30]

While that was the new paradigm for ERC strategic planning, there was still a long way to go before it would be operationalized from the technology vision on down to the research platform. For some ERCs with a strong and guiding vision for a technology, the technology-driven research program was easier to operationalize. That could be seen in ERCs where the systems testbed was crucial to explore the new concepts. For example, one of the technology systems goals of the ERC for Emerging Cardiovascular Technologies at Duke was technologies for cardiac defibrillation and arrhythmia prevention, so they developed research projects to understand the electrical fields within the heart and the waveforms of a shock to the heart, to model and simulate them, and to develop new electrodes and analog circuits. This work led to pioneering insights that biphasic waveforms delivered to the heart require less voltage and energy to defibrillate. It also led to improved defibrillators and eventually to industry’s development of the portable defibrillator discussed in Chapter 11.

Figure 5-2: Milestone Chart for Plasma-Aided Processing ERC’s Thrust 3 (Source: Center for Plasma-aided Manufacturing)

By the end of the Gen-1 period, it had become clear to ERC research leaders that strategic planning was essential for the success of an ERC, and that there were a number of essential components of such a plan:

- Establish a team culture, as opposed to the more traditional individual-research culture, both within and across universities;

- Ensure that the overall ERC vision and mission are articulated in the plan and shared by those in the research thrust leader’s area of responsibility;

- Define resource and budget needs, given the goals;

- Lay the groundwork to take advantage of the best communication technology (e.g., to facilitate “brainstorming sessions” and other necessary interactions); and

- Define succinct deliverables and outcomes on reasonable timelines.[31]

These basic elements remained consistent from then on in ERCs, with the addition in Gen-2 of a crucial planning tool that will be described in section 5-B.

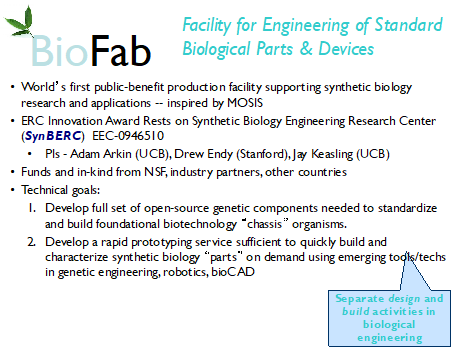

5-A(e) New Paradigms in Research

The combination of strategic knowledge creation driven by complex engineering systems goals and experimental testbeds led some ERCs to create new research areas. Prominent among these are biofilm science and engineering, catalyzed by breakthroughs at Montana State University’s Center for Biofilm Engineering (CBE); and bioprocess engineering advances at MIT’s Biotechnology Process Engineering Center (BPEC) that laid the foundation for the new field of biological engineering. The Synberc ERC provided major impetus to the emerging field of synthetic biology. Additionally, ERC Program investments in optics and optoelectronics in the late 1980s and early 1990s at several ERCs built a solid foundation for the field of optoelectronic engineering. All of these stories are told in some detail in Section 5-D(b), Emerging Fields Catalyzed by ERCs.

5-B Gen-2 (1994–2006)

During the second generation of the ERC Program, the research programs of the Gen-1 ERCs were in their later years of productivity. The research programs of the new centers created during this period benefited from the increased sophistication, within the ERC Program and among the ERCs, about how to develop a coherent engineered systems vision, structure a plan, and manage a research program to achieve it.

By 1998, the key research features required of ERCs reflected this greater refinement:

- A guiding strategic vision to produce advances in a complex, next-generation engineered system and a corresponding new generation of engineers needed to strengthen the competitive position of industry and the Nation in a global economy;

- A dynamic, evolutionary strategic research plan to focus and integrate the ERC to achieve its vision; and

- A cross-disciplinary research program, promoting synthesis of engineering, science and other disciplines, spanning the continuum from discovery to proof-of-concept in testbeds, and involving undergraduate and graduate students in research teams.[32]

The phrase “engineering systems” was changed to “engineered systems.” This changed the focus from a broader, almost cultural context that was hard to implement through research, to a technology concept that required engineering to deliver functionality. The ERC Program defined engineered systems as “deriving from integrating a number of components, processes, and devices to perform a function. The system may be living or inanimate in origin. It must be complex and challenging enough to justify a ten-year program of research. Analysis, modeling or development of individual components of a system, without their integration into a complex engineered system, is not an appropriate focus for an ERC.”[33]

The definition of ERC testbeds was articulated as follows: “proof-of-concept testbeds in ERCs are used to explore an ERC’s next-generation engineered system to determine if all components work together as planned and the system is feasible. The process of building the testbed and beginning to integrate various devices and components or processes often revealed barriers, which generated new fundamental research projects. These testbeds help to ensure that the research outcomes are integrated and tested and supply a framework for faculty, students, and industry representatives to work together and gain a better understanding of the realities of the system they are exploring and demonstrating.”[34] (See chapter 3, section 3-B for further discussion of engineered systems and testbeds.)

There were 28 ERCs awarded during the Gen-2 period. These were the ERCs that proposed systems visions, as opposed to systems goals, and those visions and their testbeds became increasingly complex as ongoing ERCs and new proposers better understood how to use strategic research planning to develop integrated, cross-disciplinary research programs to address their technology goals and systems visions. These 28 ERCs and their systems visions are summarized in “Gen-2 Systems Visions.”

Because of the complexity of systems visions of the ERCs awarded between 1994 and 2006, these ERCs also had to build cross-disciplinary platforms across fields where there had been little past collaboration.

Preston reiterated her definition of cross-disciplinary research, as opposed to multidisciplinary or interdisciplinary research, in her plenary address to the ASEE Engineering Research Council’s Summit in 2004, as follows:

- Multidisciplinary Research: Involves different disciplines that are not necessarily integrated

- Cross-Disciplinary Research: The integration of the capabilities of different disciplines to address a major challenge in research or technology

- Interdisciplinary Research: Long-term cross-disciplinary collaboration blurs the lines between the disciplines, often leading to new fields such as bioengineering, photonics, MEMS, etc.[35]

Some strong and lasting research collaborations were built between engineering and medicine. For example, the partnership between the schools of engineering, computer science, and medicine created through the CISST ERC at Johns Hopkins continues today and has significantly impacted surgical techniques there and across the country. As was noted earlier, some of these collaborations created new disciplines, such as biological engineering and synthetic biology. Some ERCs joined engineering with the social and policy sciences, such as the three Earthquake Engineering ERCs and the CASA ERC headquartered at the University of Massachusetts at Amherst, which reached beyond academe to include emergency management personnel.

BIOLOGICAL AND BIOMEDICAL ENGINEERING JOINING ENGINEERING, BIOLOGY, AND MEDICINE

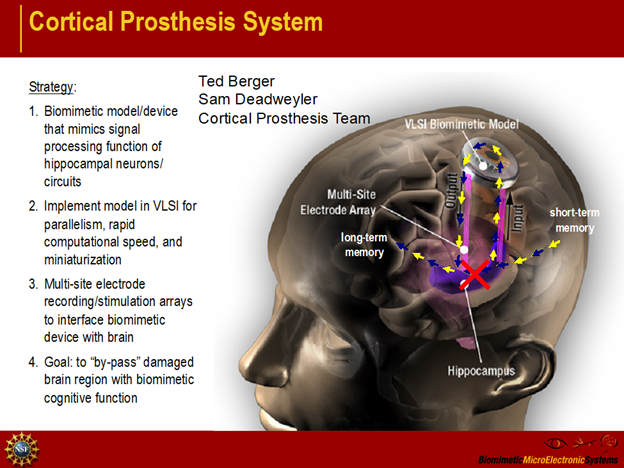

The ERCs in this cluster built strong collaborations between engineering and molecular biology and neurobiology in order to address their visions for gene biotechnology delivery systems, synthetic biology, or neuromorphic systems, for example. Some of the ERCs with technical underpinnings in electrical engineering reached out to form collaborations with medicine and biology to achieve their goals, such as an artificial retina at the BMES ERC at USC, the cochlear implant at the WIMS ERC at the University of Michigan, and a cortical implant at the Neuromorphic Systems Engineering Center at CalTech.

The cross-disciplinary research platforms built by these 13 ERCs were:

- Biotechnology Process Engineering Center at MIT joined biochemical engineering, chemical engineering, molecular biology, chemistry, and medicine.

- Center for Neuromorphic Systems Engineering at CalTech joined electrical engineering, chemistry, computer science, neurobiology, and medicine.

- Engineered Biomaterials ERC at the University of Washington joined biomedical engineers, biologists, chemists, materials scientists, physicians, and dentists.[36]

- Georgia Tech/Emory Tissue Engineering Center joined biochemical engineering, biology, and medicine.

- Computer-Integrated Surgical Systems and Technologies ERC at Johns Hopkins joined electrical engineering, biomedical engineering, computer science, and medicine, and in the process built one of the strongest partnerships between engineering and medicine among all the ERCs.

- Marine Bioproducts ERC at the University of Hawaii joined chemical engineering, biological engineering, biology, chemistry, and ocean science.

- The VANTH ERC at Vanderbilt University built unique partnerships between biomedical engineering, medicine, and engineering education research that plowed new ground in integrating core knowledge in engineering with that in engineering education to explore new ways of teaching biomedical engineering.

- The Wireless Integrated MicroSystems ERC at the University of Michigan devoted part of its effort to developing a cochlear implant that required the integration of skills in electrical engineering, materials science, and medicine.

- The Center for Subsurface Sensing and Imaging Systems at Northeastern University devoted part of its efforts to breast cancer imaging that required joining electrical engineering, biomedical engineering, computer science, and medicine.

- The Biomimetic MicroElectronic Systems ERC at the University of Southern California joined electrical engineering and medicine not only through the educational background of its director, Dr. Mark Humayun, M.D., but also through collaborating faculty via a partnership with the Keck School of Medicine and its Doheny Eye Institute and USC’s Viterbi School of Engineering. The cross-disciplinary team also included faculty with disciplinary expertise in electrical engineering, biomedical engineering, physiology, neurobiology, and biology.

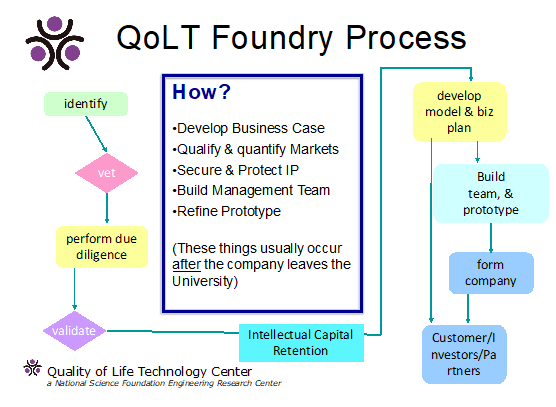

- The Quality of Life Technologies ERC at Carnegie Mellon built a complex cross-disciplinary space that joined computer science and robotics, electrical engineering, biomedical engineering, mechanical engineering, psychology, gerontology, and sociology.

- The Synthetic Biology ERC at the University of California at Berkeley joined biological engineering, chemical engineering, biology, ethics, and genetics to form over time a new discipline, synthetic biology.

- The ERC on Mid-Infrared Technologies for Health and the Environment at Princeton formed a cross-disciplinary space to work on medical diagnostics that involved electrical and optical engineering and medicine.

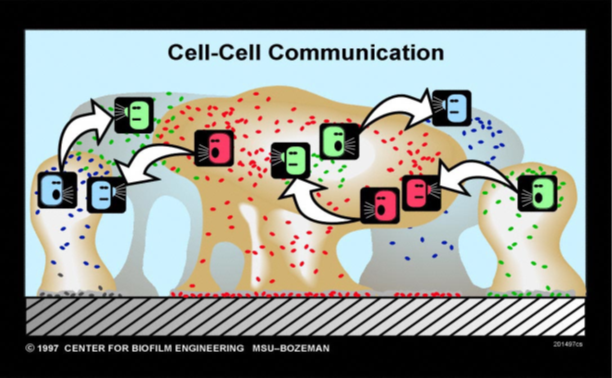

During her plenary address to the ASEE Engineering Research Council in 2004, Lynn Preston pointed to powerful examples of how new discoveries can come from the interface of biology and engineering, such as at the Center for Biofilm Engineering, an ERC at Montana State University.

“Biofilms form when different strains of bacteria bind together in a sticky web wherever there is water. Some biofilms can serve a beneficial role in reaction systems for the treatment of waste-containing liquids or in the bioremediation of contaminated groundwater aquifers. However, detrimental biofilms have been implicated in diseases such as cystic fibrosis and blood poisoning to infected catheters. Others secrete acids that eat away tough metals and minerals corroding the legs of oil derricks and even your teeth.

Before the ERC began to bring the power of engineering and microbiology to bear on the study of bacterial biofilms, our concept of these adherent populations of bacteria was that biofilms stuck to the surface by means of their own slime and the only way to treat them was with chemicals. However, the ERC was determined to understand the biological nature of these films so they could engineer them to control their formation and use.

They began by using confocal microscopy and physical probes that could be used to examine the structure of the films. They found complex, sophisticated architectures. The biofilms were seen to live in slimy towers and mushroom-shaped structures with water channels that carried nutrients to all parts of the community. The ERC team found that the bacteria communicated through chemical sensing mechanisms to form these structures.

More importantly, the ERC team discovered that the introduction of a mutant strain of the bacteria, that did not contain the signaling chemical, caused the towers and structures of the biofilm to collapse. Without the signaling molecule, the cells cannot make a biofilm.

This interdisciplinary team of engineers and biologists has discovered a class of compounds that can prevent biofilm formation. The applications in industry and medicine are legion. We can now manipulate at least one and probably many more behaviors of bacterial cells instead of simply killing them with toxic agents that harm the environment or the host. We can use these simple nontoxic molecules, active blocking analogues, which will be cheap, stable, and environmentally friendly. The whole business of biofilm control, in industry and in medicine, has entered a new era in which chemical manipulation will replace indiscriminate killing with toxic agents. It is a true green revolution that came from the driving desire of engineers to understand a phenomenon in order to control it, and that understanding required the collaboration of biologists and engineers.”[37]

The cartoon of a biofilm in Figure 5-3 illustrates this cell-to-cell communication, which results in an architecture that enables the flow of nutrients within the biofilm.

Figure 5-3: Cell-to-cell communication in a biofilm. (Source: CBE)[38]

ENVIRONMENT AND INFRASTRUCTURE

Five ERCs in this cluster that were focused totally or in part on mitigating or sensing environmental pollution formed cross-disciplinary spaces that joined chemical engineering, electrical engineering, environmental engineering, chemistry, and medicine.

- The NSF/SRC ERC for Environmentally Benign Semiconductor Manufacturing at the University of Arizona joined chemical engineering, electrical engineering, environmental engineering, and mechanical engineering.

- The Wireless Integrated MicroSystems ERC at the University of Michigan devoted part of its effort to developing an environmental pollution sensing system that required the integration of skills in electrical engineering, environmental engineering, and chemistry.

- The Center for Subsurface Sensing and Imaging Systems at Northeastern University devoted part of its efforts to detecting hazardous wastes and other hazardous materials beneath the surface that required joining electrical engineering, optical engineering, environmental engineering, and civil engineering.

- The Center for Environmentally Beneficial Catalysis at the University of Kansas joined chemical engineering with environmental engineering.

- The ERC on Mid-Infrared Technologies for Health and the Environment at Princeton University devoted part of its effort to developing chemical sensing capabilities for environmental monitoring that required joining electrical engineering, optical engineering, chemical engineering, chemistry, and physics.

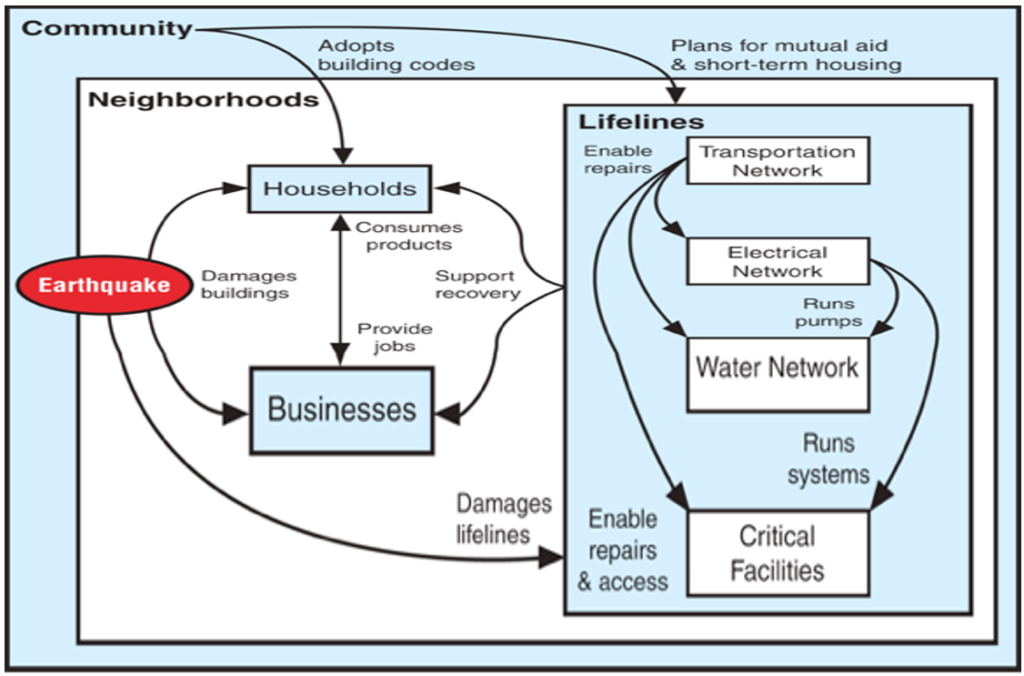

The three Earthquake Engineering Research Centers (EERCs) built complex, cross-disciplinary research platforms that joined earthquake engineering, civil (structural and geotechnical) engineering, geology, and sociology. Each EERC was required to have a research thrust devoted to societal response to earthquake hazards.

MANUFACTURING AND DESIGN

- The Institute for Systems Research (formerly the Center for Systems Research) at the University of Maryland continued to function with its cross-disciplinary team that involved electrical engineering, design automation, computer science, and information science.

- The ERC for Collaborative Manufacturing at Purdue started its new award phase building a team that joined electrical engineering, manufacturing engineering, mechanical engineering, and communications science.

- The Particle Engineering Research Center at the University of Florida built a team that joined mechanical engineering, chemical engineering, chemistry, pharmacy, dentistry, and medicine.[39]

- The Center for Innovative Product Development at MIT joined faculty from mechanical engineering, computer engineering, and the school of business administration.

- The Center for Reconfigurable Machining Systems at the University of Michigan joined mechanical engineering with electrical engineering, optical engineering, systems engineering, and economics.

- The Center for Advanced Fibers and Films at Clemson University joined mechanical engineering with chemical engineering, chemistry, computer science and visualization, and materials science and engineering.

- The ERC for Compact, Efficient Fluid Power at the University of Minnesota built a cross-disciplinary platform that integrated mechanical engineering with electrical engineering and biomedical engineering.[40]

- The ERC for Structured Organic Particulate Systems at Rutgers University integrated chemical engineering, biochemical engineering, mechanical engineering, pharmaceutical engineering, and pharmacy.[41]

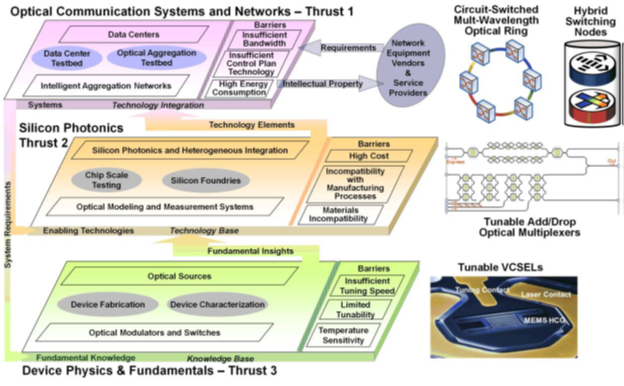

MICRO/OPTOELECTRONICS, COMPUTING AND INFORMATION SYSTEMS

- The Center for Neuromorphic Systems Engineering at CalTech joined faculty from electrical engineering, chemistry, computer science, neurobiology, and medicine.

- The Packaging Research Center at Georgia Tech joined electrical engineering with mechanical engineering, optical engineering,

- The Integrated Media Systems Center at USC joined electrical engineering, computer science, and music.

- The Extreme Ultraviolet Science and Technology ERC at Colorado State University built a robust platform that integrated electrical engineering, optical engineering, biology, chemistry, and physics.

- The Collaborative Adaptive Sensing of the Atmosphere ERC at the University of Massachusetts-Amherst joined electrical engineering with mechanical engineering, atmospheric science, sociology, and public policy.

5-B(a) Gen-2 Strategic Planning

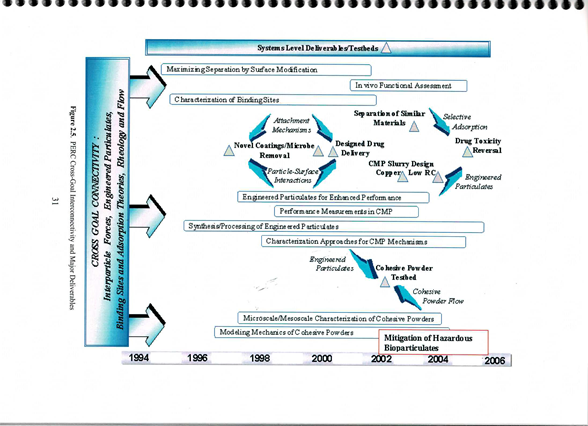

Given the complexity of the engineered systems visions of the ERCs in this period and the scope and complexity of their cross-disciplinary teams, effective strategic research planning became even more important for these Gen-2 ERCs to succeed. These ERCs started out with a framework for strategic research planning built by the Gen-1 ERCs that was based on milestone charts at the center and research thrust levels. These charts plotted out knowledge and technology advances on a timeline over the life of the center. Figure 5-4 is an example of one of the more complex of these charts, developed by the Particle Engineering Research Center at the University of Florida.[42] It shows that developing novel coatings for microbe removal from surfaces was a technology goal in 1998, projected out to the graduation of the Center in 2006. However, the chart does not clearly depict what research would enable that goal or how that goal contributed to higher-level systems goals. This was a typical problem with using milestone charts and not a weakness of that particular ERC.

Therefore, while the milestone charts did serve as a way of organizing the research, helping to keep an ERC’s team focused on its knowledge and technology goals, Preston was concerned that the charts were not effectively showing how the engineered system was a driver for the research and were not depicting the dynamic nature of an ERC’s research program, that is, the push and pull between fundamental knowledge and technology. In addition, there was a tendency to leave the proof-of-concept testbed to the last year of the ERC, whereas it was more likely that intermediate-stage testbeds would be needed to test components before the systems-level testbed could be started.

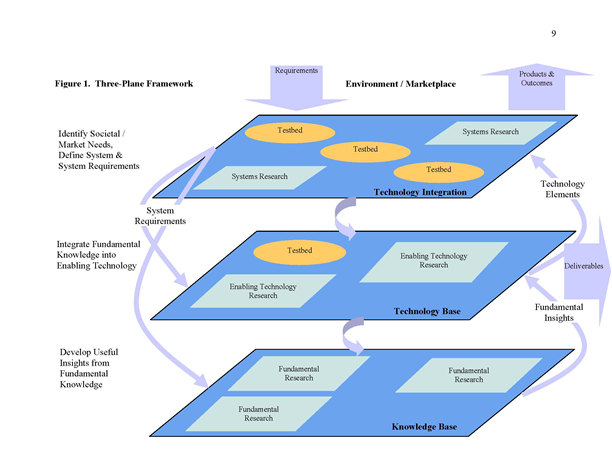

Accordingly, in 1997 Preston developed the 3-plane ERC strategic research planning chart to address these concerns. She worked with Fred Betz and Cheryl Cathey, ERC PDs at the time, to perfect how to show the flow of research, from fundamentals to enabling technology testbeds, to systems-level testbeds. As shown in Figure 5-5, the approach was to put the engineered system testbed(s) and engineered systems-level research at the top of the chart and show through driving arrows down to the fundamental research plane how the system requirements would be addressed through fundamental research. It also visualized how those fundamental insights would flow back up the chart to the enabling technology plane, where enabling technology-level research would occur and enabling technology would be tested and strengthened, eventually delivering technology insights up to the systems plane for testing in a systems-level testbed. The intent was to be able to display the research program in one comprehensive yet simple chart, so that funders and reviewers could better understand the ERC’s research program and deliverables, and faculty and students could see the work that needed to be done and how their skills could contribute to the ERC team’s achieving its goals. It is designed to be flexible and dynamic over time and should not be perceived as a “product production plan.”

Figure 5-4: Milestones for Research, Particle Engineering Research Center, University of Florida (Source: PERC)

The 3-plane chart crystalized Preston’s management of the ERC Program, which has been characterized as “’Engineering Innovation’—here, ‘Engineering’ is used as a verb in the sense that the raison d’être of the ERC Program is to create and foster new technical innovations (i.e., incremental or disruptive improvements to a technology, service, or standard). That is, ERCs are devoted to ‘engineering’ (i.e., creating) new technical innovations.”[43]

This was a significant shift in the way engineers planned their research portfolios. Initially, the intellectual discipline and visualization skills it required were a challenge for some ERCs, so the 3-plane chart met with a mixed reception from the ERC community. However, ERC review teams and industrial supporters welcomed it for the clarity of thought it required of the ERCs. Over time, it became the standard by which to measure the effectiveness of an ERC’s strategic research planning.

Figure 5-5: Standard ERC 3-Plane Chart

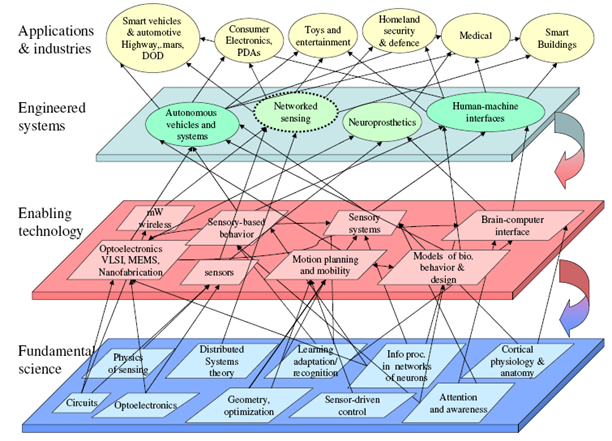

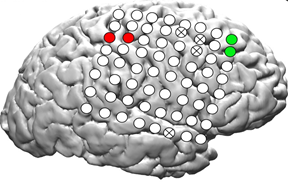

Figure 5-6 shows that the Center for Neuromorphic Systems Engineering (CNSE) organized its research around four systems-level testbeds: autonomous vehicle systems, networked sensing, neuroprosthetics, and human-machine interfaces.

The chart clearly displays how the fundamental research needed to achieve those systems-level testbeds fed into a broad range of enabling technologies that fed specific systems testbeds or, in most cases, enabled several of them. For example, the neural prostheses testbed drove fundamental research in circuits and optoelectronics, which fed into VLSI, optoelectronics, MEMS, and nanofabrication enabling technologies. It also drove fundamental research in distributed systems theory and sensory-based behavior in order to develop the sensory systems for the prostheses. And finally, fundamental knowledge was needed for attenuation and awareness, as well as cortical physiology and anatomy, to develop effective enabling brain-computer interface technology. The final system was used to enable a chimpanzee to manipulate a game to retrieve a “treat” through mind control rather than motor control.

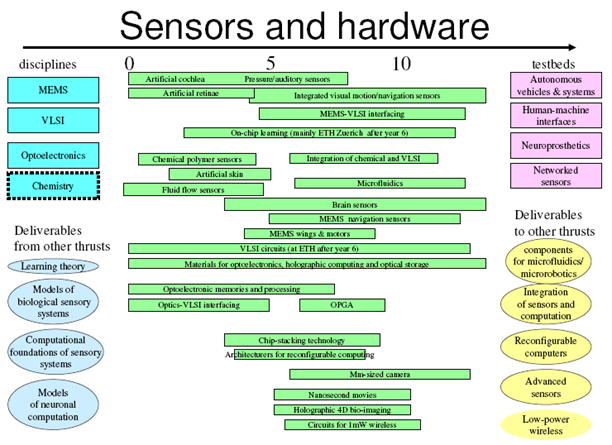

Currall and his team found that the ERC Program’s requirement to couple the 3-plane chart with detailed milestone charts by thrust area resulted in more effective delivery of research findings and technology, as shown in Figure 5-7 from the CalTech ERC.[44]

Figure 5-6: 3-Plane Strategic Research Plan for the Neuromorphic Systems Engineering Center (Source: CNSE)

The CNSE joined engineers with neurobiologists, and the chart enabled those scientists to see more easily how their work contributed to the long-term systems goals of the center. It also enabled many of the neurobiology faculty and students to better understand engineering thinking. As a consequence, the ERC produced a new generation of neuromorphic engineers who fanned out across the country in engineering and science departments, building bridges between the two fields of inquiry. Preston remembers the assembly of young CNSE graduates who gave presentations at the ERC’s “celebration of graduation” from NSF/ERC support in 2006.

Figure 5-7: CNSE Thrust-Level Milestone Chart (Source: CNSE)

While the 3-plane chart improved the management of research to address long-term systems goals, it should be understood that there was always room in an ERC’s research program for “opportunistic” research that was relevant to the long-term goals of the ERC but whose role in fulfilling those goals or setting up new pathways was not yet well understood.

While Preston could see that the 3-plane chart improved an ERC’s communication and research management, she wanted to understand how the ERCs were using it in the day-to-day process of research management and to be sure they weren’t just “trotting it out” for display in their annual reports and annual reviews. In 2004, she and Linda Parker, the ERC Program evaluation specialist, gave a grant to Steven Currall, who was then at Rice University, to study how ERCs used the 3-plane chart in planning. He was from the Rice School of Management and had provided guidance to Professor Vicki Colvin, the PI of the Rice Center for Biological Nanotechnology (which was not an ERC), on how to develop a 3-plane chart for her proposal to NSF for a Nanoscale Science and Engineering Center.

Currall and his team interviewed 22 ongoing ERCs in 2005 to determine the impact of the 3-plane chart on research publication productivity and technology applications. They concluded that:

“…the three-plane framework and a formal process of strategic planning were vital tools for organizing the research endeavor within ERCs. Also, the three-plane framework was a useful tool for illustrating each center’s strategic plan. Yet, the method of implementing the three-plane framework critically determined whether it was beneficial to overall planning formality and quality of planning (i.e. comprehensiveness) and organizational outcomes. The most important determinant of whether planning benefited organizational outcomes was the overall comprehensiveness of the planning, rather than commitment to the planning tool or process.”[45]

The results corroborated Preston’s intent that the chart become a flexible tool that was dynamic throughout the life of the ERC. “As an ERC evolves, it periodically submits a revised three-plane framework, presenting a revised strategic plan to the NSF Program for review and comment.”[46] The study found that most of the ERCs viewed strategic planning and the three-plane framework as valuable. Leaders who had used the chart in their proposals or were familiar with strategic planning found value in top-down planning and became champions of planning and the three-plane framework in their conversations with faculty members within the ERC. A second cohort valued strategic planning but found only “modest” value in the three-plane framework. Currall indicated that this might be because they were still learning how to use it, and Preston surmises that they were most likely also learning how to think from the top down. Currall also found that a number of ERCs placed more emphasis on curiosity-driven research, doing only the minimum required to adhere to the ERC Program’s requirements for planning.[47]

The study found that the ERCs were devising creative ways to display their research and its complexity, especially when they had more than one systems goal. There was some concern about the timeline, as ten years was thought to be too short for most significant advances emanating from the life sciences; some even recommended that there be a precursor center program at NSF that fostered very early-stage interdisciplinary basic research,[48] with presumably a potential for eventual interfaces with engineering.

Currall and his team found that for the 3-plane chart to be an effective strategic planning tool, it needed a champion who fully understood its value and could effectively communicate that to the ERC’s team. They also found that when there was no effective champion, the chart was little used. “In those cases, a preexisting research plan was retrofitted into the three-plane chart and no real changes in future-oriented thinking occurred. The framework was used primarily for communications to the NSF,” as Preston had feared.[49]

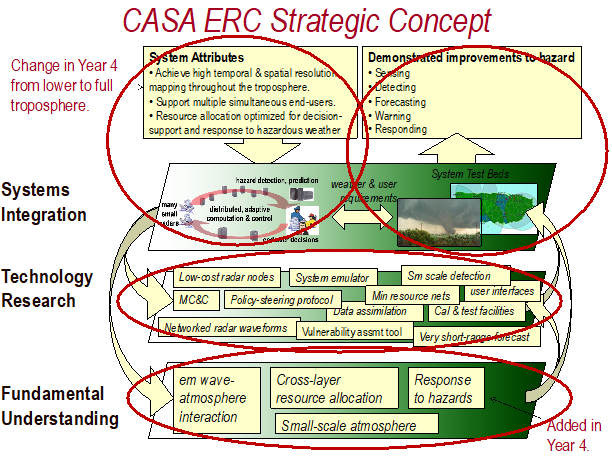

One team she didn’t have to worry about was the team from the Center for Collaborative Adaptive Sensing of the Atmosphere (CASA), as David McLaughlin, the Director, had enthusiastically embraced the 3-plane chart from start-up, even with a sense of humor, as shown in Figure 5-8.

Figure 5-8: The CASA 3-Plane Strategic Planning Cake presented to Lynn Preston by Brenda Philips and David McLaughlin at the ERC’s Start-up Celebration in 2003 (Credit: Janice Brickley)

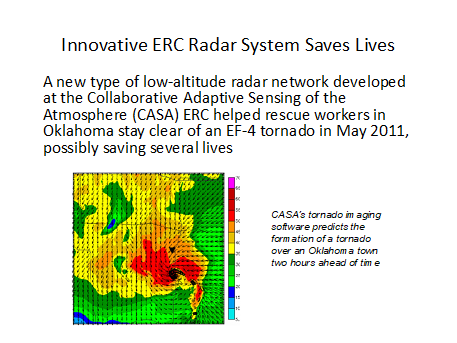

CASA’s strategic plan, shown in Figure 5-9, illustrates the ERC’s complex systems vision—build small-scale radars with sensors that could “collaborate” to communicate their findings to determine whether or not a storm had the telltale hook that signaled a tornado, and transmit that finding to emergency response personnel responsible for tornado warnings.

Figure 5-9: The CASA ERC 3-Plane Strategic Research Plan (Source: CASA)

The need to connect the findings from the radars to those responsible for hazard warnings took a few years to be fully understood and implemented because it required engineers and atmospheric scientists to communicate effectively with one another and with social scientists and emergency response personnel. By Year 4 that effort had begun, and it culminated in a four-radar testbed aimed at supporting hazardous weather response in “Tornado Alley,” Oklahoma. The experimentation between CASA and the National Weather Service was the achievement of then-ILO Brenda Philips, who knew how to communicate between the world of the weather service, with its responsibility for accurate forecasting and timely warnings, and the scientists and engineers who built the CASA system. That test yielded warnings for tornadoes that were three minutes faster than those of the NOAA radar system, a critical time difference.

Another testbed resulted in spotting a tornado two hours ahead of impact and tracking its path—which saved lives, as shown in Figure 5-10, transmitted to Preston by the CASA team. The Oklahoma City Journal Record reported on July 1, 2011, “The data from a new radar system being tested in Newcastle was so precise that refugees from the storm were able to time the closing of the town’s public shelter down to the last minute,” City Manager Nick Nazar said. “The opportunity to use this advanced technology was very helpful and probably saved lives. It was literally up to the minute and it made a difference.”[50]

Figure 5-10: Outcome of Radar System Test in Newcastle, OK (Source: CASA)

5-B(b) Adoption of Earthquake Engineering Research Centers

In 1999, the Assistant Director for Engineering, Eugene Wong, transferred three Earthquake Engineering Research Centers (EERCs) to the ERC Program in order for them to benefit from the ERC Program’s “seasoned” post-award oversight system. The Division of Civil and Mechanical Systems had solicited proposals to create new EERCs in 1996 and three were awarded in 1997. They were:

- Multidisciplinary Center for Earthquake Engineering Research (MCEER), headquartered at the University at Buffalo

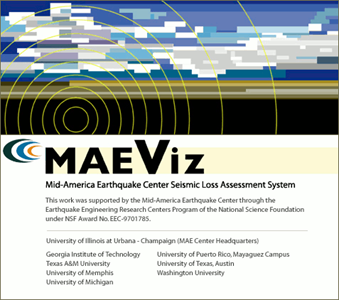

- Mid-America Earthquake Center (MAE), based at the University of Illinois at Urbana-Champaign

- Pacific Earthquake Engineering Research Center (PEER), led by the University of California at Berkeley.

However, the CMS division staff had little or no experience with centers. They issued highly detailed cooperative agreements, required NSF approval for each subaward to each partner of each EERC, and provided little guidance on how to operate a center. The EERCs complained to Dr. Wong about micromanagement and funding delays. As a consequence, he implemented the transfer of these centers and their NSF budgets to the ERC Program.

The transfer brought the following benefits to the EERCs:

- More flexible cooperative agreement

- NSF approval clause for subcontracts removed

- ERC Best Practices Manual providing guidance from other ERCs on:

  - Research

  - Education

  - Industry collaboration

  - Administrative management

- Revised renewal plan that gave the centers one more year before their first renewal review, due to the transfer.

Overall, the research goals of the EERCs were to advance knowledge and technology in earthquake engineering research and earthquake hazard mitigation through the integration of engineering, earth science, and social science. To achieve these goals, each EERC was restructured with the following ERC key features:

- Vision for systems aspects of earthquake hazard mitigation

- Strategic plan to focus and integrate the resources of the EERC to achieve its vision

- Research program integrating engineering, earth science, and social sciences, from discovery to proof-of-concept (testbeds and demonstration projects), involving both undergraduates and graduates in cross-disciplinary research teams.[51]

That transfer meant that the centers had to reorganize their research programs to more effectively join faculty from various disciplines to address the systems issues and opportunities in the field. They could no longer function like a collection of single investigators clustered around the important experimental equipment.

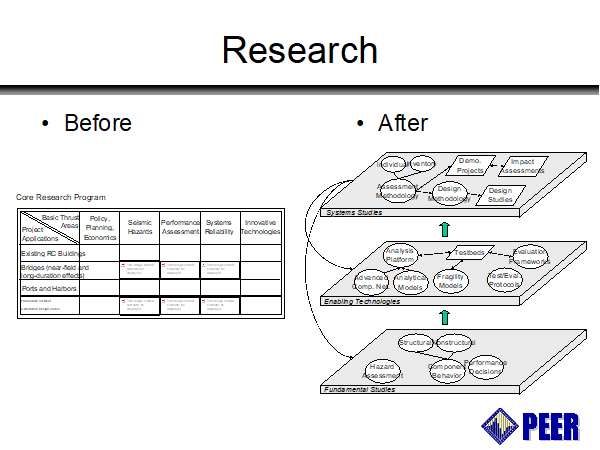

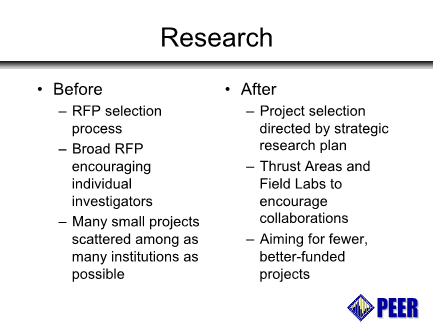

As an example, Figures 5-11 and 5-12 characterize the changes in the PEER research plan introduced by the 3-plane strategic research planning process.[52]

Dr. Joy Pauschke, an ERC PD with earthquake engineering training, was assigned the oversight responsibility for the EERCs. She and Preston visited each of the EERCs in 1999 to bring them up to speed on the ERC Program’s goals for research, education, and industrial collaboration and to become familiar with each center’s goals and research/education teams. These visits included training in how to structure their research programs using the ERC 3-plane strategic research planning tool. To Preston, there was no better example of an engineered system than a hazard mitigation technology designed to enable a structure to withstand the forces of an earthquake as they move through the earth and soil and impact the structure. Although each EERC was set up to build research programs that would develop and test these technologies, there was a tendency to break down each effort by discipline, with little incentive to integrate the knowledge from a systems perspective.

Figure 5-11: PEER EERC Strategic Research Plan in 2000 (Source: PEER)

Figure 5-12: Project Selection Before the ERC 3-Plane Strategic Plan and After (Source: PEER)

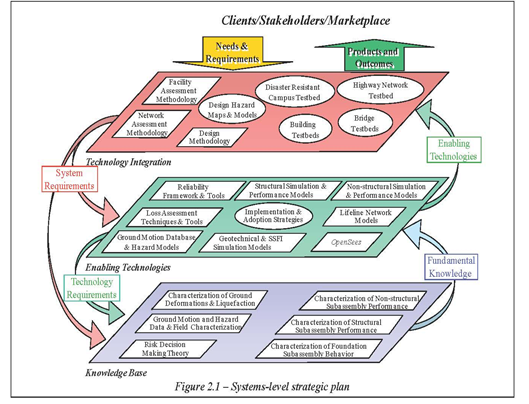

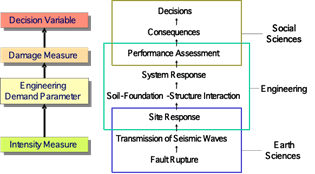

The systems perspective of PEER focused on Performance Based Earthquake Engineering (PBEE) technologies for buildings and infrastructure to meet the diverse economic and safety needs of owners and society. The center laid the groundwork and made inroads toward creating the data, models, and the performance criteria and applied the performance-based techniques to testbeds and studies to quantify the expected performance of current engineering design practices. PEER’s 3-plane strategic plan, shown in Figure 5-13, illustrates how the plan is “driven by the Needs and Requirement of Clients, Stakeholders, and the Marketplace, involves research with the Technology Integration, Enabling Technologies, and Knowledge Base Planes; and produces Products and Outcomes that respond to the Needs and Requirements” of users. [53]

Figure 5-13: PEER EERC’s Strategic Research Plan[54] (Source: PEER)

The chart demonstrates how the research planning strategy supported fundamental research in the knowledge base plane in ground motion, risk decision-making theory, non-structural performance assessment, foundation subassembly behavior, and other fundamental areas. This research fed into the loss assessment and reliability frameworks and tools, ground motion hazard models, and structural and other component simulation and performance models, which supported the real-world systems testbeds: highways, bridges, and building systems in the Bay Area. The systems/Technology Integration Plane in the chart represents the systems-level applications and studies in PBEE. The system includes the seismic environment, the soil-foundation-structure-nonstructural-contents systems, and the facility-impacted stakeholder segments. This plane contains the over-arching impact of PEER’s research program, specifically the development of assessment and design methodologies that integrate the seismic-tectonic, infrastructure, and socio-economic components of earthquake engineering into a system that can be analyzed and on which rational decisions can be made.[55]

In PEER, “central to the enabling technologies are analytical models, ground motion libraries, and assessment criteria to simulate the performance of buildings and bridges. These are integrated through the OpenSees …. software platform, which enables nonlinear simulations and visualization of response.”[56] OpenSees models have been validated with data from laboratory tests and data recorded during past earthquakes. The Open System for Earthquake Engineering Simulation (OpenSees) facilitates the development and implementation of models for structural behavior, soil and foundation behavior, and damage measures. In addition to improved models for reinforced concrete structures, shallow and deep foundations, and liquefiable soils, OpenSees was designed to take advantage of the latest developments in databases, reliability methods, scientific visualization, and high-end computing. It offered greater flexibility in combining modules to solve classes of simulation problems and allowed researchers from different disciplines to combine their perspectives for integrated implementation.[57]

The MAE EERC developed a decision tool, called MAEViz, for public policy-makers to use in determining the impact of decisions to retrofit buildings, transportation systems, and bridges to withstand an earthquake, based on consequence-based risk management. “Consequence-based Risk Management is a new paradigm for seismic risk reduction across regions or systems that incorporates identification of uncertainty in all components of seismic risk modeling and quantifies the risk to societal systems and subsystems.”[58] “MAEViz provides decision tools for public policy makers and others; at the request of the public policy advisors to the EERC, the outcomes of those tools are presented through visualization to enable policy makers who are not engineers to comprehend the consequences of risk reduction decisions.”[59] Thus, MAEViz used a visually based, menu-driven system to generate damage estimates from scientific and engineering principles and data, test multiple mitigation strategies, and support modeling efforts to estimate higher-level impacts of earthquake hazards, such as impacts on transportation networks and on social or economic systems. It enabled policy-makers and decision-makers to ultimately develop risk reduction strategies and implement mitigation actions. At the same time, it became a focal point for the ability of the Center to drive the integration of disciplines and to facilitate the management and funding of the research within the Center.
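The consequence-based comparison described above can be illustrated with a minimal Python sketch. This is not MAEViz code: the three-building inventory, the damage probabilities, the dollar values, and the rule that a retrofit halves the damage probability are all hypothetical assumptions chosen only to show how alternative mitigation strategies might be ranked by expected total cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Building:
    name: str
    value: float          # replacement value in dollars (illustrative)
    p_damage: float       # chance of severe damage in a scenario quake (illustrative)
    retrofit_cost: float  # cost of retrofitting (illustrative)


# Hypothetical assumption: a retrofit halves the probability of severe damage.
RETROFIT_FACTOR = 0.5


def expected_loss(buildings, retrofitted):
    """Expected damage loss plus retrofit spending for one strategy."""
    total = 0.0
    for b in buildings:
        if b.name in retrofitted:
            total += b.retrofit_cost + b.value * b.p_damage * RETROFIT_FACTOR
        else:
            total += b.value * b.p_damage
    return total


def rank_strategies(buildings, strategies):
    """Return (strategy name, expected total cost) pairs, cheapest first."""
    results = {name: expected_loss(buildings, chosen)
               for name, chosen in strategies.items()}
    return sorted(results.items(), key=lambda item: item[1])


# Made-up inventory and candidate strategies, for illustration only.
INVENTORY = [
    Building("hospital", 50_000_000, 0.20, 2_000_000),
    Building("school", 10_000_000, 0.30, 1_000_000),
    Building("warehouse", 2_000_000, 0.10, 500_000),
]

STRATEGIES = {
    "do nothing": set(),
    "retrofit hospital": {"hospital"},
    "retrofit all": {"hospital", "school", "warehouse"},
}

if __name__ == "__main__":
    for name, cost in rank_strategies(INVENTORY, STRATEGIES):
        print(f"{name}: expected total cost ${cost:,.0f}")
```

A real tool of this kind would replace the single damage probability with hazard and fragility models and would carry uncertainty through every step; the sketch only shows the final comparison that a visualization would present to a non-engineer.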

Eventually, OpenSees and MAEViz formed the computational engines for the Computational Modeling and Simulation Center for Natural Hazards Engineering, headquartered at the University of California, Berkeley.

Attached here is a 2008 summary of NSF’s investment in EERCs and its impacts, written by NSF ERC Program Director Dr. Vilas Mujumdar.[60]

5-C Gen-3

5-C(a) Gen-3 Awards (2008–2012)

The Gen-3 period spans from 2008 through 2017. Since Lynn Preston retired in 2014 before the Class of 2015 was awarded, this document will focus only on the 12 ERCs awarded in the first three Gen-3 classes—2008, 2011, and 2012—with which she has familiarity. Three new Gen-3 ERCs were also awarded in 2015 and four more in 2017. Thus, the listings in this section are current as of 2014.

The 12 Gen-3 ERCs in the first three classes and their systems visions are summarized in the file “Gen-3 Systems Visions.”

5-C(b) Cross-disciplinary Research Platforms Built by the Gen-3 ERCs

BIOLOGICAL AND BIOMEDICAL ENGINEERING JOINING ENGINEERING, BIOLOGY, AND MEDICINE

——————————————————————

CASE STUDY: The Gen-3 ERCs functioning in this technology sector continue to build new spaces in research between engineering, biology, and medicine. They benefitted from the lessons learned about how to build and maintain these partnerships, which were transmitted to them by the older, more experienced Gen-2 ERCs at the ERC Annual Meetings’ working groups, which were organized by sector. One, the Center for Sensorimotor Neural Engineering (CSNE) at the University of Washington (UW), was breaking new ground by forming new partnerships among engineering, neuroscience, and ethics. At the ERC Annual Meeting, the CSNE team took away lessons on engineering-neuroscience partnerships from Ted Berger, leader of the cortical implant team at the Biomimetic MicroElectronic Systems (BMES) ERC at USC, and from Christof Koch, one of the PIs of the Center for Neuromorphic Systems Engineering (CNSE) ERC at Caltech, which rested on a partnership between engineers and neuroscientists; it took away lessons in ethics from the biological risk efforts of the Synberc ERC at UC Berkeley. In addition, Tom Daniel, who served as the interim CSNE Director, was a member of the NSF site visit review team to Caltech’s CNSE prior to developing the CSNE proposal to NSF.

At times an ERC would become a role model and stimulant for new cross-disciplinary partnerships among faculty in engineering and the sciences. This was more prevalent when a strong partnership was formed between engineers and scientists in the ERC, so that the innovation-driven approach of engineers was transferred to scientists. An example of this occurred at CSNE. Tom Daniel, a biologist, took over the leadership of the Center at start-up when the PI, Yoky Matsuoka, left the university shortly after the award was made. After Tom stabilized the ERC over a few years, two ERC faculty members, Rajesh Rao and Chet Moritz, took over its leadership. Tom returned to his biology roots, but his experience with the ERC way of thinking and of leading research teams with the ERC strategic planning approach led him to head up the UW Institute for Neuroengineering (UWIN) (http://uwin.washington.edu/) in partnership with Adrienne Fairhall, from the Physiology and Biophysics department. UWIN is a privately funded program that provides new matching funds to the CSNE as well as a host of other programs promoting neuroengineering and computational neuroscience on campus, including the new Air Force Center of Excellence on Nature Inspired Flight Technologies (http://nifti.washington.edu/), which Tom also directs, and a WRF-Moore-Sloan Data Science Institute (http://escience.washington.edu/). As he puts it:

“The most critical thing to realize is that the CSNE at the UW led to many new programs, ranging from new degree options to new private funding and new attention to the domain. Even more exciting was the recruitment of three new female faculty in this space: Bingni Brunton (Data Science and Biology), Azadeh Yazdan (BioE and EE) and Amy Orsborn (BioE and EE). We now boast 50 faculty members who consider themselves core to the UW Neuroengineering community (http://uwin.washington.edu/faculty/). None of this would have come about without the CSNE. I must say that the lessons we learned from running an ERC and from the framing and structure it provided for coordinating complex systems of collaborations among scientists, engineers—and even philosophers!—were instrumental in our ability to keep the CSNE going, to leverage entire new programs (UWIN, NIFTI, eScience), and to begin the new broad interests at the UW and nationally in this domain.”[61]

——————————————————————

The four Gen-3 ERCs in this sector are:

- Center for Biorenewable Chemicals, Iowa State University, joined biochemical engineering, chemical engineering, biology, chemistry, genetics, life-cycle analysis, and economics.[62]

- ERC for Revolutionizing Metallic Biomaterials, North Carolina A&T State University, joined biomedical engineering, materials science and engineering, medicine (orthopedics, neurosurgery), and clinical pathophysiology.[63]

- NSF Engineering Research Center for Sensorimotor Neural Engineering (later renamed Center for Neurotechnology), University of Washington, joined bioengineering, electrical engineering, mechanical engineering, computer science, biology, neural engineering, neural ethics, neurobiology, neurological surgery, physiology and biophysics, radiology, rehabilitation medicine, speech and hearing, and statistics.[64]

- Nanosystems ERC for Advanced Self-Powered Systems of Integrated Sensors and Technologies, North Carolina State University, joined electrical engineering with biomedical engineering, chemical and biomolecular engineering, computer engineering, mechanical engineering, behavioral health, and computer science.[65]

ENERGY, SUSTAINABILITY, AND INFRASTRUCTURE

- ERC for Quantum Energy and Sustainable Solar Technologies, Arizona State University, joined electrical and optoelectronic engineering with mechanical engineering, materials science and engineering, energy and environmental policy, social science, and physics.[66]

- ERC for Re-Inventing America’s Urban Water Infrastructure, Stanford University, joined civil engineering with environmental engineering, mechanical engineering, architecture and urban design, earth sciences, political science, urban water policy.[67]